A Summary on SSD & FTL

Summary of my study notes, also posted as WeChat articles.

A brief summary recording the SSD and FTL knowledge I have collected.

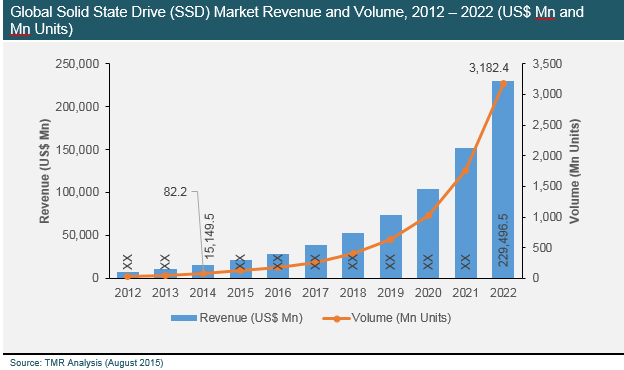

SSD Market Growth

The nearly 100% growth rate is why everybody is looking at SSDs.

- Source: TMR Analysis (August 2015)

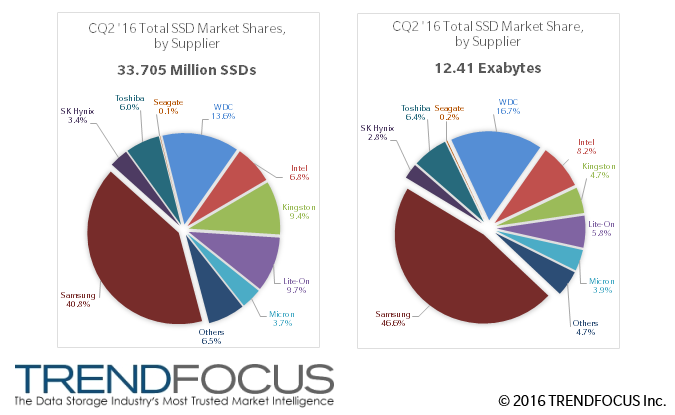

- Source: TrendFocus (2016)

SSD Chip Structure

Flash chip

- 1 Chip/Device -> multiple DIEs

- 1 DIE -> several Planes

- 1 Plane -> thousands of Blocks

- Planes can be accessed in parallel

- 1 Block -> hundreds of Pages

- Block is the unit of erasing

- 1 Page -> usually 4 or 8 KB + a few hundred bytes of hidden space

- Page is the unit of read and write

- Cells: SLC, MLC, TLC: basic bit storage unit

- Max P/E cycles: MLC from 1500 to 10,000; SLC up to 100,000
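To make the hierarchy above concrete, here is a minimal sketch that splits a flat physical page number into (die, plane, block, page). The geometry constants and the ordering of the fields are assumptions picked for illustration, not the layout of any real chip.

```python
from typing import NamedTuple

# Hypothetical geometry, chosen only for illustration.
DIES_PER_CHIP = 4
PLANES_PER_DIE = 2
BLOCKS_PER_PLANE = 2048
PAGES_PER_BLOCK = 256
PAGE_SIZE_KB = 8


class FlashAddress(NamedTuple):
    die: int
    plane: int
    block: int
    page: int


def decompose(page_number: int) -> FlashAddress:
    """Split a flat physical page number into (die, plane, block, page)."""
    rest, page = divmod(page_number, PAGES_PER_BLOCK)
    rest, block = divmod(rest, BLOCKS_PER_PLANE)
    die, plane = divmod(rest, PLANES_PER_DIE)
    if die >= DIES_PER_CHIP:
        raise ValueError("page number is beyond the chip capacity")
    return FlashAddress(die, plane, block, page)


def chip_capacity_gib() -> float:
    """Total user-visible capacity of the hypothetical chip in GiB."""
    pages = DIES_PER_CHIP * PLANES_PER_DIE * BLOCKS_PER_PLANE * PAGES_PER_BLOCK
    return pages * PAGE_SIZE_KB / (1024 * 1024)
```

With these numbers the chip holds 32 GiB, and erasing one block wipes 256 pages (2 MiB) at once, which is why the erase unit matters so much for the FTL.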

References

- Alanwu’s blog: [1668609] [1544227]

- Storage architecture

MLC vs eMLC vs SLC

Cell comparison

- SLC - Expensive. Fast, reliable, high P/E

- MLC - Consumer grade. Costs 2-4x less than SLC, has about 10x fewer P/E cycles than SLC, lower write speed, less reliable

- eMLC - Enterprise (grade) MLC. Better controller to manage wear-out and error-correction.

- TLC - Championed by Samsung

Flash Reliability in Production: The Expected and the Unexpected (Google) [2016, 14 refs]

- “We see no evidence that higher-end SLC drives are more reliable than MLC drives within typical drive lifetimes” (this compares whole drives, not cells)

References

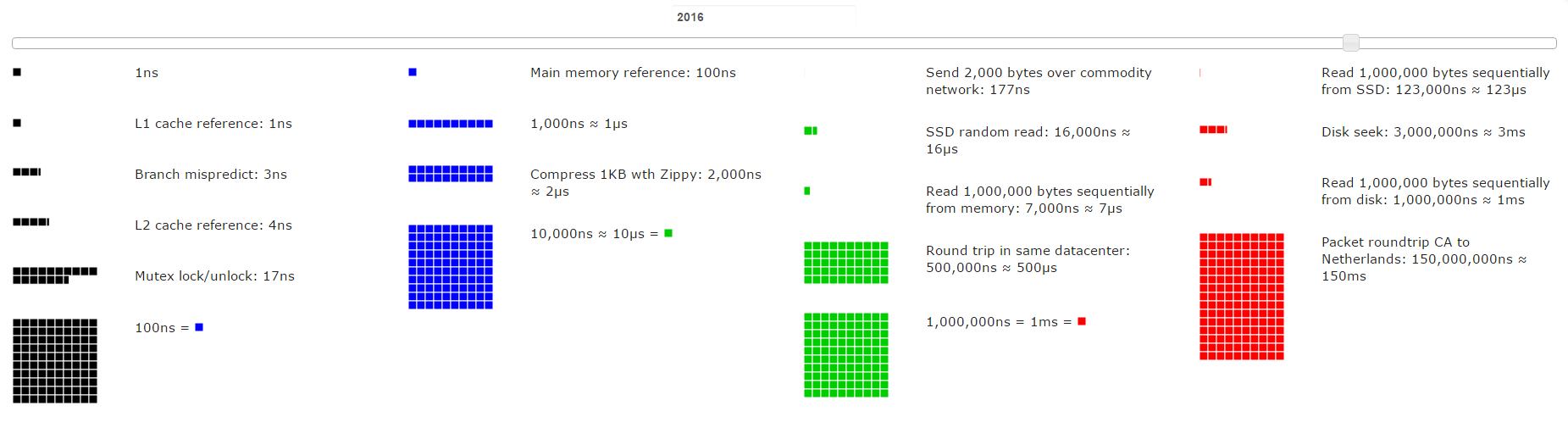

Latency Numbers

Latency Numbers Every Programmer Should Know

- Cache access: ~1ns

- Memory access: ~100ns

- SSD access: ~10μs

- Disk access: ~10ms

- Datacenter net RTT: ~500μs
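The gaps are easier to feel as ratios. A small sketch using only the rough figures listed above (the dictionary keys are my own labels):

```python
# Order-of-magnitude latencies from the list above, in nanoseconds.
LATENCY_NS = {
    "cache": 1,
    "memory": 100,
    "ssd": 10_000,
    "datacenter_rtt": 500_000,
    "disk": 10_000_000,
}


def times_slower(slow: str, fast: str) -> float:
    """How many times slower `slow` is than `fast`."""
    return LATENCY_NS[slow] / LATENCY_NS[fast]
```

For example, `times_slower("disk", "ssd")` gives 1000.0, and `times_slower("datacenter_rtt", "ssd")` gives 50.0: with these figures a network round trip costs far more than the SSD access itself.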

SSD Interfaces

SSD Interfaces (not including NVRAM’s)

- SATA/SAS

- Traditional HDD interface, now mapped to SSD

- PCIe

- SSDs are so fast that SATA/SAS bus bandwidth is not enough.

- Attach the SSD directly to the PCIe bus. Much faster.

- NVMe

- Builds on PCIe. The native interface for flash media.

References

- What’s the difference between SATA, PCIe and NVMe

- Clarification of terminology: SSD vs M.2, vs PCIe vs. NVMe

FTL - Flash Translation Layer

FTL functionalities

- Interface Adaptor

- Map flash interface to SCSI/SATA/PCIe/NVMe interface

- Bad Block Management

- SSD records its bad blocks at first run

- Logical Block Mapping

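- Map logical addresses to physical addresses

- Wear-levelling

- Spread P/E cycles evenly across blocks so that no block wears out early

- Garbage Collection

- Reclaim pages holding stale data, needed because NAND must erase a whole block before its pages can be rewritten

- Write Amplification

- Keep the data physically written to flash as close as possible to what the user actually wrote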
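How mapping, wear-levelling, garbage collection and write amplification interact is easier to see in a toy. The sketch below is a page-mapped FTL held entirely in memory; the class name, the greedy victim selection and the "least-erased free block" rule are my own simplifications, not how any particular controller works.

```python
class ToyFTL:
    """Page-mapped toy FTL: out-of-place writes, greedy GC, wear-aware allocation."""

    def __init__(self, num_blocks=8, pages_per_block=4):
        # Assumes num_blocks >= 2 and a logical space smaller than the physical one.
        self.pages_per_block = pages_per_block
        self.l2p = {}  # logical page -> (block, page)
        # Reverse map: physical page -> logical page, None if free or stale.
        self.owner = [[None] * pages_per_block for _ in range(num_blocks)]
        self.erase_count = [0] * num_blocks
        self.free_blocks = list(range(1, num_blocks))
        self.active, self.next_page = 0, 0  # block currently being filled
        self.user_writes = 0
        self.flash_writes = 0

    def write(self, lpn):
        self.user_writes += 1
        self._program(lpn)

    def _program(self, lpn):
        while self.next_page == self.pages_per_block:
            self._open_block()
        old = self.l2p.get(lpn)
        if old is not None:
            self.owner[old[0]][old[1]] = None  # old copy becomes stale
        self.owner[self.active][self.next_page] = lpn
        self.l2p[lpn] = (self.active, self.next_page)
        self.next_page += 1
        self.flash_writes += 1

    def _open_block(self):
        # Wear-levelling: prefer the free block with the fewest erases.
        block = min(self.free_blocks, key=lambda b: self.erase_count[b])
        self.free_blocks.remove(block)
        self.active, self.next_page = block, 0
        if not self.free_blocks:
            self._collect()

    def _valid(self, block):
        return sum(lpn is not None for lpn in self.owner[block])

    def _collect(self):
        # Garbage collection: pick the block with the fewest valid pages.
        candidates = [b for b in range(len(self.owner)) if b != self.active]
        victim = min(candidates, key=lambda b: (self._valid(b), self.erase_count[b]))
        if self._valid(victim) == self.pages_per_block:
            raise RuntimeError("no reclaimable pages: logical space is too large")
        for lpn in list(self.owner[victim]):
            if lpn is not None:
                self._program(lpn)  # relocation: extra flash writes
        self.owner[victim] = [None] * self.pages_per_block
        self.erase_count[victim] += 1
        self.free_blocks.append(victim)

    def write_amplification(self):
        return self.flash_writes / self.user_writes
```

Every page relocated by `_collect` counts as a flash write but not as a user write, so `write_amplification()` rises above 1 whenever the victim block still holds valid data.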

References

- Understanding FTL

- Alanwu’s blog: [1427101]

FTL - Hybrid-level Mapping

Logical to physical address mapping

- Block-level mapping: too coarse

- Page-level mapping: too much metadata

- Hybrid-level mapping: what is used today
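A back-of-the-envelope calculation shows why pure page-level mapping is expensive. The drive size, page size, block size and 4-byte entry below are assumptions for illustration only.

```python
def mapping_table_bytes(capacity_bytes: int, unit_bytes: int, entry_bytes: int = 4) -> int:
    """Size of a flat mapping table with one entry per mapping unit."""
    return (capacity_bytes // unit_bytes) * entry_bytes


GIB, MIB, KIB = 2**30, 2**20, 2**10

# Hypothetical 512 GiB drive with 8 KiB pages and 2 MiB blocks.
page_level = mapping_table_bytes(512 * GIB, 8 * KIB)   # one entry per page
block_level = mapping_table_bytes(512 * GIB, 2 * MIB)  # one entry per block
```

Here `page_level` comes to 256 MiB and `block_level` to 1 MiB. Hybrid schemes try to stay near the second number while keeping page-granularity flexibility for a small set of log blocks.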

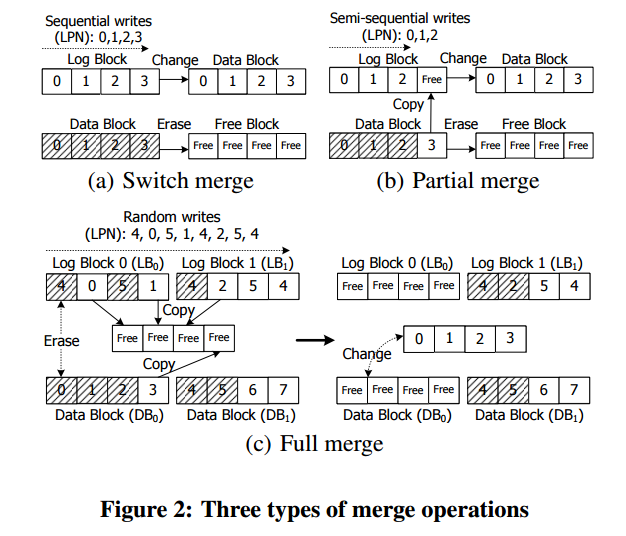

A Space-efficient Flash Translation Layer for CompactFlash Systems [2002, 988 refs]

- The paper that introduced hybrid-level mapping FTL

- The key idea is to maintain a small number of log blocks in the flash memory to serve as write buffer blocks for overwrite operations
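The log-block idea can be sketched in a few lines. This is a simplified in-memory model under my own assumptions (one log block per data block, oldest-first eviction, a hypothetical `LogBlockFTL` class); the paper's actual scheme has more detail.

```python
PAGES_PER_BLOCK = 4  # hypothetical, kept tiny for readability


class LogBlockFTL:
    """Block-mapped data blocks plus a few page-mapped log blocks for overwrites."""

    def __init__(self, max_log_blocks=2):
        self.data = {}  # logical block -> list of page contents (None = unwritten)
        self.logs = {}  # logical block -> [(page offset, content), ...] in write order
        self.max_log_blocks = max_log_blocks
        self.merges = 0

    def write(self, lpn, content):
        lbn, off = divmod(lpn, PAGES_PER_BLOCK)
        block = self.data.setdefault(lbn, [None] * PAGES_PER_BLOCK)
        if block[off] is None:
            block[off] = content  # first write goes straight to the data block
            return
        # Overwrite: append to this block's log block instead of erasing.
        if lbn not in self.logs and len(self.logs) >= self.max_log_blocks:
            self._merge(next(iter(self.logs)))  # evict the oldest log block
        log = self.logs.setdefault(lbn, [])
        log.append((off, content))
        if len(log) == PAGES_PER_BLOCK:
            self._merge(lbn)  # log block is full

    def _merge(self, lbn):
        # Combine data block and log block into a fresh block, newest copy wins.
        merged = list(self.data[lbn])
        for off, content in self.logs.pop(lbn):
            merged[off] = content
        self.data[lbn] = merged
        self.merges += 1

    def read(self, lpn):
        lbn, off = divmod(lpn, PAGES_PER_BLOCK)
        for o, content in reversed(self.logs.get(lbn, [])):
            if o == off:
                return content  # newest copy lives in the log block
        block = self.data.get(lbn)
        return None if block is None else block[off]
```

Overwrites cost one page program until the log block fills or is evicted; only then does the expensive merge (copy plus erase) happen.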

References

- Alanwu’s blog: [1427101]

FTL - Hybrid-level Mapping (More 1)

A reconfigurable FTL (flash translation layer) architecture for NAND flash-based applications [2008, 232 refs]

- Good paper to introduce flash structures and detailed concepts

- “As an approach that compromises between page mapping and block mapping, … A hybrid mapping scheme … was first presented by Kim et al. [2002]. The key idea …

- To solve this problem of the log block scheme, the fully associative sector translation (FAST) scheme has been proposed [Lee et al. 2006] …

- Chang and Kuo [2004] proposed a flexible management scheme for large-scale flash-memory storage systems …

- Kang et al. [2006] proposed a superblock-mapping scheme termed “N to N + M mapping.” … In this scheme, a superblock consists of …”

FTL - Hybrid-level Mapping (More 2)

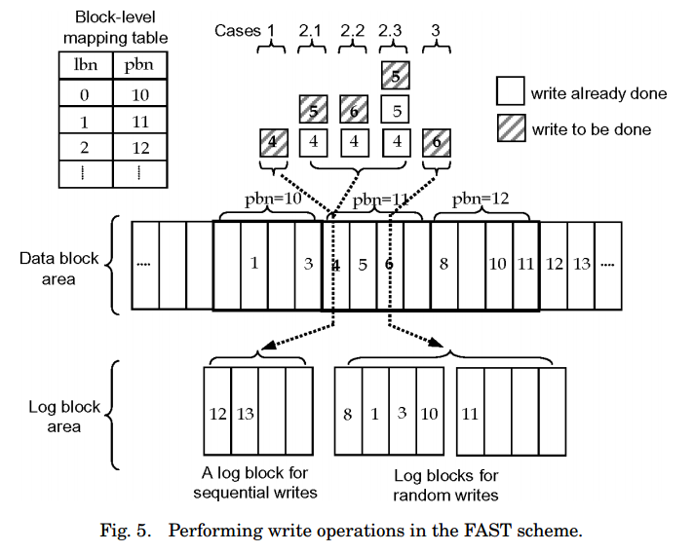

A Log Buffer-Based Flash Translation Layer Using Fully-Associative Sector Translation [2007, 778 refs]

- Usually called FAST. Improves on “A Space-efficient Flash Translation Layer for CompactFlash Systems”

- Key ideas

- In FAST, one log block can be shared by all the data blocks.

- FAST also maintains a single log block, called the sequential log block, to handle sequential writes

FTL - Hybrid-level Mapping (More 3)

A Superblock-based Flash Translation Layer for NAND Flash Memory [2006, 368 refs]

- The flash is divided into superblocks; each superblock consists of N data blocks + M log blocks

- In this way, the set associativity discussed in FAST becomes fully configurable

- Garbage collection overhead is reduced by up to 40%

- Exploit “block-level temporal locality” by absorbing writes to the same logical page into the log block

- Exploit “block-level spatial locality” to increase storage utilization by letting several adjacent logical blocks share a U-block

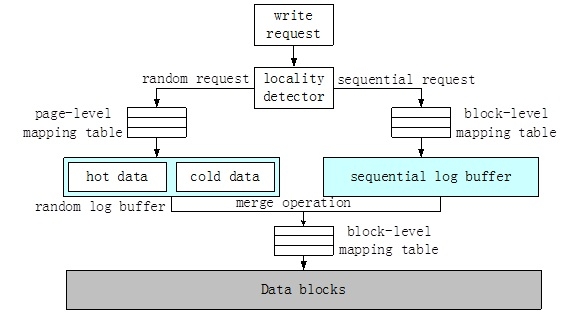

FTL - Hybrid-level Mapping (More 4)

LAST: Locality-Aware Sector Translation for NAND Flash Memory-Based Storage Systems [2008, 298 refs]

- Improves on “A Log Buffer-Based Flash Translation Layer Using Fully-Associative Sector Translation” and “A Superblock-based Flash Translation Layer for NAND Flash Memory”

- Key ideas

- LAST partitions the log buffer into two parts: sequential log buffer and random log buffer

- The sequential log buffer consists of several sequential log blocks, and one sequential log block is associated with only one data block

- The random log buffer is partitioned into hot and cold partitions. By clustering the data with high temporal locality within the hot partition, we can reduce the merge cost of the full merge
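The routing described above can be sketched as a small classifier. The thresholds and the recency-based hot test are my own stand-ins for illustration; the paper's detectors are more elaborate, and `LogBufferRouter` is a hypothetical name.

```python
class LogBufferRouter:
    """Route each write to the sequential, random-hot or random-cold log partition."""

    def __init__(self, seq_threshold_pages=8, hot_interval=16):
        # Both thresholds are assumed values, not taken from the paper.
        self.seq_threshold_pages = seq_threshold_pages
        self.hot_interval = hot_interval
        self.last_write = {}  # logical page -> logical time of its last random write
        self.clock = 0        # counts write requests

    def route(self, lpn, num_pages=1):
        self.clock += 1
        if num_pages >= self.seq_threshold_pages:
            return "sequential"  # large request: treat as sequential
        previous = self.last_write.get(lpn)
        self.last_write[lpn] = self.clock
        if previous is not None and self.clock - previous <= self.hot_interval:
            return "random-hot"  # rewritten again soon: high temporal locality
        return "random-cold"
```

Grouping hot pages together means a hot log block tends to contain mostly stale pages by the time it is reclaimed, which is what makes the eventual merge cheap.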

FTL - Hybrid-level Mapping (More 5)

Implications

- Although FTL does remapping, sequential writes still benefit SSDs because they relieve GC (more switch merges)
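Why sequential writes lead to cheaper merges can be shown with the usual switch / partial / full merge taxonomy of log-block FTLs. The function below is a sketch with a simplified cost model (pages copied only, worst case for a full merge); `merge_cost` is a hypothetical helper and expects a non-empty log.

```python
def merge_cost(log_offsets, pages_per_block):
    """Return (merge kind, pages copied) for a log block about to be reclaimed.

    log_offsets lists the page offsets in the order they were written to the log.
    """
    offsets = list(log_offsets)
    in_order = offsets == list(range(len(offsets)))
    if in_order and len(offsets) == pages_per_block:
        # The log block already is the complete new data block: just swap it in.
        return "switch", 0
    if in_order:
        # Copy only the tail that is still missing from the log block.
        return "partial", pages_per_block - len(offsets)
    # Pages are scattered: rebuild the whole block elsewhere (worst case).
    return "full", pages_per_block
```

A purely sequential overwrite of a block yields `("switch", 0)`, while the same four pages written in random order yield `("full", 4)`.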

Image from: FTL LAST

Bypassing FTL - Open-channel SSD

Although FTL is powerful and dominant, some people try to get rid of it

- No nondeterministic slowdowns by background tasks of FTL

- Reduce the latency introduced by FTL

- Application customized optimization for flash operation

Open-channel SSD

- SSD hardware exposes its internal channels directly to the application. No remapping by FTL

- An Efficient Design and Implementation of LSM-Tree based Key-Value Store on Open-Channel SSD (Baidu) [2014, 25 refs]

- Optimize the LSM-tree-based KV store (based on LevelDB) on Open-channel SSD. 2x+ throughput improvement.

- SDF: Software-Defined Flash for Web-Scale Internet Storage Systems (Baidu) [2014, 67 refs]

- SSD (with FTL) bandwidth ranges from 73% to 81% for read, and 41% to 51% for write, of the raw NAND bandwidth

- Optimizing RocksDB for Open-Channel SSDs

- Control placement, exploit parallelism, schedule GC and minimize over-provisioning, control IO scheduling

- Linux kernel integration

Application-Managed Flash [2016, 6 refs]

- Expose the block IO interface and the erase-before-overwrite to applications

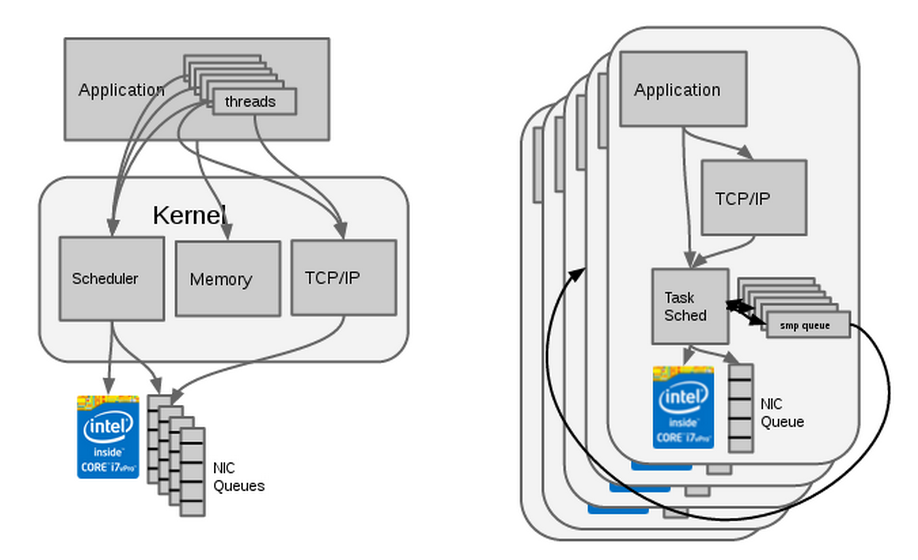

Design Shifts - Seastar

ScyllaDB Seastar

- Originally designed to build ScyllaDB, a 10x faster Cassandra. Now open source

- FCP async programming: future, continuation, promise. Sharded application design.

- User-space TCP/IP stack, bypassing the kernel, via Intel DPDK

- User-space storage stack, bypassing the kernel, via Intel SPDK (WIP)
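Seastar itself is C++, but the sharded, future-based shape can be mimicked with Python's asyncio. In this sketch each shard owns a private dict and is driven only by its own task, so no locks are needed; `Shard`, `ShardedStore` and the CRC-based routing are illustrative assumptions, not Seastar's API.

```python
import asyncio
import zlib


class Shard:
    """Owns private state; only its own task touches it, so no locking."""

    def __init__(self):
        self.data = {}
        self.inbox = asyncio.Queue()

    async def run(self):
        while True:
            op, key, value, reply = await self.inbox.get()
            if op == "stop":
                return
            if op == "put":
                self.data[key] = value
            reply.set_result(self.data.get(key))  # fulfil the caller's future


class ShardedStore:
    def __init__(self, num_shards=4):
        self.shards = [Shard() for _ in range(num_shards)]

    def _shard_for(self, key):
        return self.shards[zlib.crc32(key.encode()) % len(self.shards)]

    async def call(self, op, key, value=None):
        reply = asyncio.get_running_loop().create_future()
        await self._shard_for(key).inbox.put((op, key, value, reply))
        return await reply


async def demo():
    store = ShardedStore()
    workers = [asyncio.create_task(s.run()) for s in store.shards]
    await store.call("put", "user:1", "alice")
    result = await store.call("get", "user:1")
    for shard in store.shards:
        await shard.inbox.put(("stop", None, None, None))
    await asyncio.gather(*workers)
    return result
```

Running `asyncio.run(demo())` sends a message to the shard owning the key and awaits the reply future. In Seastar the shards are pinned to CPU cores, which this single-threaded sketch does not model.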

Rationales Behind

- SSD is so fast that the storage software stack needs to be improved.

- Better async programming. FCP. Lockless. CPU utilization.

- SSD is so fast that the Linux kernel is too slow.

- Bypass it.

- The same ideas are borrowed for NVM too

Image: Traditional NoSQL vs ScyllaDB architecture

References

Design Shifts - DSSD

DSSD - EMC Rack-scale Flash Appliance

- Kernel bypass, directly connect to DSSD appliance

- Similar idea to Seastar

References

- DSSD D5 Data Access Methods

- Software Aspects of the EMC DSSD D5

- DSSD bridges access latency gap with NVMe fabric flash magic

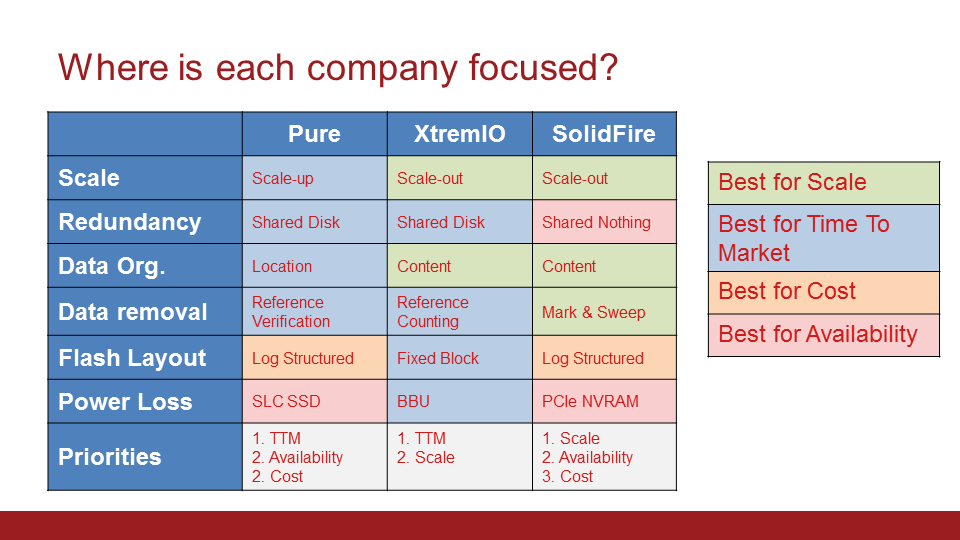

Design Shifts - Content-based Addressing

Content-based Addressing

- Data placement is determined by data content rather than address.

- Leverage SSD random-write ability. Natural support for dedup.

- Adopted in EMC XtremIO and SolidFire
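A minimal sketch of the idea: the fingerprint of a block decides where it lives, so identical blocks land in the same place and are stored once. SHA-256, the modulo placement and the class name are my own choices for illustration, not what XtremIO or SolidFire actually do; reference counting and space reclamation are left out.

```python
import hashlib


class ContentAddressedStore:
    """Place blocks by content fingerprint; identical blocks deduplicate naturally."""

    def __init__(self, num_nodes=4):
        self.nodes = [dict() for _ in range(num_nodes)]  # fingerprint -> data
        self.volume = {}  # logical block address -> fingerprint

    def _node_for(self, fingerprint):
        return int(fingerprint[:8], 16) % len(self.nodes)

    def write(self, lba, data):
        fingerprint = hashlib.sha256(data).hexdigest()
        node = self._node_for(fingerprint)
        self.nodes[node].setdefault(fingerprint, data)  # stored only once
        self.volume[lba] = fingerprint
        return node

    def read(self, lba):
        fingerprint = self.volume[lba]
        return self.nodes[self._node_for(fingerprint)][fingerprint]

    def stored_blocks(self):
        return sum(len(node) for node in self.nodes)
```

Because placement follows the hash, logically adjacent blocks scatter across nodes. That is tolerable on SSD, where random access is cheap, and would be painful on spinning disks.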

Image: SSD storage array tradeoffs by SolidFire

References

- XtremIO Architecture (The debate is famous)

- Comparing Modern All-Flash Architectures - Dave Wright, SolidFire

- Coming Clean: The Lies That Flash Storage Companies Tell with Dave Wright of NetApp/SolidFire

Design Shifts - LSM-tree Write Amplification

WiscKey: Separating Keys from Values in SSD-conscious Storage [2016, 7 refs]

- In LSM-tree, the same data is read and written multiple times throughout its life, because of the compaction process

- Write amplification can be over 50x. Read amplification can be over 300x.

- Many SSD-optimized key-value stores are based on LSM-trees

- WiscKey improvements

- Separates keys from values to minimize I/O amplification. Only keys are in LSM-tree.

- WiscKey write amplification decreases quickly to nearly 1 when the value size reaches 1 KB. WiscKey is faster than both LevelDB and RocksDB in all six YCSB.
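Key-value separation can be sketched with an append-only value log plus a small index. Here a plain dict stands in for the LSM-tree, and `modeled_write_amp` is a deliberately crude model with an assumed LSM amplification factor and pointer size; neither is WiscKey's actual code or measurement method.

```python
class KVSeparatedStore:
    """Values go to an append-only log; the index keeps only key -> (offset, length)."""

    def __init__(self):
        self.vlog = bytearray()  # value log, written once per put
        self.index = {}          # stand-in for the LSM-tree

    def put(self, key, value):
        offset = len(self.vlog)
        self.vlog += value
        self.index[key] = (offset, len(value))

    def get(self, key):
        offset, length = self.index[key]
        return bytes(self.vlog[offset:offset + length])


def modeled_write_amp(key_bytes, value_bytes, lsm_amp, separated, pointer_bytes=12):
    """Rough write amplification if the LSM-tree rewrites each entry lsm_amp times."""
    user = key_bytes + value_bytes
    if separated:
        # The value is written once; only key + pointer go through compaction.
        written = value_bytes + lsm_amp * (key_bytes + pointer_bytes)
    else:
        written = lsm_amp * user
    return written / user
```

With 16-byte keys, 1 KB values and an assumed LSM amplification of 10, the model gives 10.0 without separation and about 1.25 with it, which matches the direction of the paper's claim. Stale values left in the log by overwrites still need their own garbage collection, which this sketch omits.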

Design Shifts - RocksDB

RocksDB is well-known to be an SSD optimized KV store

- It is based on LevelDB and was open-sourced by Facebook. Optimized in many aspects for SSD.

- RocksDB: Key-Value Store Optimized for Flash-Based SSD

- Why is it flash-friendly?

- Space, Read And Write Amplification Trade-offs. Optimize compaction process.

- Low Space Amplification

- High Read QPS: Reduced Mutex Locking

- High Write Throughput: Parallel Compaction; Concurrent Memtable Insert

- Why is it flash-friendly?

- Universal compaction: “It makes write amp much better while increasing read amp and space amp”

- This is RocksDB’s famous feature

Design Shifts - Ceph BlueStore

Ceph BlueStore

- Develop the single purpose filesystem, BlueFS, to manage data directly on raw block devices

- Use RocksDB to store the object metadata and WAL. Better management for SSD.

- Faster software layer to exploit the high performance of SSD. Similar thoughts from Seastar

References

Design Shifts - RAID 2.0, FlashRAID

Many design points to adapt for SSD

- Failure model, write amplification, random writes, RISL (Random Input Stream Layout), zero-fill, trim, partial-stripe writes, …

References

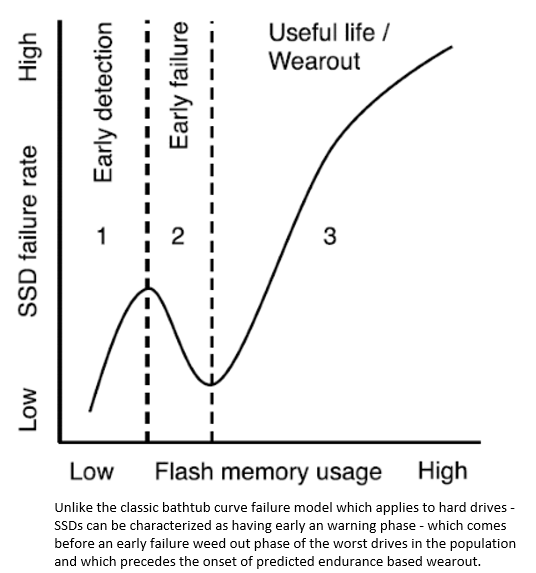

Image from: SSD failure model

Incoming New Generation

Intel 3D XPoint

- Announced in mid-2015 with claims of ten times lower latency compared to flash

- References

NVMe over Fabrics

- A bit like the flash version of SCSI over SAN

- DSSD’s bus is like a customized version of NVMe over Fabrics

- References

NVDIMM

- Attach flash to the memory bus. Even faster.

- References

Paper & Material Summary

References

- Reading Fast16 Papers

- FAST summits: FAST16

- Flash Memory Summit

- Storage Tech Field Day

- Alanwu’s blog

- MSST - Massive Storage Systems and Technology

- OSDI - Google likes to publish new systems here: OSDI16

Hints for Reading Papers

Check the “Best Paper Award” at each conference

- At top-level conferences, they are the best of the best

Search for papers with very high reference counts

- E.g. 1800+ for industry reformer, 900+ for breakthrough tech, 200+ for big improvement tech.

- 10+ refs in the first year indicates a very good paper

Search for papers backed by industry leaders

- E.g. Authored by/with Google, Microsoft, etc

Some papers have extensive background introduction

- It is very good for understanding a new technology

You may even hunt through college curricula. They help build solid understanding.

- E.g. the university that Ceph’s author graduated from
