A Holistic View of Distributed Storage Architecture and Design Space

This article summarizes my experience in software architecture. Architecture design is essentially driven by philosophies, the generator engine that governs all knowledge. From the organization view, we can see why and how the architecture design process and skills are required the way they are. Common methodologies and principles, viewed through these philosophies, provide guidance to carry out architecture design with quality. An architect needs an armory of techniques for different system properties. I categorize reference architectures in each distributed storage area, summarize architecture design patterns from them, and connect them into technology design spaces.

Table of Contents

- Software architecture - A philosophy perspective

- Why need software architecture

- Different architecture organization styles

- Key processes in software architecture

- Key methodologies in software architecture

- Common architecture styles

- General architecture principles

- Technology design spaces - Overview

- Technology design spaces - Breakdown

- Conclusion

Software architecture - A philosophy perspective

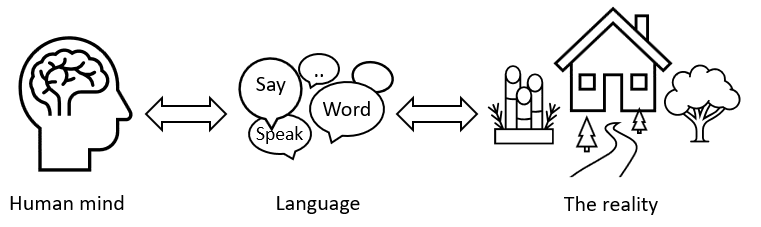

Software architecture is a modeling of the real world, a language, and a creation of the human mind to assist the human mind. Language is an interesting topic. The three are deeply interconnected, pointing to why, what, and how to handle software architecture.

The following chapters cover the knowledge in software architecture. This first chapter is about the engine that generates the knowledge.

Reality, language, and human mind

Firstly, the modeling of the world is human language. Human language has evolved for thousands of years, enriched by distinctive cultures, polished by daily interaction among the population, and tested by full industrial usage and creation. Grab a dictionary, and you learn the world and mankind.

Next, the modeling tool is also a model of the modeler itself, i.e. human language is also the modeling of the human mind. Thinking is carried and organized by language. Language is structured in the way the human mind is capable of perceiving the world, rather than how the world itself necessarily has to be. E.g. software is designed with high cohesion and low coupling, which is also the principle of how words are created in language. These principles reduce software complexity, and they do so because humans think this way.

We can say human language, mind, and the perceivable reality are isomorphic (of the same structure). The expedition into the outer world is the same as exploring deep into the heart. Losing a culture, a language, is the same as losing a piece of reality. As two sides of a coin, human language is both the greatest blessing by which mankind outperforms other creatures, and the eternal cage that bounds how far the human mind is capable of perceiving.

About software architecture

Software architecture is a language, a modeling of the real world, and a creation of the human mind to assist the human mind. The essence of software architecture is to honestly reflect the outer world, to introspect into the inner mind, and to conceptually light up where it is dark or missing. The answer is already there, embedded in the structure, waiting to be perceived.

The question is not what software architecture itself is, nor to learn what software architecture has, but to understand the landscape of world and mind, where you see the hole that needs “software architecture” to fill. You predict and design what “software architecture” should be, can be, and will be. There can be three thousand parallel worlds, each with a different software architecture book. We pick the one of ours.

Besides, knowledge and experience are themselves good designs. They are essentially a domain language, a reusable piece of world modeling, which also explains why they are useful across daily work and can even substitute for design skills. Knowledge is not for learning, but for observing the art of design tested by human practice.

Side notes: Explaining with examples

For “high cohesion low coupling” in human language, imagine an apple on a desk. People name them “apple” and “desk”, rather than a “half apple + half desk” thing. Like selecting what to wrap into an object in Object-Oriented (OO) design, the naming of “apple” and “desk” practices “high cohesion low coupling”.

To drill deeper, “high cohesion” implies “going together”. The underlying axis is time, during which the apple goes with itself as a whole. The edges of the apple and the desk intersect, but they have different curves, and they can be decoupled (separated if you move them). Another underlying axis is space. Humans sense the apple and the desk with basic elements like shape and color. These sense elements grow on the axes of time and space, to be processed into human language. The processing principles look like those from software design, or rather, software design principles are crafted to suit the human mind.

An imagined creature can have a totally different language system and thinking mind, if it does not rely on visual sight like humans do, or does not even have time and space axes. It may not need “high cohesion low coupling” as a thinking principle either. E.g. it can process information like how organic biology evolves.

For “human language is also a cage”, remember that language is a modeling of reality. Modeling implies that “less important” information is dropped to ease the burden on human cognition. Is it really less important? Words reuse the same concept for events that happened at different times, which avoids duplication. But are they really duplicates? The necessity of language is itself a sign that the human mind is unable to process “full” information. Relying on language, the ability is crippled, limited, caged.

Moreover, the human mind can hardly think without the underlying time and space axes. Human words, at the bottom layer of the abstraction tower, can hardly go without “human-organ-oriented” sense elements. People frequently need daily chats to sync drifts on abstract concepts. Language itself is even becoming a bottleneck in human-to-machine and population-to-population information exchange.

For the “software architecture” hole in the world and mind landscape, you will see more in the rest of the article. Though most associate “software architecture” with technology, it is also determined by organization and process needs. Various “needs” in different domains flow into the gap of “software architecture”, crafted to be processed and expressed in a suitable language for the human mind. Together they evolve into the internal meaning of “software architecture”.

For “predict and design what software architecture should be”, it can be explained as a method of learning. The plain way is to learn what it is: the structure, the composition, covering the knowledge points, and practicing its use. The better way is to first understand the driving factors, landscape, and dynamics behind it. You can see its source and direction, even to the end and final limitation. You can also see the many different alternatives that could have happened, but were eventually not chosen by the real world industry, for reasons that lie underwater. You should be able to define your own methodology, given your local customized needs. You can forget the knowledge and create any on your own.

Why need software architecture

There are various aspects why software architecture is necessary, besides technology. These aspects together define what software architecture should be, and correspondingly the methodology and knowledge landscape developed.

Technology aspects

- Handling the complexity. Software design is separated into architecture level, component level, and class level. Each level is popularized with its own techniques: 4+1 view, design patterns, refactoring. Any challenge can be solved by adding one layer of abstraction.

- Decide key technology stack. Internet companies commonly build services atop opensource stacks across different domains, e.g. database, caching, service mesh. Which stack to use affects the architecture, and the choice is often evaluated against technology goals and organization resources.

- Cost of faults. The cost of correcting a fault at early design is far lower than at full-fledged implementation, especially for architecture level faults that require restructuring component interconnects.

Capturing the big

- Non-functional requirements. Typically, availability, scalability, consistency, performance, security, COGS. More importantly, possible worst cases, how to degrade, critical paths. Also, testability, usability, quality, extensibility, delivery & rollout. They are not explicit customer functional needs, but are usually more important, and touch a wide scope of components to finally implement.

- Capturing the changing and non-changing. Architecture design identifies what changes quickly, and what can be stable. The former is usually localized and encapsulated with abstraction, or outsourced to plugins. The latter is designed with the question “can this architecture run 1/3/5 years without overhaul”, which is usually reflected in components and interconnections.

- Issue, strategy, and decision. Architecture is where to capture key issues in system, technology, and organization. Strategies are developed to cope with them. And an explicit track of design decisions is documented.

-

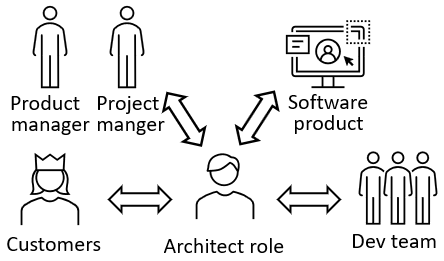

Clarify the fuzziness. At architecture step, not uncommonly the customer requirements are unclear, problems are complex and clouded, future is unstable, and system scope is unknown. The architect role analyzes, defines, designs solution/alternatives, and builds consensus across teams.

- Capture the big. The architect role needs to define what system properties must be kept in tight control throughout the project lifecycle. They map to the project goals of success and key safety criteria. More importantly, the architect role needs to decide what to give up, which may not be as easy as it looks, and reach consensus across teams.
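As a minimal sketch of “capturing the changing and non-changing” above: the stable part can be a small registry plus a function signature, while the fast-changing part is pushed into plugins. The names `Compressor`, `register`, and `store_block` are hypothetical, chosen only for illustration, and compression is just a stand-in for any behavior expected to change.

```python
import zlib
from typing import Callable, Dict

# Stable part: the registry and the signature every plugin must follow.
# All names here are hypothetical, for illustration only.
Compressor = Callable[[bytes], bytes]
_COMPRESSORS: Dict[str, Compressor] = {}


def register(name: str) -> Callable[[Compressor], Compressor]:
    """Decorator that adds a compressor plugin under the given name."""
    def wrap(fn: Compressor) -> Compressor:
        _COMPRESSORS[name] = fn
        return fn
    return wrap


# Changing part: concrete algorithms come and go without touching callers.
@register("none")
def no_compression(data: bytes) -> bytes:
    return data


@register("zlib")
def zlib_compression(data: bytes) -> bytes:
    return zlib.compress(data)


def store_block(data: bytes, codec: str = "none") -> bytes:
    """Stable write path: it only knows the plugin name, not the algorithm."""
    if codec not in _COMPRESSORS:
        raise ValueError(f"unknown codec: {codec}")
    return _COMPRESSORS[codec](data)
```

Adding a new codec then means registering one more function; `store_block` and its callers stay untouched, which is the property the “1/3/5 years without overhaul” question asks about.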

Process & Organization

- Project management. The architecture step is usually where the cost and effort, touching scope, delivery artifacts, and development model, as well as resources, schedule, and quality, can be determined and evaluated. It is also where to work closely with customers to lock down requirements. Project management usually works with the architect role.

- Review and evaluation. The architecture step is usually where the key designs are reviewed; the key benefits, costs, and risks are evaluated; the throughput capacity breakdown is verified; and all user scenarios and system scenarios are ensured to be addressed. This usually involves stakeholders from different backgrounds and engages senior management.

- Cross team collaboration. Architecture touches various external systems and stakeholders. It is when to break barriers and build consensus across teams or BUs. It is when to ensure support and get responses from key stakeholders. It is where to drive collaboration. Unlike technology, which only involves oneself, driving collaboration can be a larger challenge.

- Tracks and lanes. The architect role usually builds the framework, and then the many team members quickly contribute code under the given components. It sets tracks and lanes for where the code can grow and where not, i.e. the basis of intra-team collaboration. Further, the tracks and lanes are visions for the future roadmap, and standards for the team’s daily co-work.
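The throughput capacity breakdown mentioned under “Review and evaluation” can be sketched as a small calculation. The component names, per-request fan-out, per-node capacities, and the 30% headroom below are made-up assumptions for illustration, not measurements from any real system.

```python
import math
from dataclasses import dataclass


@dataclass
class Component:
    name: str
    calls_per_request: float   # calls one user request causes at this component
    capacity_per_node: float   # calls per second a single node can sustain


def nodes_needed(target_rps: float, comp: Component, headroom: float = 0.3) -> int:
    """Nodes required so each node runs at no more than (1 - headroom) load."""
    load = target_rps * comp.calls_per_request
    usable = comp.capacity_per_node * (1.0 - headroom)
    return math.ceil(load / usable)


# Hypothetical pipeline; every number below is an assumption.
pipeline = [
    Component("frontend", calls_per_request=1, capacity_per_node=5000),
    Component("metadata", calls_per_request=2, capacity_per_node=8000),
    Component("storage", calls_per_request=3, capacity_per_node=2000),
]

# Break a 10,000 requests/second target down into per-component node counts.
plan = {c.name: nodes_needed(10_000, c) for c in pipeline}
```

In a real review the inputs would come from production numbers or a prototype, as the evaluation step asks for; the value of the breakdown is that it shows which component dominates the cost.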

Different architecture organization styles

What an architect role does and means in real world industry is somewhat puzzling. From my experience, this is because the architecture step is organized differently at different companies. At some, architect is the next job position of every software developer. At others, I didn’t even see an explicit architect job position.

Architect the tech lead

Usually seen at Internet companies. The architect role is taken by a senior guy in the team, who masters technology stacks and design principles. The architect decides which technology stack to use, and builds the framework for the team members who follow to fill in concrete code. The architect role is in high demand, because Internet companies quickly spin up App after App, each needing its own architect, while the underlying opensource infrastructure is relatively stable. Both the business value and technology stack win traction. The API richness at the upper App level implies more products and components to host new architects, while the infra level generally has simpler APIs and honors vertical depth.

Architecture BU

BU - business unit, i.e. department. Usually seen at Telecom companies. Architects work with architects, software developers work with software developers; they reside in different BUs. The architecture results are handed off in the middle, following a waterfall / CMMI model. The architecture is designed on more stable, even standardized requirements, with very strict verification, and delivers complete documentation. The process is strong, and expect more meetings bouncing across BUs. Employees tend to be separated into a decision making layer and an execution layer, where the latter expects long work, limited growth, and early retirement.

Peer-to-peer architect

Usually seen at teams building dedicated technology. Unlike Internet companies spinning up Apps horizontally atop many different technologies, such a team vertically focuses on one, e.g. to build a database, a cloud storage, an infrastructure component, i.e. 2C (former) vs 2B (latter) culture. There is no dedicated architect job position; the role is shared by everyone. Anyone can start a design proposal (incremental, new component, even new service). The design undergoes a few rounds of review from a group of senior guys, not fixed but selected by relevance and interest. Anyone can contribute to the design, and can freely join to set off with project development. Quite organic. Technology is the key traction here, where new architecture can be invented for it (e.g. new NVM media to storage design).

System analyst

Usually seen at companies selling ERP (Enterprise resource planning), or outsourcing. The systems are heavily involved in customer side domain knowledge. And the domain knowledge is invalidated when selling to another customer from a different domain. Because of the new background each time, comprehensive requirement analysis and architecture procedures are developed. When the domain can be reused, domain experts are valued, as knowledge and experience themselves are good designs. Domain knowledge can win more traction than technology, where the latter leans more toward stability and cost management.

Borrow and improve

Usually seen at follower companies. If not cutting edge into no man’s land, a reference architecture (the top product’s architecture) can usually be found to borrow from, to customize and improve. This also benefits from the wide variety of opensource. Reference architectures, standing on the shoulders of giants, are widely used in software architecture processes, e.g. comparing peer works, which is another example of how knowledge and experience themselves are good designs. Market and technology investigation surveys are skills in high demand.

Key processes in software architecture

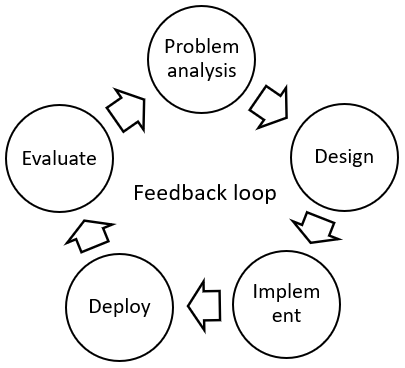

How to successfully drive the software architecture process? It involves collaborating with upstream and downstream, identifying the scope, breaking barriers, and building consensus. Problem analysis and implementation deployment are connected by data driven evaluation, composing the continuous feedback loop that drives future evolution.

Knowledge and skills

As preparation, architecture design requires the knowledge and skills below.

- Downstream, understand your customer. The customer here also includes downstream systems that consume yours. Know the customer to capture key aspects to prioritize in architecture, and more importantly what to de-prioritize (e.g. favor latency over cost? Are consistency and HA really needed?). It helps identify the risks (e.g. festival burst usage, backup traffic pattern). Besides, a well-defined customer space reveals future directions in which the architecture can evolve.

- Upstream, understand what your system is built atop. A web App can be built atop a range of server engines, service mesh, database, caching, monitoring, analytics, etc. Mastering the technology stacks is necessary for designing an architecture that works in the practical world, and for choosing the correct technology stacks that suit project goals and team capabilities.

- Externally, understand the prior art. To design a good system, you need to know your position in the industry. Reference architectures can be discovered and borrowed from. Existing technology and experience should be leveraged. E.g. given the richness of opensource databases, designing a new data storage is largely a selection and cropping of existing techniques. Participating in meetups helps exchange industry status, and ensures your design is not drifting away into a pitfall.

- Internally, understand your existing systems. Understand the existing system to make designs that actually work, and to correctly prioritize what helps a lot and what helps little. Learn from past design history, experience, and pitfalls, to reuse and take the right path.

- Organizationally, broaden your scope. Architecture design involves interacting with multiple external systems and stakeholders. Be sure to broaden your scope and get familiar with them. Communicate with more people. Solid soft skills are needed for cross team / BU collaboration, to break barriers and build consensus, and to convey action-oriented points concisely, with the big picture integrated with detailed analysis.

Carry out the steps

I lean more to the peer-to-peer architect style mentioned above. Much can be sensed from GXSC’s answer. At each step, be sure to talk with different people, which significantly improves design robustness. Rather than the design results, it’s the problem analysis and alternative trade-off analysis that weigh most.

- Firstly, problem analysis. A design proposal starts from innovation. Finding the correct problem to solve is halfway to success. The cost and benefit should be translated to the final market money (Anti-example: we should do it because the technology is remarkable. Good example: we adopt this design because it maps to $$$ annual COGS saving). The problem scope should be complete, e.g. don’t miss out upgrade and rollout scenarios, ripple effects on surrounding systems, or exotic traffic patterns that are rare but do happen in large scale deployment. Risks should be identified; internally from technology stacks, externally from cross teams, market, and organization. The key of management is to smooth out risks, and the same goes for managing the design.

- One important aspect of problem analysis is prioritization. Architecture design, even the final system, cannot address every problem. You must decide what to discard, what to suppress, what to push down to lower level design, what choices to make now and what to defer, what to push into abstraction, what to leave to external systems, what to push off as future optimization; and decide which critical properties you must grasp tightly throughout the project lifetime and monitor end-to-end. I.e. the other key of management is to identify the critical path. Prioritization is usually determined by organization goals, key project benefits and costs, and the art of coordinating across teams.

- Next, find alternatives. To solve one problem, at least two proposals should be developed. Trade-off analysis is carried out to evaluate the Pros and Cons. Usually, Pros still have special cases that make them worse, and Cons still have compensations that make them not so bad. The discussion is carried out across team members, up/downstream teams, and stakeholders, which may in turn discover new alternatives. The process is iterative, and the effort is non-trivial, even multiplied, because it’s developing not one solution but a ripple tree of solutions. Eventually you have explored the full design space and technology space, and reached consensus across the team. Choosing the final alternative can be carried out with team voting, or with a score matrix to compare.

- Review with more people. Firstly, find one or two nearby guys for early review, to build a more solid proposal. Next, find senior and experienced guys to review, to make sure no scenarios are missing, all that can be reused is reused, and the solution is using the best approach. Then, involve key upstream guys, to ensure required features, load level, and hidden constraints are actually supported; and to ensure their own feature rollout won’t impact yours. Involve key downstream guys, to ensure the new system addresses what they actually want. It’s important to involve key stakeholders early; make sure you gain support from the organization, you deliver visibility, and you align with high level prioritization.

- Then comes evaluation of the architecture design. Make sure the problem analysis, every customer scenario and system scenario, and project goals are well addressed. Make sure non-functional requirements are addressed. Make sure the key project benefit and cost are verified in a data driven approach, with actual production numbers as input, using a prototype, simulation tools, or math formulas to model. Make sure the system can support the required load level, by breaking down throughput capacity into each component. Make sure the system handles the worst case and supports graceful throttling and degradation. Make sure the logic is complete; e.g. when you handle a Yes path, you must also address the No path; e.g. when you start a workflow, you must also handle how it ends, goes back, interleaves, and loops. Make sure development and delivery are addressed, e.g. how the infra is to support multi-team development, the branching policy, component start/online/maintenance/retire strategies, CI/CD and rollout safety. Also, make sure hidden assumptions and constraints are explicitly pointed out and addressed.

- Finally, it’s the documentation. In practice, it involves a short “one pager” document (which can actually be < 20 pages), slides for quick presentation, and spreadsheets for data evaluation. Today’s culture leans more toward lightweight documents, central truth in the codebase, and prioritizing agile and peer-to-peer communication. Problem analysis and alternative trade-off analysis usually weigh more in the document than the design itself, where defining the problem space is a key ability. It transforms complex muddy problems into parts that can be broken down, executed, and measured. The architecture design part usually includes key data structures, components, state machines, workflows, interfaces, key scenario walkthroughs, and discussion of several detailed issues. Importantly, the document should track the change history of design decisions, i.e. how they reached today’s state, and more specifically the Issue, Strategy, Design Decision chain.

- Another output of architecture design is interfaces. Interface design does have principles (see later). They are the tracks and lanes where the following development starts. They reveal how components are cut and how interactions happen. They also propagate expectations of your system to external systems, such as how they should co-work, and what should be passed.
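The score matrix mentioned under “find alternatives” can be as simple as a weighted sum per proposal. The sketch below is only an illustration: the criteria, weights, proposal names, and scores are hypothetical placeholders, and in practice they come out of the team’s prioritization and trade-off discussion.

```python
from typing import Dict

# Hypothetical criteria weights (they sum to 1.0); set by project priorities.
WEIGHTS: Dict[str, float] = {
    "cogs_saving": 0.4,
    "delivery_risk": 0.3,   # a higher score means lower risk
    "operability": 0.3,
}

# Hypothetical proposals, scored 1 (worst) to 5 (best) on each criterion.
PROPOSALS: Dict[str, Dict[str, int]] = {
    "A: extend existing service": {"cogs_saving": 2, "delivery_risk": 5, "operability": 4},
    "B: build new component": {"cogs_saving": 5, "delivery_risk": 3, "operability": 3},
}


def weighted_score(scores: Dict[str, int], weights: Dict[str, float]) -> float:
    """Weighted sum of scores; fails loudly if a criterion was left unscored."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"unscored criteria: {sorted(missing)}")
    return sum(weights[c] * scores[c] for c in weights)


# Proposals ordered from highest to lowest weighted score.
ranking = sorted(
    PROPOSALS,
    key=lambda name: weighted_score(PROPOSALS[name], WEIGHTS),
    reverse=True,
)
```

The number itself matters less than the discussion it forces: agreeing on the weights is the prioritization step, and a proposal that wins only under one particular set of weights is a signal to revisit the trade-off analysis.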

Designed to evolve

Architecture is designed to evolve, and prioritized to make it evolve faster. Ele.me Payment System is a good example in a 5 year scope. The competitiveness of software nowadays depends on the velocity at which it evolves, rather than a static function set.

- Simple is beauty. The initial architecture usually only addresses key requirements. What changes and what does not change in a scope of several years are identified and addressed with abstraction. MVP is a viable first deployment, after which it becomes challenging how to “replace wheels on a racing van”.

- Highway is important. Functionalities in software resemble tall buildings in a city, where highways and roads are key to how fast they are built. These architecture aspects are less visible, usually under-prioritized, but are life critical. Inside the system, they can be the debuggability, logging, visibility and monitoring. Do they have defined quality standards? Does monitoring have more 9s when the system is to be reliable? From infrastructure, they can be the tooling, platform, config system, fast rollout, convenience of obtaining data and analytics, scripting. At organization level, they can be the team process and culture to facilitate agile moves. Externally, they can be the ecosystem and plugin extensibility. E.g. Chrome Apps with plugins designed as first-class. E.g. Minecraft published tools to build 3rd-party mods. E.g. Opensource Envoy designs for community engagement from day 1.

- Build the feedback loop. Eventually, after project rollout and deployment, you should be able to collect data and evaluate the actual benefits and costs. New gaps can be found, which in turn facilitate a new round of design and improvement. How to construct such a data driven feedback loop should be taken into consideration in architecture design.
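For the question “does monitoring have more 9s” above, it helps to turn nines into a downtime budget. A small worked calculation, assuming a 365-day year and reading “N nines” as an availability of 1 - 10^(-N):

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600, assuming a 365-day year


def downtime_minutes_per_year(nines: int) -> float:
    """Allowed downtime per year for `nines` nines, e.g. 3 means 99.9%."""
    unavailability = 10.0 ** (-nines)
    return MINUTES_PER_YEAR * unavailability


# Downtime budget in minutes per year for two to five nines.
budget = {n: downtime_minutes_per_year(n) for n in (2, 3, 4, 5)}
```

Under these assumptions three nines allow about 525.6 minutes (roughly 8.8 hours) of downtime per year and four nines about 52.6 minutes, which makes concrete why the monitoring path needs a tighter budget than the system it is supposed to watch.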

Driving the project

The last point is about driving the project. The architect role usually comes with ownership, and is responsible for the progress and final results. Driving applies not only to the architecture step, but also to the entire project execution. Much can be sensed from the 道延架构 article.

- There can be timeline and schedule issues, new technical challenges, new blockers, and more necessary communication with up/downstream; previous assumptions may not hold, circumstances can change, and new risks need to be engaged; there can be many people joining, many needs to coordinate, and many items to follow up.

- Besides the knowledge and communication skills, driving involves long-term perseverance, attention, and care. The ability to find real problems, to prioritize and leverage resources, and to push, as well as experience and project management skills, are valued. To drive also means to motivate team members to join and innovate. The design becomes more robust, complete, and improved with more people helping, and with people looking from different perspectives.

- More, driving is a mindset. You are not the one who asks questions; people ask questions of you, and you are the final barrier to decide whether a problem is solvable or not. The most difficult problems naturally route to you. If solving the problem needs resources, you make the plan and lobby for the support. You make the prioritization, you define, and you eat the dogfood. The team follows you to success (if not otherwise).

Key methodologies in software architecture

Software architecture is a large topic for which I didn’t find a canonical structure. I divide it into process (above), methodologies (this chapter), principles, system properties and design patterns, and technology design spaces. The article is organized accordingly.

- Process. Already covered in the above chapters. It involves how real world organizations carry out architecture design, and conceptually what should be done for it.

- Methodologies. The analysis methods, concept frameworks, and general structures used to carry out architecture design. They also interleave with principles and philosophies. Methodologies change with culture trends, organization styles, and technology paradigms. But throughout the years, there are still valuable points left.

- Principles. Architecture level, component level, and class level each have many principles, common or specific. Essentially they are designed to reduce mind burden, by letting the code space mimic how the human mind is organized.

- System properties and design patterns. Distributed systems have non-functional properties like scaleout, consistency, HA (high availability). Various architectural design patterns are developed to address each. They are the reusable knowledge and domain language. Best practices can be learned from reference architectures, more market players, and historical systems; architecture archaeology systematically surveys the past history of systems.

- Technology design spaces. A technology, e.g. database, can evolve into different architectures after adapting to respective workloads and scenarios, e.g. OLAP vs OLTP, in-memory or on disk. Exploring the choices and architectures, and plotting them on the landscape, reveals the design space. With this global picture in mind, the design space landscape greatly helps in navigating a new round of architecture design.

Managing the complexity

The first and always the biggest topic in architecture design (or software design) is to handle complexity. The essence is to let the code space mimic the human mind, i.e. how human language is organized (if you have read the philosophy chapter). Human language is itself the best model of the complex world, a “design” polished by human history and shared by everyone. Domain knowledge is thus helpful, as it is the language itself. When the code space is close to the language space (or uses a good metaphor), it naturally saves everyone’s mind burden.

Below are conceptual tools to handle complexity.

- Abstraction. Any challenge can be solved by adding one layer of abstraction. The tricky part is you must precisely capture, even predict, what can change and what cannot. It’s non-trivial. E.g. for long years people tried to build an abstract interface across Windows API and Linux API, but today what we have is “write once glitch somewhere”. You still need to examine down the abstraction tower to the bottom, because a coding interface cannot constrain all hidden assumptions and non-functional properties, e.g. throughput, latency, and compatibility. Information in the flow can go missing or become distorted after passing along the abstraction tower, resulting in incorrect implementation.

- Information flow. Typical design captures how code objects flow around the system. But instead, you should capture how information described in human language flows around the system. Language information is symmetric at the sender and receiver components, but the implementation and representation vary (e.g. you pass “apple” across the system, rather than DB records, DAO, bean objects, etc). Dependency is essentially a symmetry, where there may be no code references, but the semantics are linked (e.g. apple has color “red”, which every part of your system must handle correctly). Language information carries the goal, which the code should align to, i.e. the code should align to the human language model. Human language is consistent compared to the code objects passing through the system; the latter become the source of bugs when misalignment happens at different layers of the system. The design principle eventually leads to “programming by contract”, “unbreakable class” (a component should work, with no assumptions about the outside, regardless of what the caller passes in), and semantics analysis; but there is more to learn from.

- High cohesion low coupling. Human concepts, or say words in language, are all constructed following the rule of “high cohesion low coupling”. This is how the human mind works, and by following it, the code design saves mind burden. The topic is related to change and dependency. High cohesion encapsulates changes, which localizes code modification impact. Changes pass along the wire of dependency, which is why low coupling works to reduce undesired propagation. Good encapsulation and delegation require predicting future changes, which is usually not easy; instead of adding unnecessary OO complexity, this leads in the opposite direction to another principle, KISS design.

- Name and responsibility. The most difficult thing in software design is giving names. This is not to say fancy names are hard to find, but that being able to name something means you have already grouped the concept in a high cohesion way (e.g. you can name “apple”, “desk”, but cannot name “half apple + half desk”), which inherently leads to good design. Next, a name defines what a thing is, is not, can do, and cannot do; that’s the responsibility. Saying objects should call by their names is to say objects should call by interfaces and responsibility. Finally, when you can describe the system in fluent human language, i.e. with good names and information flows, you are naturally doing good design. To do it better, you can organize the talk with consistent abstraction levels, rather than jumping around; if so, it means the design abstraction levels are consistent and self-contained too. Remember design is a modeling to human language (if you have read the philosophy chapter).

-

Reuse. If a component is easy to reuse, it naturally follows high cohesion and good naming and responsibility. Design for reuse is recommended, but avoid introducing extra encapsulation and delegation, which results in high OO complexity. Refactoring for reuse is recommended, but refactoring usually requires global picture knowledge, which contradicts the goal that changes should be localized. Reference architecture is another form of reuse that reduces mind complexity. Find the top products and opensource projects to learn from. Find the popular frameworks that teach good designs. The past experience becomes domain knowledge shared by team members, and changing points become more predictable.

-

Separation of concerns. Divide and conquer and decomposition are the popular concepts. Decouple on the boundary of minimal dependency links. Make components orthogonal, each in its own space. Make APIs idempotent along the timeline of calls. To truly separate concerns, methodologies such as encapsulation, knowledge hiding, and minimal assumptions are naturally required. In theory, any complexity can be broken down into handy small pieces, but beware of information flow distorted in between, and of holes in responsibility delegation.

-

Component boundary. Separating components and sub-components eases mind memory usage. The component boundary should be cut at what changes together. If an upstream service change is frequently coupled with a downstream service change, they should have been put into the same component. Violating this is the common way microservices mess up a system. High organizational collaboration cost is another place to cut the component boundary, see Conway’s Law.
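
The rule of cutting boundaries at what changes together can be checked against change history. Below is a minimal sketch, assuming a hypothetical commit log where each commit is the set of components it touched; `co_change_ratio` and the sample data are illustrative names, not an established tool.

```python
from collections import Counter
from itertools import combinations


def co_change_ratio(commits):
    """For each pair of components, return the fraction of commits touching
    either one that touched both (Jaccard similarity of their change sets)."""
    touched = Counter()   # component -> number of commits touching it
    together = Counter()  # (a, b) -> number of commits touching both
    for commit in commits:
        components = sorted(set(commit))
        touched.update(components)
        together.update(combinations(components, 2))
    return {
        (a, b): n / (touched[a] + touched[b] - n)
        for (a, b), n in together.items()
    }


# Hypothetical history: each entry is the set of components changed by one commit.
history = [
    {"order-api", "order-db"},
    {"order-api", "order-db"},
    {"order-api", "order-db", "billing"},
    {"billing"},
    {"billing"},
]
ratios = co_change_ratio(history)
```

Pairs with a ratio close to 1.0 (here `order-api` and `order-db`) almost always change together and are candidates to live in one component; pairs with a low ratio are reasonable places to cut.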

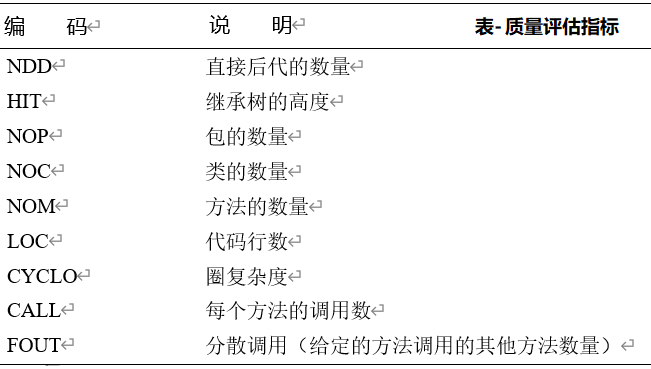

Design complexity can be formulated and evaluated using scores on dependency. I found D Score interesting, and this Measuring software complexity article lists other measures. These methods are less popular, probably because domain knowledge is more effective at handling complexity. Besides the bullets below, another Domain-driven Design - Chapter 1 article has a nice list of code complexity measurements.

-

“D Score” measures software complexity by the number of dependencies. Dependency links inside a component add to cohesion, while links pointing outside add to coupling. The two types of dependency links are combined, with a formula, into the final score.

-

“Halstead Metrics” treats software as operators and operands. The total numbers of operators and operands, their unique counts, and the operand count per operator are combined, with a formula, into the final score.

-

“Cyclomatic Complexity” treats software as a control flow graph. The numbers of edges, nodes, and connected components are combined, with a formula, into the final score.
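
As a concrete instance of such a formula, McCabe's cyclomatic complexity is M = E - N + 2P, where E is the number of edges, N the number of nodes, and P the number of connected components of the control flow graph. The sketch below assumes the graph is given as a list of (source, destination) edges; the function name and the sample graph are illustrative.

```python
def cyclomatic_complexity(edges, num_components=1):
    """McCabe's M = E - N + 2P for a control flow graph given as (src, dst) edges."""
    nodes = {node for edge in edges for node in edge}
    return len(edges) - len(nodes) + 2 * num_components


# Control flow graph of a function with one if/else followed by one loop.
cfg = [
    ("entry", "if"),
    ("if", "then"),
    ("if", "else"),
    ("then", "loop"),
    ("else", "loop"),
    ("loop", "body"),
    ("body", "loop"),
    ("loop", "exit"),
]
complexity = cyclomatic_complexity(cfg)
```

With 8 edges, 7 nodes, and a single connected component, the score is 3, which matches the usual shortcut of "number of decision points plus one" (the `if` and the loop condition).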

Levels of architecture design

Software design is complex. To manage the complexity, I break it into different levels and views. Typical levels are: architecture level, component level, and class level. The abstraction level goes from high to low, scope from big to small, and uncertainty from fuzzy to clear. Each level has its own methodologies. Levels also map to first-and-next steps, which in practice can be simplified or mixed, to lean more towards the real world bottleneck.

-

Architecture level focuses on components and their interconnections. Interconnections are abstracted by ports and connectors. A component or connector can hide great complexity and delay technical decisions to lower levels. A component can be a metadata server, a storage pool, or a distributed cache. A connector can be a queue with throttling QoS, REST services, or an event-driven CQRS. System scope is examined, e.g. input and output flows, how to interact with end users, and the up/down stream systems. The infrastructure and technology stack to build atop can be investigated and determined. Non-functional requirements, user scenarios, and system scenarios are captured and addressed at this level. The typical analysis method is 4+1 View. When people talk about software architecture, they mostly refer to this level. It is also what this article covers.

-

Component level follows the architecture level. It focuses on the design inside the component. The scope should also be defined, e.g. interface, input and output, execution model and resources needed. This level usually involves tens of classes; Design Patterns are the popular methodology, and components should be designed to be reusable. Architecture can be built on existing systems, where technical debt plays a role, e.g. to rewrite all with a better design (high cost), or to reuse by inserting new code (high coupling).

-

Class level next focuses on the more fine-grained level, i.e. how to implement one or several classes well. The definitions are clear and ready for coding. Typical methodologies are Coding Styles, Code Refactoring, Code Complete (bad book name). You can also hear about defensive programming and contract based programming. UML diagrams are vastly useful at this level and at component level, as a descriptive tool and, more importantly, an analysis tool; e.g. use a state machine diagram/table to ensure all possible system conditions are exhausted and taken care of. (Similar methods are also shared in PSP, which is a subset (Combining CMMI/PSP) of CMMI; the real world today leans more to Agile, while CMMI essentially turns developers into screw nails with heavy documentation and tightly monitored statistics).
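
The state machine table mentioned above can double as an automated check that every condition is decided on. The sketch below is a minimal illustration; the states, events, and transition table are hypothetical and describe an append-only file being opened, written, and sealed.

```python
from enum import Enum
from itertools import product


class State(Enum):
    IDLE = "idle"
    WRITING = "writing"
    SEALED = "sealed"


class Event(Enum):
    OPEN = "open"
    APPEND = "append"
    SEAL = "seal"


# Hypothetical transition table; None marks a combination that is explicitly rejected.
TRANSITIONS = {
    (State.IDLE, Event.OPEN): State.WRITING,
    (State.IDLE, Event.APPEND): None,
    (State.IDLE, Event.SEAL): None,
    (State.WRITING, Event.OPEN): None,
    (State.WRITING, Event.APPEND): State.WRITING,
    (State.WRITING, Event.SEAL): State.SEALED,
    (State.SEALED, Event.OPEN): None,
    (State.SEALED, Event.APPEND): None,
    # (State.SEALED, Event.SEAL) is deliberately left out to show the check.
}


def missing_cases(table):
    """Return every (state, event) pair the table does not decide on."""
    return [pair for pair in product(State, Event) if pair not in table]


unhandled = missing_cases(TRANSITIONS)
```

Here `unhandled` contains the single pair (SEALED, SEAL), which is exactly the kind of forgotten corner (is a repeated seal an error or a no-op?) that the table is meant to surface before coding.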

Views of architecture design

Views help understand software design from different perspectives. The methodologies covered below act as the descriptive tools for design output, the analysis tools to verify correctness, the heuristic frameworks for mind flow, and the processes to carry out architecture design.

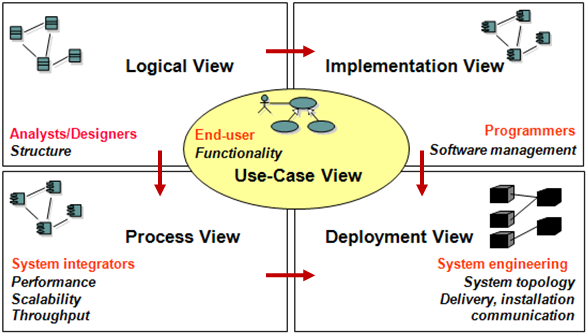

4+1 View is one of the most popular software architecture methods. It captures the static structure, runtime parallelism, physical deployment, and development lifecycle.

-

Logical View: The components and their interconnections. The diagram captures what the system consists of and how it functions, the dependencies and scope, and the responsibility decomposition. It’s the most commonly mentioned “what architecture is”.

-

Process View: Logical View is static, while Process View captures the runtime. Performance and scalability are considered. Examples are how multi-node parallelism and multi-threading are organized, and how control flow and data flow work together along the timeline.

-

Deployment View: E.g. which part of the system runs at datacenter, CDN, cloud, and client device; how the rollout and upgrade should be managed; what binary artifacts to deliver.

-

Implementation View: Managing the code, repo, modules, DLLs, etc. Logical View components are mapped to concrete code objects that developers and project managers can readily work on. It also covers the branching policies and how different versions of the product should be maintained.

-

Usecase View: Last but the most important view. It captures all user scenarios and system scenarios, functional and non-functional requirements. Each of them is walked through across the previous 4 views to verify it is truly addressed.

UML is the generic software modeling tool, but its diagrams can also be understood from the views’ perspective.

-

Structural diagrams capture the static view of the system. From higher abstraction level to lower, there are Component diagram, Class diagram, Object diagram, etc.

-

Behavioral diagrams capture the runtime view of the system. E.g. Activity diagram for the logic workflow, sequence diagram for timeline, Communication diagram for multi-object interaction, and the state diagram everyone likes.

-

Other diagrams. There are the Usecase diagram to capture user scenarios and more fine-grained cases, and the Deployment diagram to capture how the code and artifacts are grouped for code development.

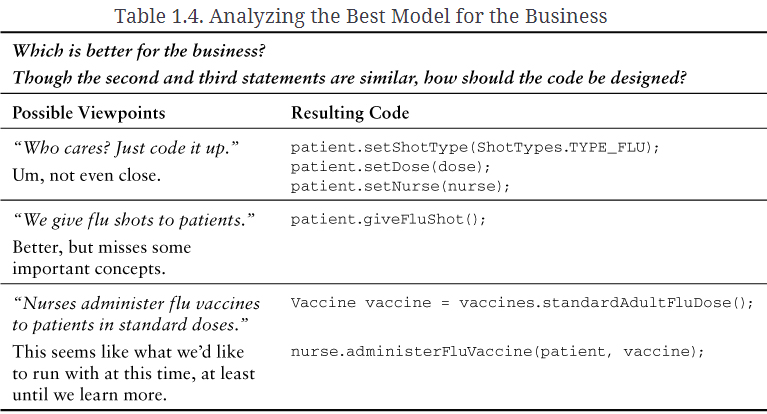

Domain-Driven Design (DDD) views the system from the domain expert perspective. It applies to systems with complex business logic and domain knowledge, e.g. ERP, CRM (Customer relationship management), or Internet companies with rich business. Compared to traditional OO-design, which easily leads to a spider web of objects (“Big Ball of Mud”), DDD introduces “domains” to tidy it up. Besides the key concepts listed below, IDDD flu vaccine is also a nice example.

-

Domain. A big complex system (enterprise scale) is cut into multiple domains (e.g. user account system, forum system, ecommerce system, etc), each with its specific domain knowledge, language wording, and domain experts.

-

Bounded Context. The boundary of the domain is called the bounded context. The same conceptual object can exist in two different domains, but it maps to different classes; e.g. an account in the context of banking is different from an account in book selling. An object can only interact with objects from the same bounded context. And you should not directly operate on getters/setters; instead, use “business workflow calls”. (Similarly in OO design, objects should interact with objects at the same abstraction level.) A domain’s object cannot (directly) go outside of its bounded context. Bounded contexts are orthogonal to each other.

-

Context Map. But how do two Bounded Contexts interact? A domain’s object is mapped to another domain via the Context Map. The Context Map can be as simple as “new an object” and “assign properties”, or as complex as a REST service. An Anti-corruption layer (ACL) can be inserted in the middle for isolation.

-

Drive DDD design by language. Domain knowledge is a language, and knowledge itself is a good design (if you see the philosophy part). However, language is fuzzy by nature, which is why context needs to be introduced to bound it for certainty. Language is fluent when you organize talking at the same abstraction level, which explains why objects should only interact with objects from the same bounded context. DDD is a methodology to operate language into design. It expresses domain knowledge in reusable code, where domain experts are (or are close to) code developers.

-

Company strategic view. DDD is able to model company-wide. An executive needs to strategically decide what is core to business competency, what supports it, and what the common parts are. This introduces Core domains, Supporting domains, and Generic domains. Resources are prioritized among them accordingly. In the long term, the domain knowledge, and the DDD model implemented in running code, accumulate to become valuable company assets. The DDD architecture focuses on lasting domain modeling, where a good design is neutral to the technical architecture being implemented.
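
To make Bounded Context and Context Map concrete, here is a minimal sketch using the banking vs. book selling account example from above. All class and field names are hypothetical; the mapping function plays the simple “new an object, assign properties” flavor of a Context Map and also acts as a tiny anti-corruption layer.

```python
from dataclasses import dataclass


# Bounded context: banking. "Account" means a balance-holding ledger entry.
@dataclass(frozen=True)
class BankAccount:
    iban: str
    owner_name: str
    balance_cents: int


# Bounded context: book selling. "Account" means a customer profile.
@dataclass(frozen=True)
class ShopAccount:
    customer_name: str
    payment_ref: str


def bank_to_shop(account: BankAccount) -> ShopAccount:
    """Context Map acting as an anti-corruption layer: translate the banking
    model into the shop's model and keep banking-only details out."""
    return ShopAccount(
        customer_name=account.owner_name,
        payment_ref=f"iban:{account.iban[-4:]}",  # expose only a masked reference
    )


shop_account = bank_to_shop(BankAccount("DE00123456789", "Alice", 12_500))
```

The shop context never sees `balance_cents` or the full IBAN, so changes inside the banking model stay behind the mapping function.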

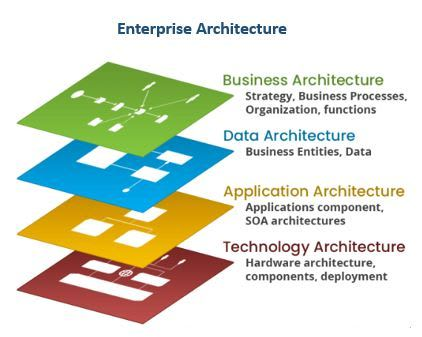

There are more general architecture views, more often used in customer facing and sales scenarios. They provide alternative insights into what an architecture should include.

-

This Enterprise architecture consists of Business architecture, Data architecture, Application architecture, Technology architecture. This is more viewed from enterprise business level and does a coarse decomposition. It’s shown in the below picture.

-

The 四横三纵 (“four horizontals, three verticals”) architecture, described in more detail in this Alibaba 四横三纵 article. The “四横” (four horizontals) are IaaS, DaaS (data as a service), PaaS (platform services) and SaaS. The “三纵” (three verticals) are Standard Definition & Documentation (标准规范体系), Security Enforcing (安全保障体系), Operation Support & Safety (运维保障体系).

Besides this section, I also found valuable experiences from Kenneth Lee’s blogs/Kenneth Lee’s articles, the remarkable On Designing and Deploying Internet-Scale Services; and from eBay’s 三高 (“three highs”) design P1/eBay’s 三高 design P2 articles, Alibaba’s 道延架构 article, or AWS’s 如何软件开发 (“how to do software development”) article.

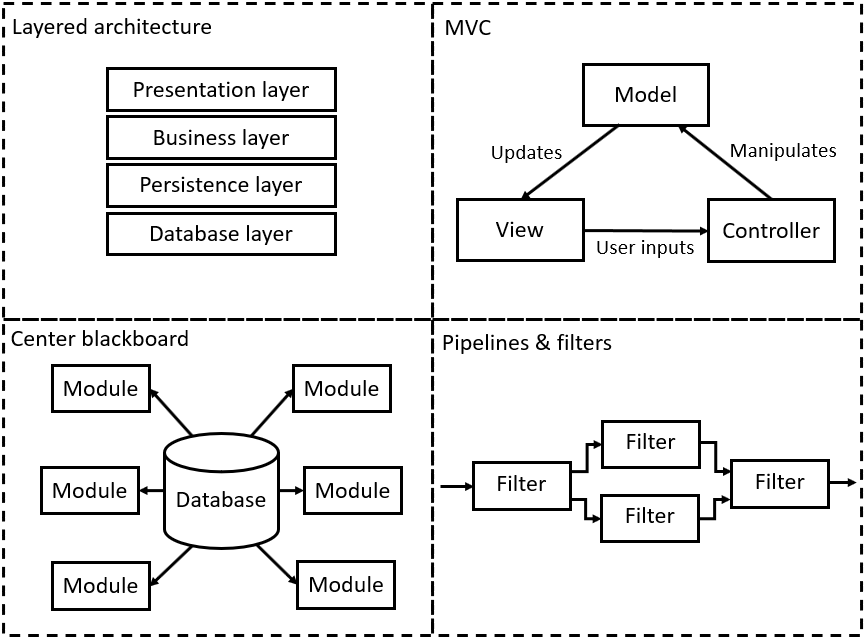

Common architecture styles

This is an old topic: generic design patterns on the scale of architecture. Recent technologies bring more paradigms, but the essence can be traced back. Company-wide, the architecture may eventually evolve to reflect the organization’s communication structure (Conway’s Law), besides the technical aspects.

-

Layered architecture. No architecture today can totally discard it.

-

Repository/blackboard architecture. All components are built around the central database (the “Repository/blackboard”). They use pull model or get pushed by updates.

-

Main program and subroutines. The typical C-lang program architecture: procedure-oriented programming, usually seen in simple tools. The opposite side is object-oriented programming.

-

Dataflow architecture. Still procedure-oriented programming, it can typically be represented by a dataflow diagram. The architecture is useful for data processing, especially chips/FPGA, and image processing. Pipes and filters is another architecture that commonly accompanies it.

-

MVC (Model-view-controller). The fundamental architecture to build UI. It separates data, representation, and business logic. It gets richer variants in richer client UI, e.g. React.

-

Client server. The style where an old-fashioned client device connects to a server. Nowadays it’s usually Web, REST API, or SOA instead. But the architecture is still useful in IoT, as a host agent that reports status to and receives commands from a central server, or as a rich client lib to speed up system interactions.

-

The mediator. Suppose N components connect to M components; instead of N * M connections, a “mediator” component is introduced in the middle to turn them into N + M connections.

-

Event sourcing. The user sends commands, and every system change is driven by an event. The system stores the chain of events as the central truth. Realtime states can be derived from event replay, sped up by checkpoints. The system naturally supports auditing, and is append-only and immutable.

-

Functional programming. This is more an ideal methodology than a concrete architecture. Variables are immutable; system states are instead defined by a chain of function calls, i.e. by a math formula, a bit like event sourcing. Functions are thus first-class citizens.
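
Event sourcing from the list above can be sketched in a few lines. This is a minimal in-memory illustration, assuming a hypothetical account balance as the derived state; `EventStore` and its methods are made-up names, and a real system would persist the log durably.

```python
from dataclasses import dataclass, field


@dataclass
class EventStore:
    """Append-only log of events; current state is derived by replaying it."""
    events: list = field(default_factory=list)
    checkpoint: tuple = (0, 0)  # (number of events folded in, balance at that point)

    def append(self, kind, amount):
        self.events.append((kind, amount))  # past events are never updated or deleted

    def replay(self, upto=None):
        """Fold events into a balance, starting from the checkpoint when possible."""
        upto = len(self.events) if upto is None else upto
        start, balance = self.checkpoint if self.checkpoint[0] <= upto else (0, 0)
        for kind, amount in self.events[start:upto]:
            balance += amount if kind == "deposit" else -amount
        return balance

    def take_checkpoint(self):
        self.checkpoint = (len(self.events), self.replay())


store = EventStore()
store.append("deposit", 100)
store.append("withdraw", 30)
store.take_checkpoint()
store.append("deposit", 5)
```

`store.replay()` only folds the one event after the checkpoint, while `store.replay(upto=1)` answers an audit question ("what was the state after the first event?") by replaying from the beginning.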

More recent architectures

More recent architectures below. You can see architectures vary on: How to cut boundaries, e.g. fine-grain levels, offloading to cloud. Natural structures, e.g. layered, event & streaming, business logic, model-view UI. The gravity of complexity, e.g. complex structures, performance, consistency, managing data, security & auditing, loose communication channels.

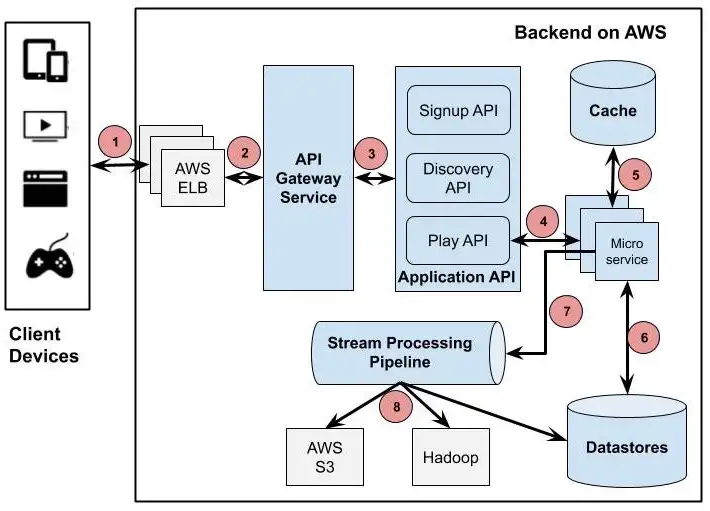

- Microservice. Complex systems are broken into microservices interacting via REST APIs. Typical examples are Kubernetes and Service Mesh. Yet you need an even more complex container infrastructure to run microservices: SDN controller and agents for virtual networking, HA load balancer to distribute traffic, circuit breaker to protect from traffic surge, service registry to manage REST endpoints, Paxos quorum to manage locking and consistent metadata, persistent storage to provide disk volumes and database services, … Below is the Netflix microservice architecture as an example.

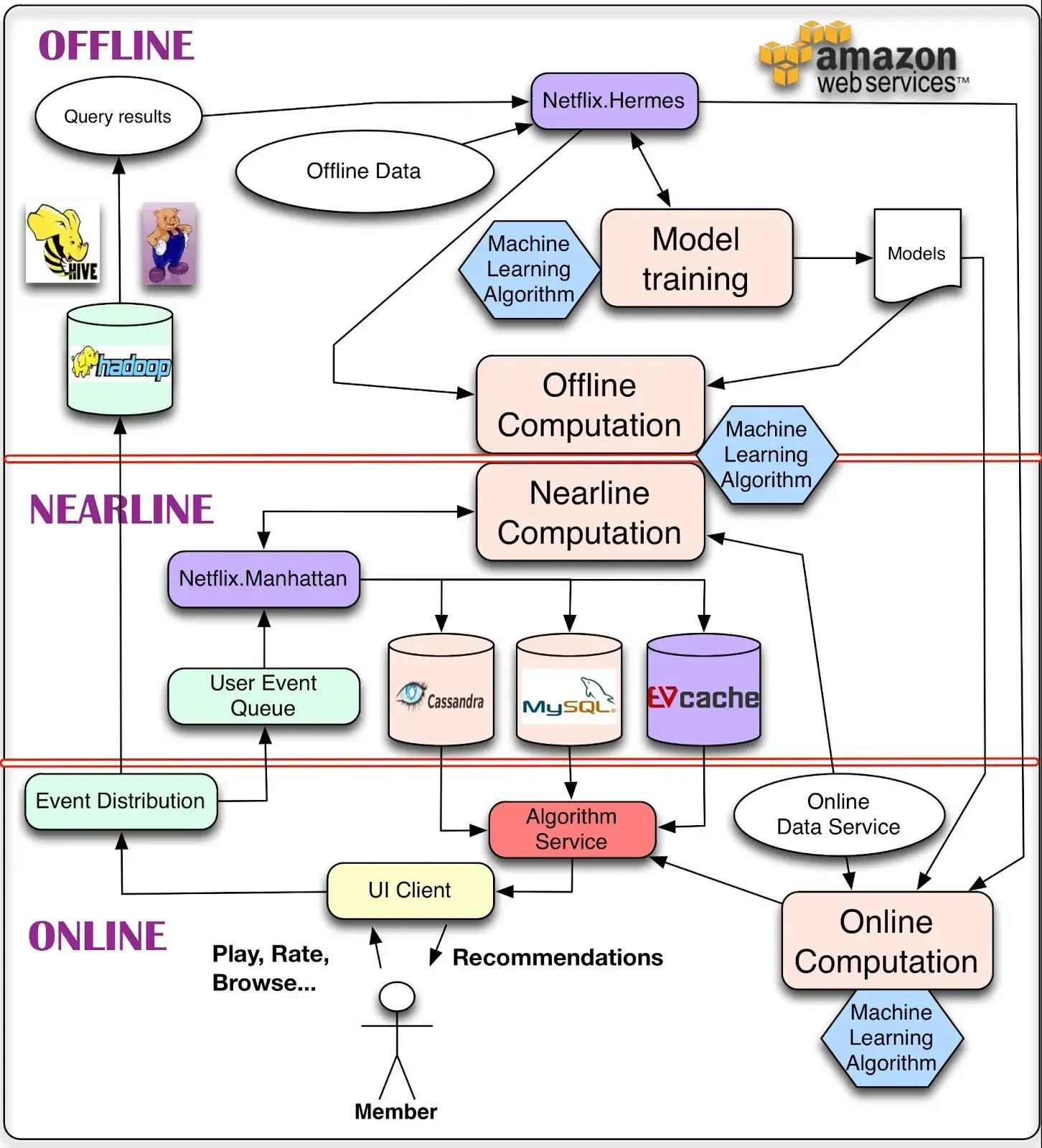
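
Of the infrastructure pieces listed for microservices, the circuit breaker is small enough to sketch. The version below is a simplified illustration under stated assumptions: it counts consecutive failures, rejects calls while open, and lets one trial call through after a timeout. The class name, parameters, and thresholds are hypothetical and not taken from any specific library.

```python
import time


class CircuitBreaker:
    """Open after `max_failures` consecutive failures; retry after `reset_after` seconds."""

    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock  # injectable so the timeout can be tested without sleeping
        self.failures = 0
        self.opened_at = None

    def call(self, func, *args):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise RuntimeError("circuit open: call rejected without reaching the service")
            self.opened_at = None  # half-open: let one trial call through
        try:
            result = func(*args)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()  # (re)open the circuit
            raise
        self.failures = 0  # a success closes the circuit again
        return result
```

Callers wrap each downstream request as `breaker.call(fetch, url)`; while the circuit is open, the failing service is spared further load and callers fail fast instead of piling up on timeouts.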

- Stream processing. Upstream and downstream systems, company-wide, are connected via messaging queues or low latency streaming platforms. Nowadays enterprises are moving from Lambda architecture (realtime approximate streaming and delayed accurate batching are separated) to Kappa architecture (both combined into streaming, with consistent transactions). A more complex system can comprise online, nearline, and offline parts, as in the picture below.

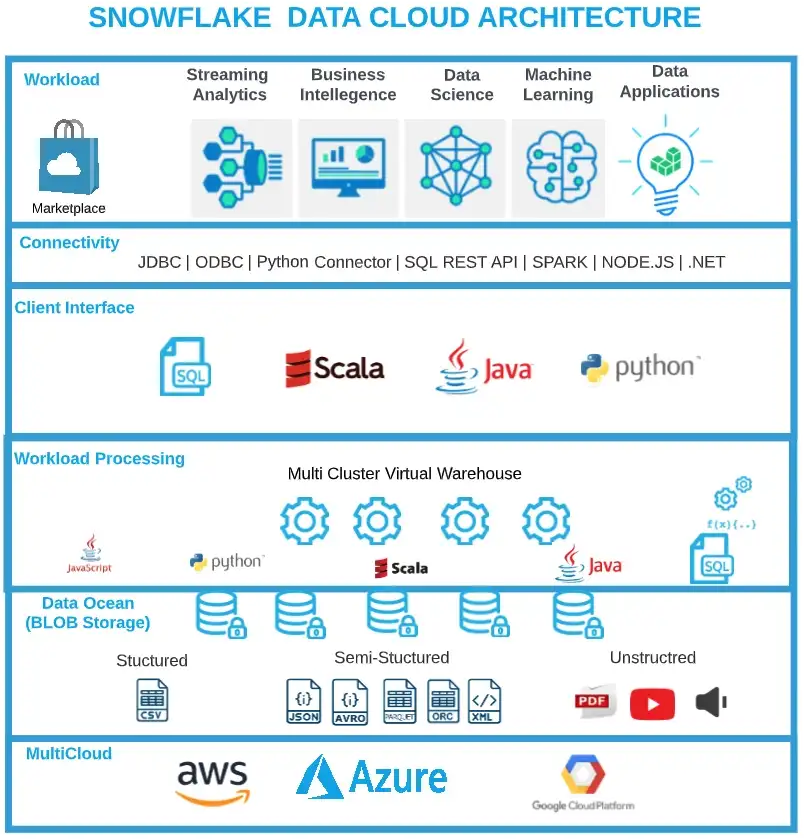

- Cloud native. The system is designed to run exclusively on cloud infrastructure (though it can be hybrid cloud). The typical example is the Snowflake database. Key designs are: 1) Disk file persistence is offloaded to S3. 2) Memory caching, query processing, and storage are disaggregated, and can independently scale out and be elastic to traffic surges. 3) Read path and write path can scale separately, where typical users generate write content at steady throughput and read traffic in spikes. 4) Different tiers of resources, since fully disaggregated, can be billed accurately by how much a customer actually uses. Serverless is another topic, where all the heavy parts like database and programming runtime are shifted to the cloud. Programmers focus on writing functions that deliver business value, lightweight and elastic to traffic. Below is the Snowflake architecture as an example.

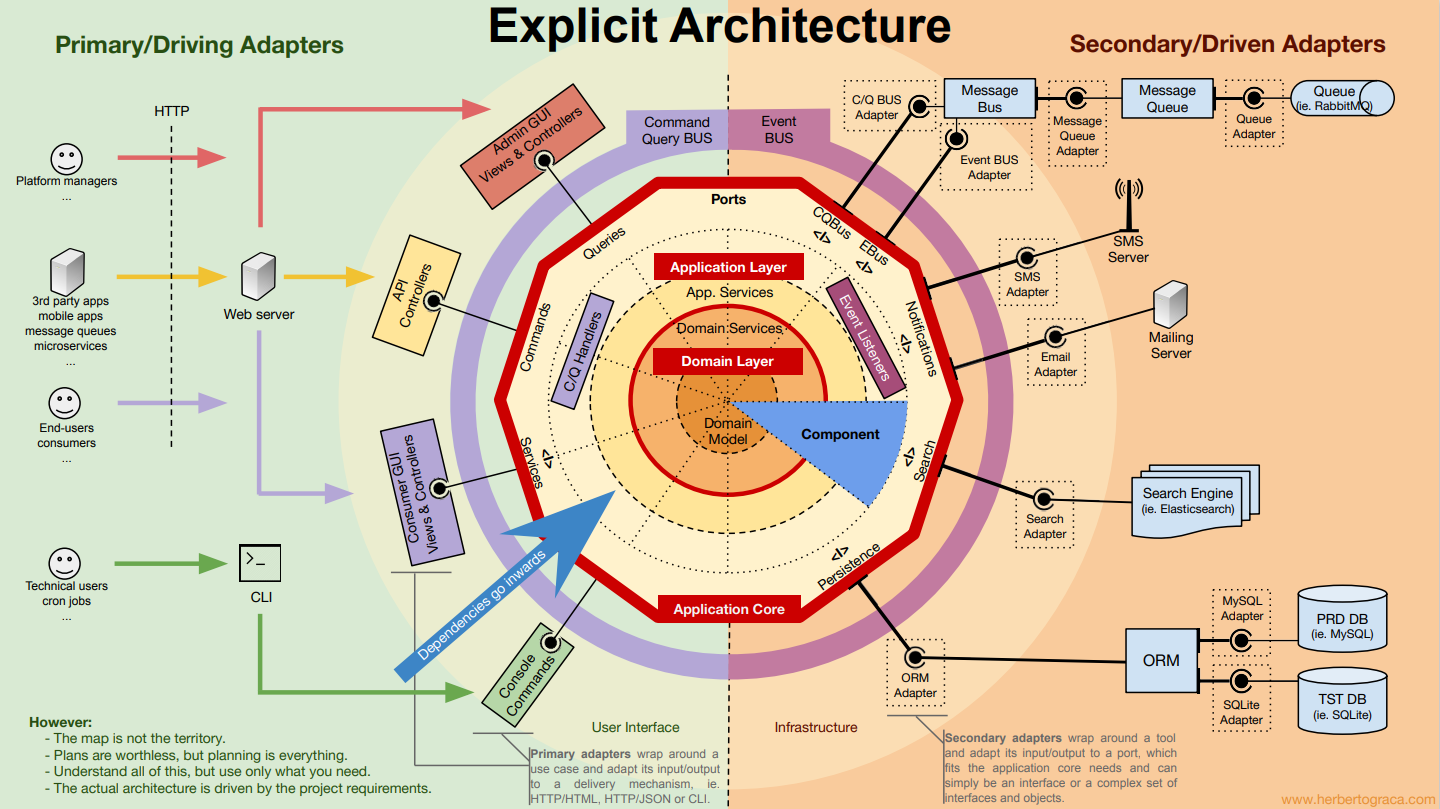

- DDD onion architecture. The onion (or call it hexagon) architecture took shape in the context of DDD. The domain model is the central part. The next layer outside is applications. The outer layer is adapters that connect to external systems. Onion architecture is neutral to the actual technical architecture being implemented. Domain models can also be connected to test cases to easily validate business logic (rather than the verbosity of preparing a testbed with fake data in databases, fake REST interfaces, etc).

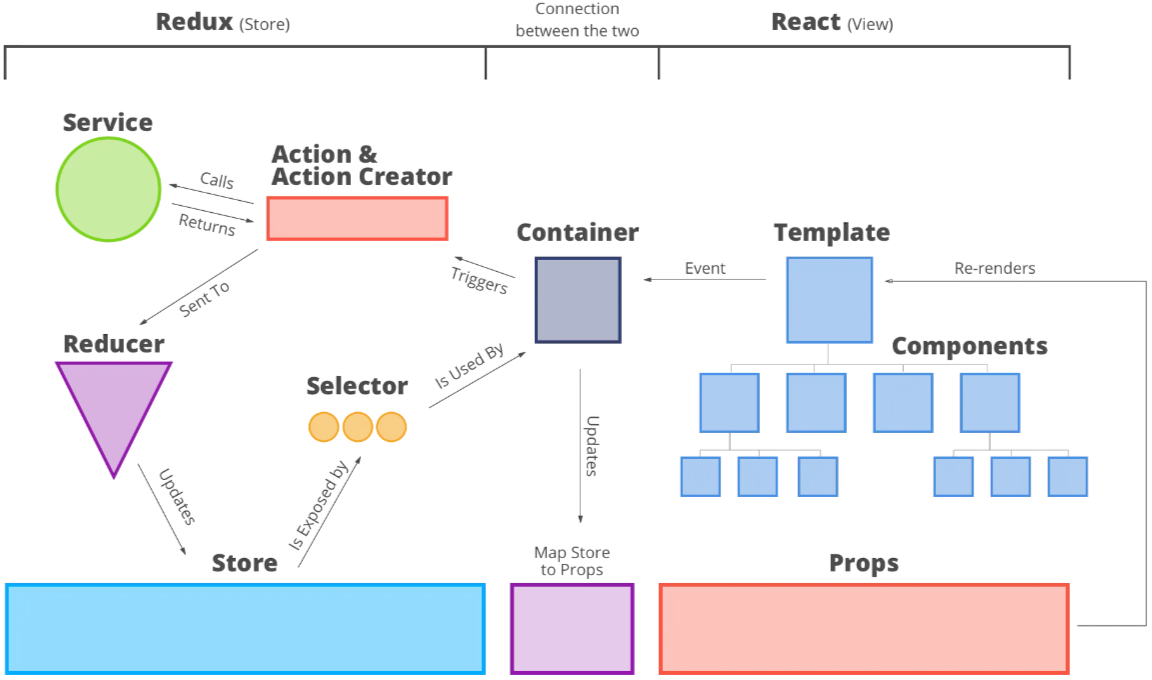
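
The claim that onion architecture lets domain logic be tested without a database testbed can be illustrated with a small sketch. The names (`OrderRepository`, `place_order`, `InMemoryOrderRepository`) are hypothetical; the point is only the direction of dependency, from adapter towards domain.

```python
from typing import Protocol


class OrderRepository(Protocol):
    """Port owned by the domain; adapters in the outer layer implement it."""
    def save(self, order_id: str, total_cents: int) -> None: ...


def place_order(repo: OrderRepository, order_id: str, prices_cents: list) -> int:
    """Domain/application logic: depends only on the port, not on a database."""
    if not prices_cents:
        raise ValueError("an order needs at least one item")
    total = sum(prices_cents)
    repo.save(order_id, total)
    return total


class InMemoryOrderRepository:
    """Test adapter; a production adapter would wrap a real database client."""
    def __init__(self):
        self.rows = {}

    def save(self, order_id, total_cents):
        self.rows[order_id] = total_cents


repo = InMemoryOrderRepository()
total = place_order(repo, "o-1", [1200, 350])
```

Swapping the in-memory adapter for a database-backed one changes nothing in `place_order`, which is what makes the domain layer neutral to the technical architecture.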

- React-Redux. The architecture is a more advanced version of MVC. With data pulled from the server side, Javascript on the client side runs MVC itself. Views are constructed from templates + input properties. User actions generate events, which trigger actions, e.g. calling services. New updates are sent to the reducer, which then maps them to the store. The container uses selectors to fetch states from the store, maps them to properties, and finally renders the new view. The architecture is also frequently accompanied by Electron and NodeJS to develop rich client Apps with web technologies.
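
The one-directional flow of action, reducer, store, selector, and view can be shown without any UI framework. The sketch below mimics the pattern in Python for consistency with the other examples in this article; it is not the Redux API, and all names and the todo data are illustrative.

```python
def reducer(state, action):
    """Pure function: (old state, action) -> new state; never mutates its input."""
    if action["type"] == "todo/added":
        return {**state, "todos": state["todos"] + [action["text"]]}
    if action["type"] == "todo/cleared":
        return {**state, "todos": []}
    return state


class Store:
    def __init__(self, reducer, initial_state):
        self._reducer = reducer
        self._state = initial_state
        self._subscribers = []

    def get_state(self):
        return self._state

    def subscribe(self, callback):
        self._subscribers.append(callback)

    def dispatch(self, action):
        self._state = self._reducer(self._state, action)
        for callback in self._subscribers:
            callback()  # views re-render from the new state


def select_todo_count(state):
    """Selector: derive the props a view needs from the store state."""
    return len(state["todos"])


rendered = []  # stands in for the view layer
store = Store(reducer, {"todos": []})
store.subscribe(lambda: rendered.append(f"{select_todo_count(store.get_state())} todo(s)"))
store.dispatch({"type": "todo/added", "text": "write design doc"})
store.dispatch({"type": "todo/added", "text": "review"})
```

Each dispatch produces a new state object and triggers one render, so the view is always a function of the store, which is the property the architecture is after.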

General architecture principles

Architecture level

Most principles are already reflected in the above sections. At architecture level, the most mentioned principles are the three below (Architecture 3 principles).

-

Keep it simple. There is enough complexity; simple is precious. Related to KISS.

-

Suitable. Enough for the need is better than “industry leading”. An architecture should be suitable: steer it by your concrete requirements and team resources, rather than vainly pursuing new technologies. Be frugal. The benefit of a design should be mapped to financial cost for evaluation.

-

Designed for evolving. Business needs are changing. Traffic scale is increasing. Team members may come and go. Technologies are updating. An architecture should be designed to be evolvable. The architecture process (and development) should be carried out with a growth mindset. An example is Ele.me Payment System, which is quite common for Internet companies.

Component level

More principles come at component level design. CoolShell design principles is a good list of all of them. Below are the ones I think most useful.

-

Keep It Simple, Stupid (KISS), You Ain’t Gonna Need It (YAGNI), Don’t Repeat Yourself (DRY), Principle of Least Knowledge, Separation of Concerns (SoC): That said, make everything simple. If you cannot, divide and conquer.

-

Object-oriented S.O.L.I.D. Single Responsibility Principle (SRP), Open/Closed Principle (OCP), Liskov substitution principle (LSP), Interface Segregation Principle (ISP), Dependency Inversion Principle (DIP). Note that though OO principles try to isolate concerns and make changes local, refactoring and maintaining the system in such a good state nevertheless involve global knowledge of global dependencies.

-

Idempotent. Not only the API; system operations should be idempotent when replayed, or reentrant. A distributed system commonly loses messages and retries. Idempotent examples are: doing sync (rather than update; sync a command to a node, which stays consistent after the node fail-recovers and re-executes); propagating info with eventual consistency and in one direction; re-executing actions without side effects; goal states, commonly used in deployment and config change.

-

Orthogonality. Component behaviors are totally isolated from each other. They don’t assume any hidden behaviors from another. They work, no matter what others output. Not only the code path, also the development process can be orthogonal, with a wise cut of components. Orthogonality greatly saves the mind burden, communication cost, and ripple impact of changes.

-

Hollywood Principle, don’t call us, we’ll call you. A Component doesn’t “new” components. Instead, it is the Container that manages Component creation and initialization. This is inversion of control, or dependency injection. Examples are Spring DI, AspectJ AOP. Dependency should be towards the more stable direction.

-

Convention over Configuration (CoC). Properly set default values save the caller’s effort of always passing in comprehensive configurations. This principle is useful for designing opensource libs, e.g. Rails. However, large scale production services may require explicit and tight control of configuration, and the ability to change it dynamically. Microsoft SDP is an example.

-

Design by Contract (DbC). A component / class should work by its “naming”, i.e. contract, rather than implementation. A caller should call a component by its “naming”, instead of making the effort to look into its internals. The principle maps to objects working with objects at the same abstraction level, and to respecting responsibilities.

-

Acyclic Dependencies Principle (ADP). Try not to create cyclic dependencies among your components. Ideally, yes. In practice, cyclic dependencies still happen, e.g. when multiple sub-systems are brokered by a message queue. Essentially, components need interaction, just like people.
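
The idempotency principle from the list above is easiest to see by comparing an update-style message with a goal-state message under retry. The sketch below assumes a hypothetical node record with a replica count; the `version` field used to detect stale or repeated messages is my own illustrative assumption, not something prescribed by the principle.

```python
def apply_increment(node, delta):
    """Non-idempotent update: replaying the same message changes the result."""
    node["replicas"] += delta


def apply_goal_state(node, goal, version):
    """Idempotent sync: converge to the goal; stale or repeated messages are no-ops."""
    if version <= node["version"]:
        return False  # already applied (retry) or superseded (out-of-order delivery)
    node["replicas"] = goal
    node["version"] = version
    return True


node_a = {"replicas": 3, "version": 0}
apply_increment(node_a, 2)
apply_increment(node_a, 2)  # a retry of the same message double-applies

node_b = {"replicas": 3, "version": 0}
apply_goal_state(node_b, goal=5, version=1)
apply_goal_state(node_b, goal=5, version=1)  # the retry is harmless
```

After the retry, `node_a` has drifted to 7 replicas while `node_b` sits at the intended 5, which is why deployment and config systems tend to ship goal states rather than deltas.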

Class level

Coming to class level or lower component level, the principles can be found in Coding Styles, Code Refactoring, Code Complete; this article won’t cover them. However, it’s interesting to evaluate whether a piece of code is good design, which people frequently argue about for a long time without agreement. In fact, several distinct design philosophies all apply, as can be seen from divergent opensource codebases and programming language designs. To end the arguing, the practical principles are

-

Compare concrete benefits/costs to team and daily work, rather than design philosophies.

-

Build the comparison on concrete real usecases, rather than blindly forecasting the future for design extensibility.

About OO design and Simple & direct

Continued from the above discussion about evaluating whether a piece of code is good design. The key concern should be whether it saves mind burden across the team. There are generally two paradigms: OO design and Simple & direct. They work in different ways.

-

OO design reduces mind burden because good OO design metaphors (e.g. patterns) are a shared language across the team. The principle fails if they are actually not shared, which should be verified. E.g. one person’s natural modeling may not be another person’s. Code that one person feels natural can become another person’s mind burden. One top team member can quickly generate code in her natural OO design, but the new code becomes mind burden for others, and slows them down. The condition is self-reinforcing and makes the “top” member even more “top”. Consistency is desirable, because it extends what is shared.

-

OO design does increase complexity. It introduces more parts from the beginning. More interactions become hidden and dynamic. A change can require refactoring more parts to maintain high cohesion low coupling. Things become worse under performance considerations. Decoupling generally hurts performance; it thus needs to introduce more parts to compensate, e.g. caching. More moving parts are touched, yet a larger scope must be maintained for production safety and correctness. Over-design is the next problem. OO design essentially works by forecasting the future to make changes extensible. However, the forecast is frequently wrong, and the extra code becomes a new burden.

-

Simple & direct. Compared to OO design, which frequently applies to App level programming, “simple and direct” is more used in system level and data plane programming. The interfaces supported in programming languages, which are the core that OO design relies on, are frequently not capable of capturing all the information to pass. Examples are performance aspects (cache line, extra calls, memory management, etc), handling worst cases, safety & security concerns, fragile side effects that touch system data structures (if you are programming an OS), etc.

- Encapsulation. OO favors encapsulation. But encapsulation doesn’t work well for the performance plane. CPU, cache, memory, and disk characteristics are at the lowest level, but they still propagate to every layer of software design. Another anti-encapsulation example is debugging & troubleshooting. We have to tear down the fine details of every layer to locate the bug, where encapsulation adds more barriers. But OO is effective for business logic design, which is complex, volatile, and receives less traction from performance.

-

Thus in the simple & direct paradigm, people frequently need to read over all the code in a workflow and grasp every detail to make sure it works correctly. People also need to read over the code to make sure each corner case is handled, all scenarios are covered, and worst cases and graceful degradation are taken care of. Then, less code means less burden to people; everything simple, direct, and transparent is better. Mind burden is reduced this way. OO design, however, makes it harder, because the subtle aspects to capture are hidden in layers of encapsulation and linked by dynamic binding, and there are yet more code and more components to introduce.

-

What’s the gravity and traction of the project being developed? Apps with rich and varying logic are willing to adopt OO design, while system level and data plane usually have more stable interfaces and feature sets, but more traction towards performance and safety. Sometimes the developer is willing to break every OO rule as long as COGS can be saved. Besides, encapsulation hinders developers from having control over the overall perf numbers and calls.

-

Prioritization. Can the new code go to production? Perf under goal, no. Production safety concerns, no. Bad OO design, OK. Thus, the design should first consider perf and safety, and then OO design. However, OO design naturally prioritizes design first, and pushes off goals like performance and worst case handling to future extension. Besides the priority inversion, extension may turn out hard after the interface is already running in production.

About optimized algorithms and robust design

A discussion similar to “OO design vs Simple & direct” is whether to use the fast, low overhead algorithm or a simple & direct solution.

-

Optimized algorithm usually leverages unique workload characteristics the most. In other words, it carries the most assumptions, and retains the least amount of information. Though it reduces overhead, it tends to specialize the code too early (Premature Optimization), and thus easily breaks when adding a new feature, slightly changing the problem scope, or considering more input or output. Optimized algorithms favor areas where problems change little.

-

Robust design means tolerating quick, volatile feature changes. The simple & direct solution plays nicely here, because it carries few assumptions, and retains the information flow through layers. Without tricks, how you describe the solution in plain human language is how it is implemented in code. Performance optimization is left to hotspots located with diagnostic tools.

-

Trade off. Optimized algorithms and robust design have their fundamental conflicts. As a balanced trade off, usually optimized algorithms localize into smaller and specialized scopes, while robust design expands to the most parts of the system. Though the former attracts people as being “core”, it implies smaller adoptable scope, more likely to be replaced by reuse, and less chance of cross domain intersection.

About analytical skills in designing

Proposing a reasonable design solution and making the right decision require analytical skills. Some can be learned from the consulting field. They help dissect complex problems and navigate through seemingly endless arguments.

Problem to solve

Finding the right problem to solve and defining the problem are non-trivial.

-

Firstly, there should be the base solution to compare with the proposed solution.

-

Secondly, measure the size of problem in a data driven way, e.g. X% data are affected with Y% cost. Match the problem size with the worth of effort to solve it.

-

Thirdly, Pros/Cons should trace back to fundamental pillars like COGS saving, less dev effort, new user features, user SLA improvement, etc. Multi-dimension trade offs are translated to market money for comparison. They are the criteria for decision making.

-

Avoid vague words like something is “better”, “smarter”, “more efficient”, “easier”, “new technology”, “too slow”, “too complex”, etc.

Linear thinking

Smart people like jumping thoughts, but decision making requires linear thinking.

-

Start from a base solution that is simple & direct, and then move step by step to the proposed solution. The key is to identify hidden jumps. Every jump must be justified.

-

Then, ask 1) Why choose to move this step (Problem to solve); 2) What new assumptions are added to support the move; 3) What information in the flow is lost or distorted through the step.

-

Systematically, these questions identify all missing paths. Eventually they compose an MECE (mutually exclusive, collectively exhaustive) analysis tree, to ensure the full spectrum of potential solutions (Design space) is explored. A data driven approach then leads to the best and must-be one.

-

These questions also help identify potential trade offs. Hidden assumptions and distorted information flow are what make adding new feature harder. They also make it easier to introduce bugs.

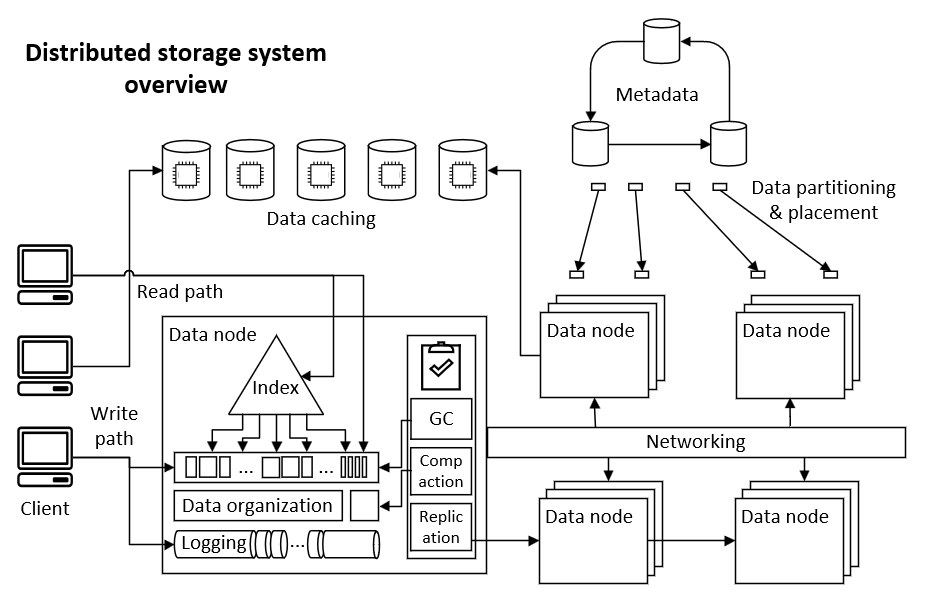

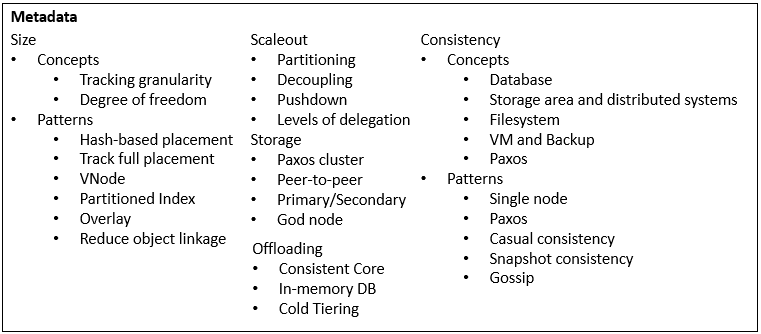

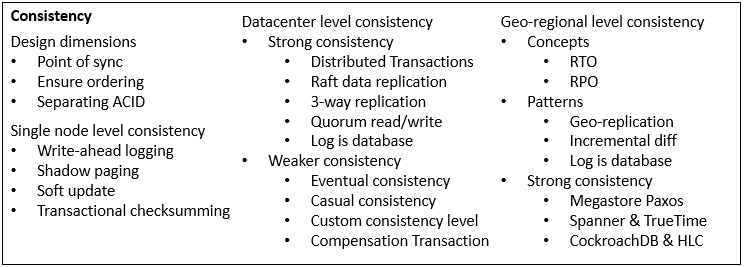

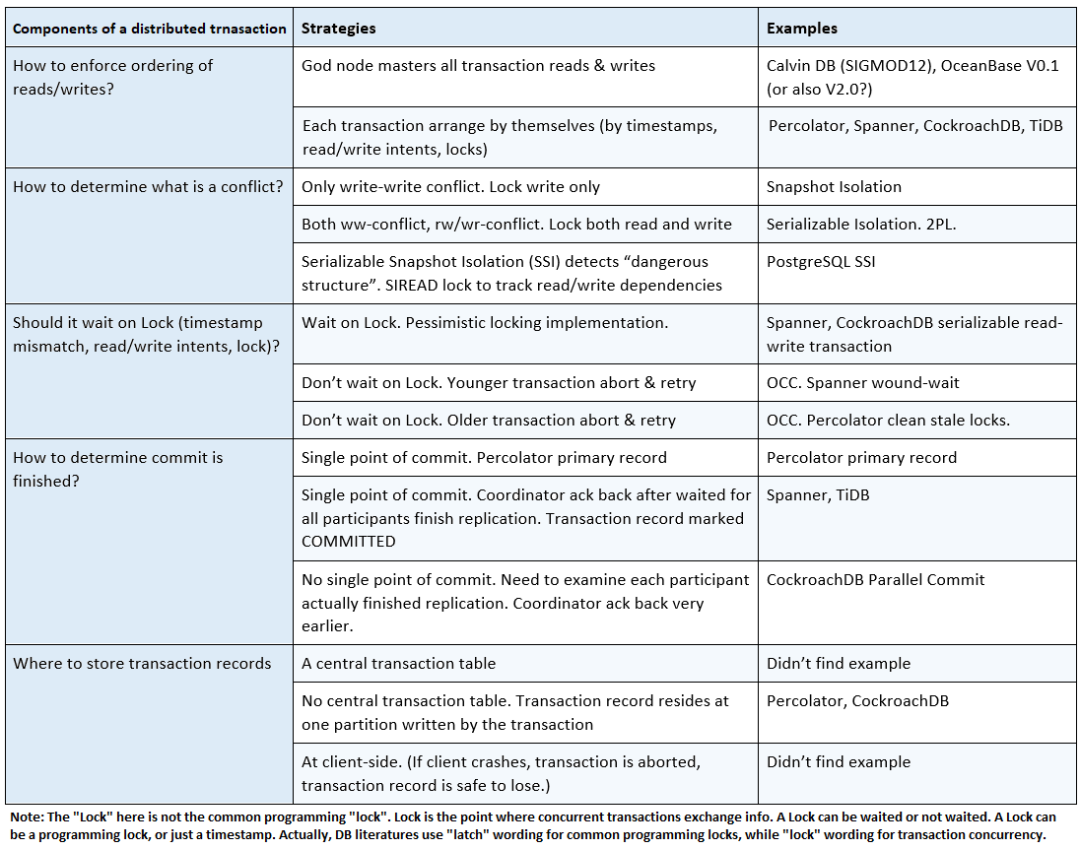

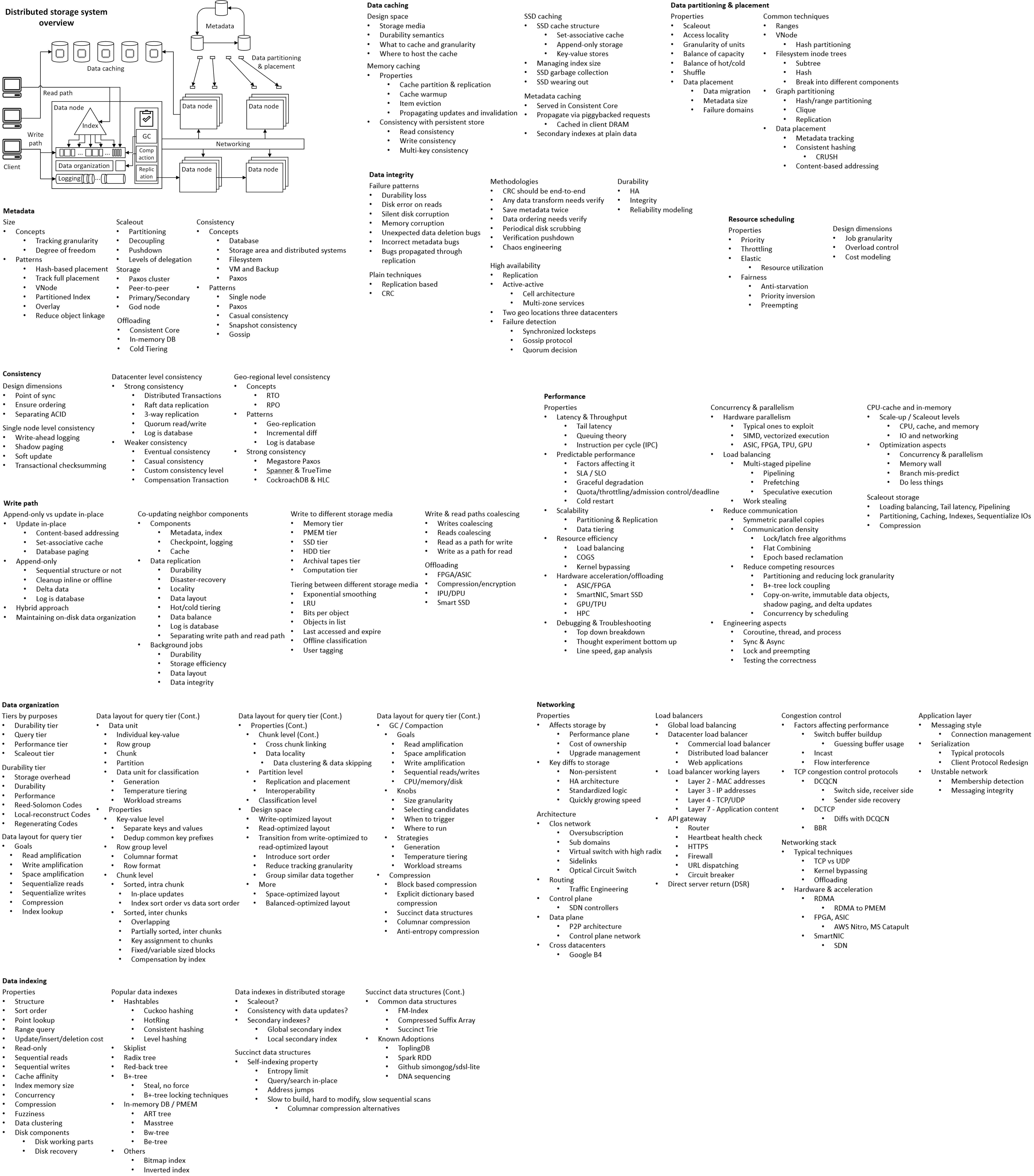

Technology design spaces - Overview

Software architecture has common system properties, e.g. CAP. To achieve them, different techniques are invented and evolve into more general architecture design patterns. Plotting them on the map of various driving factors, they reveal the landscape of technology design space, that we explore and navigate for building new systems. I’ll focus on distributed storage.

Sources to learn from

Articles, books, and courses teach design patterns and outline the design spaces

-

MartinFowler site Patterns of Distributed Systems. With the adoption of cloud, patterns like Consistent Core and Replicated Log are gaining popularity. Besides this article, Service Registry, Sidecar, Circuit Breaker, Shared Nothing are also popular patterns.

-

Cloud Design Patterns from Azure Doc also summarizes common cloud native App design patterns. They are explained in detail, and fill the missing ones from above.

-

Book Designing Data-Intensive Applications shows the challenges, solutions and techniques in distributed systems. They map to design patterns and combine into design space.

-

Courses: CMU 15-721 outlines key components in database design, e.g. MVCC, data compression, query scheduling, join. The breakdown reveals the design space to explore. The attached papers further tour established design patterns in depth. Highly valuable.

-

On Designing and Deploying Internet-Scale Services. The article is comprehensive, in-depth, and covers every aspect of best practices for building Internet scale services. Highly valuable. It reminds me of SteveY’s comments

Recognized opensource and industry systems become the Reference architectures, from which to learn prevalent techniques or design patterns. I listed what I could recall quickly (can be incomplete). Reference architectures can be found by searching top products, comparing vendor alternatives, or from cornerstone papers with high citation counts.

- Due to the lengthy content, I list them in the next Reference architectures section.

Related works sections in papers are useful to compare contemporary works and reveal the design space. For example,

- TiDB paper and Greenplum paper related works show how competing market products support HTAP (Hybrid Transactional and Analytical Processing) from either prior OLTP or OLAP. They also reveal the techniques employed and the Pros/Cons.

Good papers and surveys can enlighten the technology landscape and reveal design space in remarkable depth and breadth

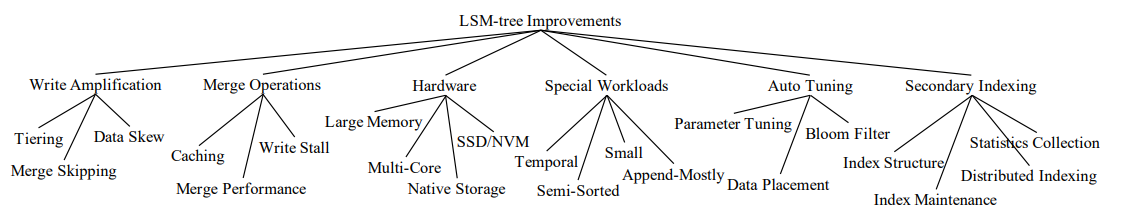

- LSM-based Storage Techniques: A Survey (Zhihu) investigated the full bibliography of techniques used to optimize LSM-trees, and organized them into a very useful taxonomy.

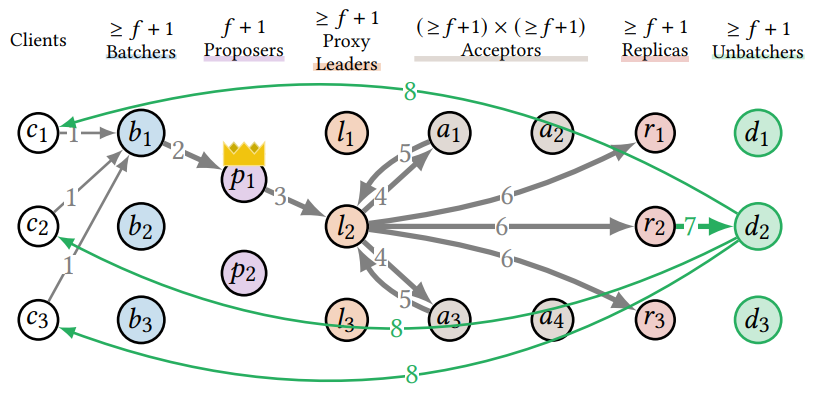

- Scaling Replicated State Machines with Compartmentalization shows a group of techniques to decouple Paxos components and optimize the throughput.

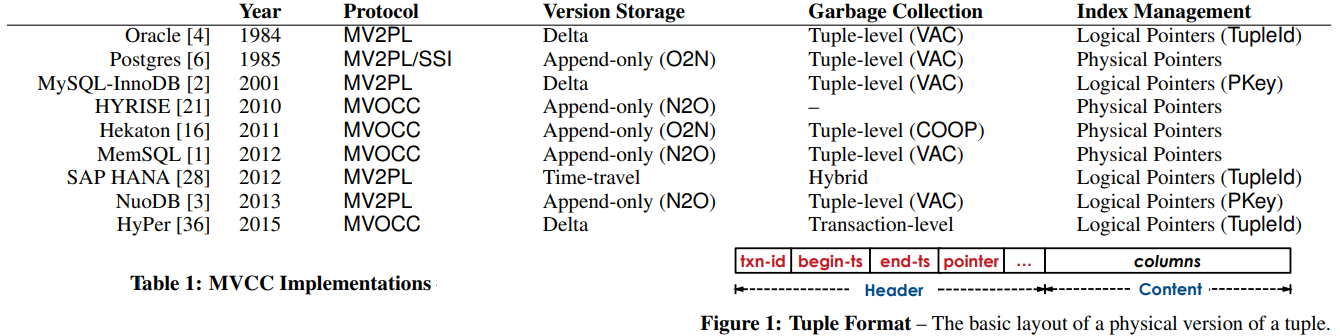

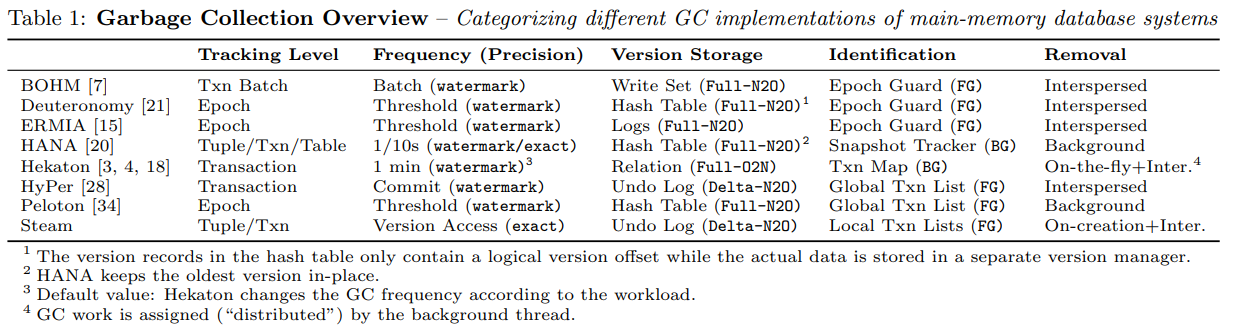

- An Empirical Evaluation of In-Memory Multi-Version Concurrency Control compared how mainstream databases implement MVCC with varieties, extracted the common MVCC components, and discussed the main techniques. It’s also a useful guide to understand MVCC.

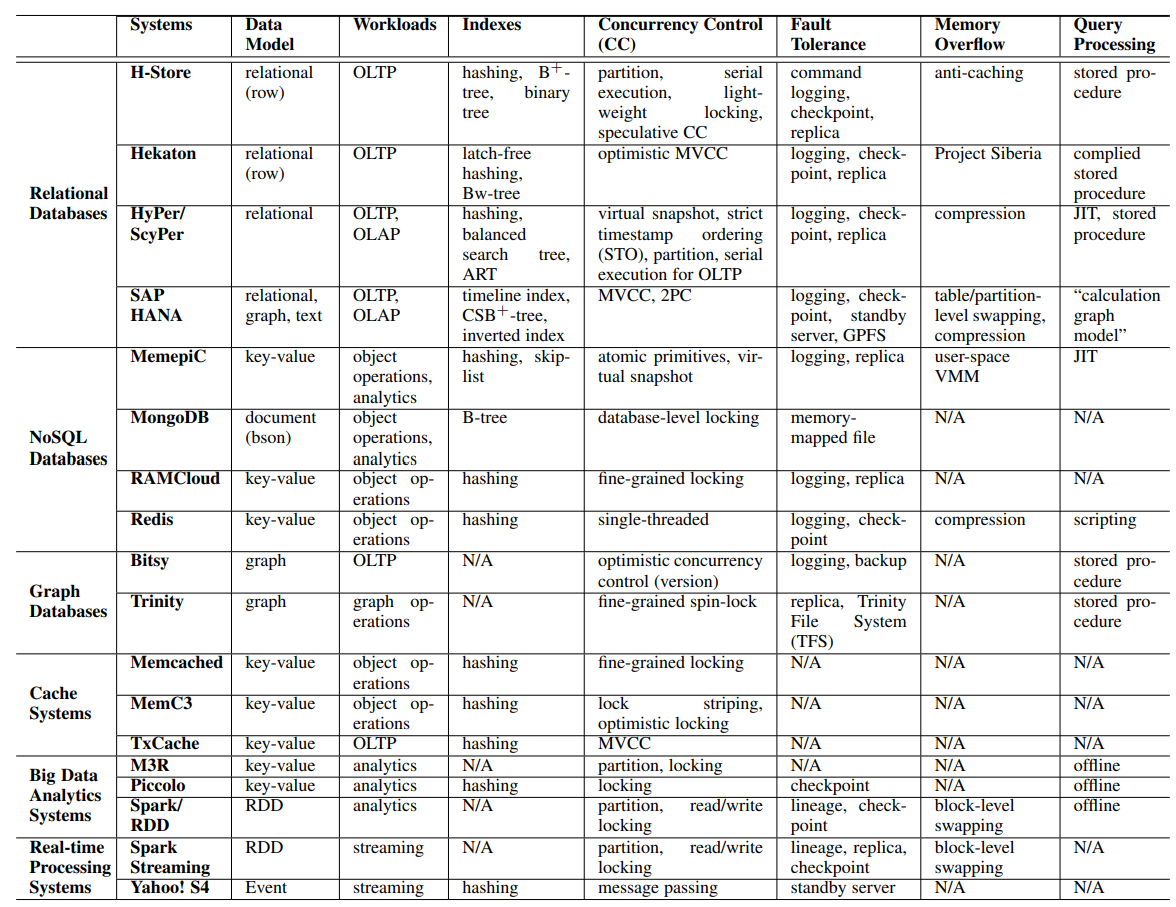

- In-Memory Big Data Management and Processing surveyed how mainstream in-memory databases are designed and compared their key techniques, which form the design space.

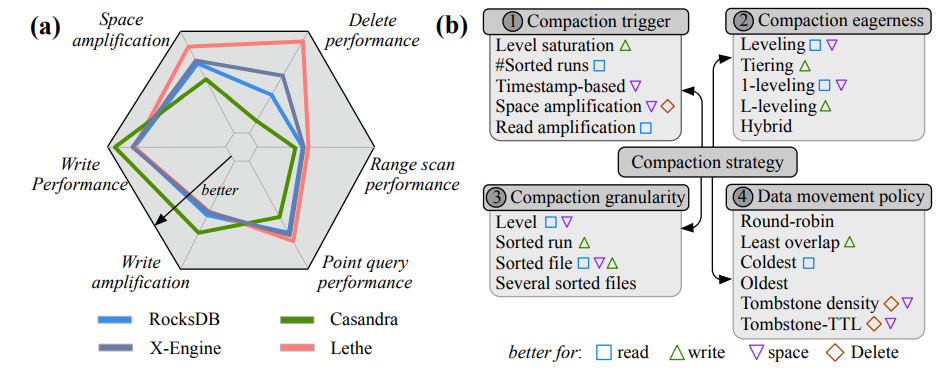

- Constructing and Analyzing the LSM Compaction Design Space compared different compaction strategies in LSM-tree based storage engines. There are more fine-grained tables inside the paper.

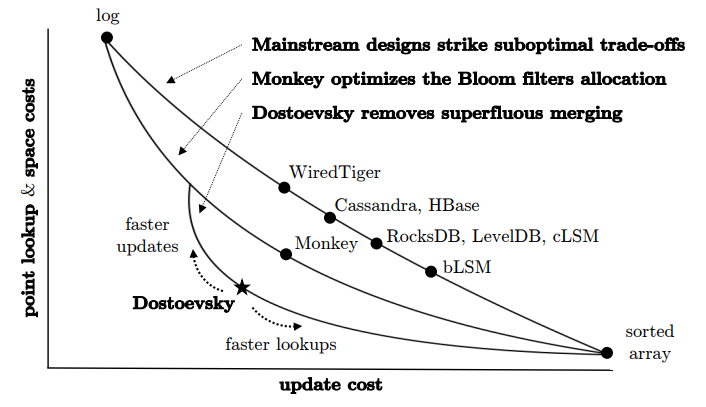

- Another paper, Dostoevsky: Better Space-Time Trade-Offs for LSM-Tree, also plots the design space for space-time trade-offs among updates, point lookups, and range lookups.

- Latch-free Synchronization in Database Systems compared common lock/lock-free techniques, e.g. CAS, TATAS, xchgq, pthread, MCS, against different concurrency levels. It reveals the choice space while implementing effective B+-tree locking techniques.

- Optimal Column Layout for Hybrid Workloads models CRUD, point/range query, random/sequential read/write cost functions on how blocks are partitioned by partition size. It helps find the optimal block physical layout.

- Access Path Selection in Main-Memory Optimized Data Systems models query cost using full scan vs B+-tree at different result selectivity and query sharing concurrency. The cost model shows how a query optimizer chooses physical plans.
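
To make the scan vs B+-tree trade-off in the last item concrete, here is a minimal toy cost model. It is a sketch under stated assumptions, not the model from the paper: the constants `SEQ_COST`, `PRED_COST`, `RAND_COST`, `FANOUT` and all function names are hypothetical and chosen only for illustration. It assumes concurrent queries share one scan pass, while each index lookup pays its own random accesses.

```python
import math

# All constants are illustrative assumptions, not measured values.
SEQ_COST = 1.0    # cost to stream one tuple during a scan
PRED_COST = 0.2   # cost for one query to test one scanned tuple
RAND_COST = 10.0  # cost of one random access through the index
FANOUT = 100      # B+-tree fanout


def scan_cost(n_tuples, n_queries):
    # One shared pass over the data; each query evaluates its predicate per tuple.
    return n_tuples * SEQ_COST + n_queries * n_tuples * PRED_COST


def index_cost(n_tuples, selectivity, n_queries):
    # Each query descends the tree, then fetches its qualifying tuples.
    height = math.log(n_tuples) / math.log(FANOUT)
    per_query = height * RAND_COST + selectivity * n_tuples * RAND_COST
    return n_queries * per_query


def choose_access_path(n_tuples, selectivity, n_queries):
    if index_cost(n_tuples, selectivity, n_queries) < scan_cost(n_tuples, n_queries):
        return "index"
    return "scan"


def break_even_selectivity(n_tuples, n_queries):
    # Selectivity at which the per-query index cost equals the per-query scan cost.
    height = math.log(n_tuples) / math.log(FANOUT)
    per_query_scan = scan_cost(n_tuples, n_queries) / n_queries
    return (per_query_scan - height * RAND_COST) / (n_tuples * RAND_COST)
```

In this toy model the break-even selectivity drops as more queries share the scan, which is the qualitative effect the item describes: the choice depends on both selectivity and concurrency.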

Reference architectures in storage areas

(Continued from the previous Sources to learn from section.)

Cache

- Redis is the opensource de-facto in-memory cache used in most Internet companies. Compared to Memcached, it supports rich data structures. It adds checkpoint and per operation logging for durability. Data can be sharded to a cluster of primary nodes, then replicated to secondary nodes. Tendis further adds cold tiering and other optimizations.

- Kangaroo cache (from a long thread of Facebook work on Scaling Memcached, CacheLib, and RAMP-TAO cache consistency) features an in-memory cache with a cold tier to flash. Big objects and small objects are separated. For small objects, it combines append-only logging and set-associative caching to achieve the optimal DRAM index size vs write amplification. Kangaroo also uses a “partitioned index” to further reduce KLog’s memory index size.

- BCache is a popular SSD block cache used in Ceph. Data is allocated in “extents” (like a filesystem), and then organized into bigger buckets. An extent is the unit of compression. A bucket is sequentially appended until full and is the unit of GC reclaim. Values are indexed by B+-tree (unlike KLog in Kangaroo, which uses hashtables). The B+-tree uses large 256KB nodes. Within a node, modifications are appended in a log-structured way. B+-tree structural change is done by COW and may recursively rewrite every node up to the root. Journaling is not a necessity because of COW, but is used as an optimization to batch and sequentialize small updates.
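
The combination of append-only logging and set-associative caching described for Kangaroo can be sketched as below. This is a toy illustration, not Kangaroo's actual design (it omits admission policies, Bloom filters, and the partitioned index). The class name `LogThenSetCache`, integer keys, the `key % num_sets` mapping, and FIFO eviction are all assumptions made for the example. The point it shows: objects first land in a small log with a DRAM index, and a flush groups them by set so that each set is rewritten once per batch rather than once per object.

```python
class LogThenSetCache:
    """Toy flash cache: a small append-only log in front of set-associative buckets."""

    def __init__(self, num_sets=2, set_capacity=4, log_capacity=4):
        self.num_sets = num_sets
        self.set_capacity = set_capacity
        self.log_capacity = log_capacity
        self.log = []        # append-only (key, value) records
        self.log_index = {}  # key -> latest position in the log (the only DRAM index)
        self.sets = [dict() for _ in range(num_sets)]  # dict order gives FIFO eviction
        self.set_writes = 0  # number of whole-set rewrites, a proxy for flash writes

    def put(self, key, value):
        self.log.append((key, value))
        self.log_index[key] = len(self.log) - 1
        if len(self.log) >= self.log_capacity:
            self.flush()

    def flush(self):
        # Group the latest version of every logged object by its target set.
        by_set = {}
        for key, pos in self.log_index.items():
            by_set.setdefault(key % self.num_sets, []).append((key, self.log[pos][1]))
        # Rewrite each touched set once, no matter how many objects map to it.
        for set_id, items in by_set.items():
            bucket = self.sets[set_id]
            for key, value in items:
                bucket.pop(key, None)
                bucket[key] = value
            while len(bucket) > self.set_capacity:
                del bucket[next(iter(bucket))]  # evict the oldest entry
            self.set_writes += 1
        self.log.clear()
        self.log_index.clear()

    def get(self, key):
        pos = self.log_index.get(key)
        if pos is not None:
            return self.log[pos][1]
        return self.sets[key % self.num_sets].get(key)
```

Lookups in the sets need no per-object DRAM index because the set is derived from the key, which is where the index-size saving comes from in this sketch.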

(Distributed) Filesystem

- BtrFS is a Linux single node filesystem. It indexes inodes with a B-tree, updates with copy-on-write (COW), and ensures atomicity with shadow paging. Other contemporaries include XFS, which also indexes by B-tree but updates with overwrite; and EXT4, the default Linux filesystem, in which a directory inode is a tree index to file inodes, and which employs write-ahead journaling (WAL) to ensure update (overwrite) atomicity.

- CephFS introduces MDS to serve filesystem metadata, i.e. directories, inodes, caches; while persistence is backed by an object storage data pool and metadata pool. It features dynamic subtree partitioning and Mantle load balancing. Cross-partition transactions are done by MDS journaling. MDS acquires locks before update.

- HopsFS builds a distributed filesystem on HDFS. The Namenode becomes a stateless quorum, with metadata offloaded to a separate in-memory NewSQL database. Inodes are organized into entity-relation tables, and partitioned to reduce the servers touched by an operation. Cross-partition transactions, e.g. rename, rmdir, are backed by the NewSQL database, with hierarchical locking. Subtree operations are optimized to run in parallel.

- HDFS is the distributed filesystem for big data. It relaxes the POSIX protocol, favors large files, and runs a primary/backup Namenode to serialize transactions. HDFS was initially the opensource version of Google Filesystem (which started the cloud age with Big Table, Chubby), then became so successful that it is now the de-facto shared protocol for big data filesystems, databases (e.g. HBase), SQL (e.g. Hive), stream processing (e.g. Spark), and datalakes (e.g. Hudi), for both opensource and commercial (e.g. Isilon) products.
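
The copy-on-write update with shadow paging mentioned for BtrFS can be illustrated with a path-copying tree. This is a simplified sketch: it uses a binary search tree instead of a B-tree, keeps everything in memory, and the names `Node`, `cow_insert`, and `lookup` are hypothetical. The idea it shows is that an update copies only the nodes on the path to the root, the old root stays a valid snapshot, and committing is a single switch to the new root.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Node:
    key: int
    value: str
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def cow_insert(root, key, value):
    """Return a new root. Nodes on the search path are copied, all others are shared."""
    if root is None:
        return Node(key, value)
    if key < root.key:
        return Node(root.key, root.value, cow_insert(root.left, key, value), root.right)
    if key > root.key:
        return Node(root.key, root.value, root.left, cow_insert(root.right, key, value))
    return Node(key, value, root.left, root.right)  # same key: replace the value


def lookup(root, key):
    while root is not None:
        if key == root.key:
            return root.value
        root = root.left if key < root.key else root.right
    return None
```

A writer would build the new version off to the side and then publish it by replacing the root reference, so readers see either the old tree or the new one, never a half-applied update.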

Object/Block Storage

- Ceph for distributed block storage and object storage (and CephFS for distributed filesystem). Ceph made opensource scaleout storage possible, and dominated (Ubuntu Openstack storage survey) in the OpenStack ecosystem. It features the CRUSH map, which saves metadata through hash-based placement. It converges all object/block/file serving in one system. Node metadata is managed by a Paxos quorum (Consistent Core) to achieve all CAP. Ceph stripes objects and updates in place, which in turn introduced single node transactions. Ceph later built BlueStore, a customized filesystem (Ceph 10 year lessons) that is optimized for SSD and solved the double-write problem. The double-write issue is solved by separating metadata (delegated to RocksDB) from key/value data (like Wisckey); big writes become append-only, and small overwrites are merged into the WAL (write-ahead log).

- Azure Storage for industry level public cloud storage infrastructure. It is built on the Stream layer, which is a distributed append-only filesystem; and uses the Table layer, which implements a scaleout table schema, to support VM disk pages, object storage, and message queues. Append-only simplifies update management but makes Garbage Collection (GC) more challenging. The contemporary AWS S3 instead seems to follow Dynamo, which updates in place and shards by consistent hashing. For converging object/block/file storage, Nutanix shares a similar thought, running storage and VMs on one node (unlike remotely attached SAN/NAS).

- Tectonic is similar to Azure Storage. It hash partitions metadata to scale out. It employs Copyset Placement. It consolidates Facebook Haystack/F4 (object storage) and Data Warehouse, and introduces extensive multitenancy and resource throttling. Another feature of Tectonic is to decouple common background jobs, e.g. data repair, migration, GC, node health, from the metadata store into background services. TiDB would share a similar thought if it moved the Placement Driver out of the metadata server.

- XtremIO builds a full-flash block storage array with innovative content-based addressing. Data placement is decided by content hash, thus deduplication is naturally supported. Though accesses are randomized, they run on flash. A write is acked after two copies are in memory. Other contemporaries include SolidFire, which is also scaleout; and Pure Storage, which is scale-up and uses dual controllers sharing disks.
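
The content-based addressing idea in the XtremIO item, where placement is decided by content hash and deduplication falls out naturally, can be sketched as follows. This is an illustrative toy, not XtremIO's implementation: the class `ContentAddressedStore`, the use of SHA-1 as the fingerprint, the modulo node mapping, and the reference counting scheme are all assumptions for the example.

```python
import hashlib


class ContentAddressedStore:
    """Toy block store where the content fingerprint decides the owning node."""

    def __init__(self, num_nodes=4):
        self.nodes = [dict() for _ in range(num_nodes)]  # per node: fingerprint -> block
        self.lba_map = {}    # logical block address -> fingerprint
        self.refcount = {}   # fingerprint -> number of addresses pointing at it

    def _node_for(self, fingerprint):
        # Placement depends only on content, so identical blocks meet on one node.
        return self.nodes[int(fingerprint, 16) % len(self.nodes)]

    def write(self, lba, block):
        fingerprint = hashlib.sha1(block).hexdigest()
        old = self.lba_map.get(lba)
        if old == fingerprint:
            return fingerprint  # same content rewritten, nothing to do
        node = self._node_for(fingerprint)
        if fingerprint not in node:
            node[fingerprint] = block  # first copy of this content
        self.refcount[fingerprint] = self.refcount.get(fingerprint, 0) + 1
        self.lba_map[lba] = fingerprint
        if old is not None:
            self._release(old)
        return fingerprint

    def _release(self, fingerprint):
        self.refcount[fingerprint] -= 1
        if self.refcount[fingerprint] == 0:
            del self.refcount[fingerprint]
            del self._node_for(fingerprint)[fingerprint]

    def read(self, lba):
        fingerprint = self.lba_map[lba]
        return self._node_for(fingerprint)[fingerprint]

    def stored_blocks(self):
        return sum(len(node) for node in self.nodes)
```

Because the hash spreads blocks uniformly, sequential logical addresses land on unrelated nodes, which is the randomized access pattern the item notes is acceptable on flash.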

Data deduplication

- Data Domain builds one of the most famous data deduplication appliances. It recognizes middle-file inserts by rolling hash variable-length chunking. Fingerprint caching is made efficient via Locality Preserved Caching, which works perfectly with backup workloads.

- Ceph dedup builds a scalable dedup engine on Ceph. Ceph stores deduplicated chunks, keyed by hash fingerprint. A new metadata pool is introduced to look up the object id to chunk map. The dedup process is offline with throttling. The two level indirection pattern can also be used to implement merging small files into large chunks.
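
The rolling hash variable-length chunking and the fingerprint-keyed chunk store described above can be sketched together. This is a minimal illustration under assumptions, not the algorithm of Data Domain or Ceph: the polynomial hash, the constants `WINDOW`, `BASE`, `MASK`, the absence of minimum/maximum chunk sizes, and the function names are all hypothetical. Chunk boundaries depend only on the last `WINDOW` bytes, so an insert in the middle of a file disturbs only the chunks around it.

```python
import hashlib

WINDOW = 16                      # bytes covered by the rolling hash (assumed)
BASE = 257                       # odd polynomial base (assumed)
MOD = 1 << 32
MASK = 0x3F                      # cut when the low 6 bits are all ones (~64-byte chunks)
OUT_FACTOR = pow(BASE, WINDOW, MOD)


def chunk(data):
    """Split data at content-defined boundaries found by a rolling hash."""
    chunks, start, h = [], 0, 0
    for i, byte in enumerate(data):
        h = (h * BASE + byte) % MOD                         # slide the new byte in
        if i >= WINDOW:
            h = (h - data[i - WINDOW] * OUT_FACTOR) % MOD   # slide the oldest byte out
        if (h & MASK) == MASK:
            chunks.append(data[start:i + 1])
            start = i + 1
    if start < len(data):
        chunks.append(data[start:])                         # trailing partial chunk
    return chunks


def dedup_store(store, data):
    """Store unique chunks keyed by fingerprint; return the recipe to rebuild data."""
    recipe = []
    for piece in chunk(data):
        fingerprint = hashlib.sha256(piece).hexdigest()
        store.setdefault(fingerprint, piece)
        recipe.append(fingerprint)
    return recipe


def restore(store, recipe):
    return b"".join(store[fingerprint] for fingerprint in recipe)
```

The recipe plus the chunk store is the two level indirection mentioned in the Ceph dedup item: an object maps to a list of fingerprints, and each fingerprint maps to one stored chunk.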

Archival storage

- Pelican is the rack-scale archival storage (also called cold storage or near-line storage), co-designed with hardware, to reduce disk/CPU/cooling power by keeping only 8% of total disks spinning. Data is erasure coded and striped across disk groups. Flamingo continues research from Pelican. It generates the best data layout and IO scheduler config per Pelican environment setup. Archival storage gains adoption from government compliance needs, and with AWS Glacier.

- Pergamum co-designs hardware, as an appliance, to keep 95% of disks powered off all the time. NVRAM is added per node, holding signatures and metadata, to allow verification without waking up disks. Data is erasure coded intra and inter disks. Note Tape Library is still an attractive archival storage medium due to improvements in cost per capacity, reliability, and throughput.
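
Both archival systems above rely on erasure coding across disks. As a minimal sketch of the principle, the example below uses a single XOR parity block, which tolerates the loss of any one block in a stripe. This is a deliberate simplification and an assumption of this example: the systems above are not stated to use XOR parity, and production erasure codes typically tolerate multiple failures. The function names are hypothetical.

```python
from functools import reduce


def xor_blocks(blocks):
    """Byte-wise XOR of equally sized blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def encode(data_blocks):
    """Return the stripe: the data blocks followed by one parity block."""
    return list(data_blocks) + [xor_blocks(data_blocks)]


def recover(stripe, lost_index):
    """Rebuild the block at lost_index from all surviving blocks of the stripe."""
    survivors = [block for i, block in enumerate(stripe) if i != lost_index]
    return xor_blocks(survivors)
```

Rebuilding a block requires reading every surviving block of the stripe, which is why data layout and IO scheduling matter so much when most disks are kept spun down.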

OLTP/OLAP database

- CockroachDB builds a cross-regional SQL database that enables serializable ACID, an opensource version of Google Spanner. It overcomes the TrueTime dependency by using Hybrid-Logical Clock (HLC) instead. CockroachDB maps SQL schema to key-value pairs (KV) and stores them in RocksDB. It uses Raft to replicate partition data. It built novel Write Pipelining and Parallel Commit to speed up transactions. Another contemporary is YugabyteDB, which reuses PostgreSQL for the query layer and replaced RocksDB with DocDB, and had an interesting debate with CockroachDB (YugabyteDB challenges CockroachDB, Zhihu YugabyteDB/CockroachDB debate, CockroachDB rebuts YugabyteDB).

- TiDB is similar to CockroachDB. It focuses on a single region and serializes with a timestamp oracle server. It implements transactions following Percolator. TiDB moved a step further to combine OLTP/OLAP (i.e. HTAP) by Raft replicating an extra columnar replica (TiFlash) from the baseline row format data. Among contemporaries (Greenplum’s related works) that support both OLTP/OLAP, besides HyPer/MemSQL/Greenplum, Oracle Exadata (OLTP) improves OLAP performance by introducing NVMe flash, RDMA, and an in-memory columnar cache; AWS Aurora (OLTP) offloads OLAP to parallel processing on cloud; F1 Lightning replicates data from OLTP databases (Spanner, F1 DB) and converts it into columnar format for OLAP, with snapshot consistency.

- OceanBase is a distributed SQL database, MySQL-compatible, and supports both OLTP/OLAP with hybrid row-column data layout. It uses a central controller (Paxos replicated) to serialize distributed transactions. The contemporary X-Engine is a MySQL-compatible LSM-tree storage engine, used by PolarDB. X-Engine uses FPGA to do compaction. Read/write paths are separated to tackle traffic surges. X-Engine also introduced a Multi-staged Pipeline where tasks are broken small, executed async, and pipelined, which resembles SeaStar. PolarDB features pushing down queries to Smart SSD (Smart SSD paper), which computes within the disk box to reduce filter output. Later PolarDB Serverless moved to a disaggregated cloud native architecture like Snowflake.

- AnalyticDB is Alibaba’s OLAP database. It stores data on shared Pangu (HDFS++), and schedules jobs via Fuxi (YARN++). Data is organized in hybrid row-column data layout (columnar in row groups). Write nodes and read nodes are separated to scale independently. Updates are first appended as incremental deltas, then merged, with indexes built on all columns off the write path. The baseline + incremental design resembles Lambda architecture.

- ClickHouse is a recent OLAP database quickly gaining popularity, known as “very fast” (why ClickHouse is fast). Besides the common columnar format, vectorized query execution, and data compression, ClickHouse is made fast by “attention to low-level details”. ClickHouse supports various indexes (besides full scan). It absorbs updates via MergeTree (similar to LSM-tree). It doesn’t support (full) transactions due to its OLAP scenario.

- AWS Redshift is the new generation cloud native data warehouse based on PostgreSQL. Data is persisted at S3, while cached at local SSD (which is like Snowflake). Query processing nodes are accelerated by AWS Nitro ASIC. It is equipped with modern DB features like code generation and vectorized SIMD scan, external compilation cache, AZ64 encoding, Serial Safe Net (SSN) transaction MVCC, Machine Learning backed auto tuning, semi-structured query, and federated query to datalake and OLTP systems, etc.

- Log is database 1 / Log is database 2 / Log is database 3. The philosophy was first seen on AWS Aurora Multi-master. Logs are replicated as the single source of truth, rather than syncing pages. The page server is treated as a cache that replays logs. In parallel, CORFU and Delos build the distributed shared log as a service. Helios Indexing, FoundationDB, and HyderDB build databases atop shared logging.
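
The Hybrid-Logical Clock (HLC) mentioned in the CockroachDB item above can be sketched as follows. The update rules follow the commonly published HLC algorithm; this is not CockroachDB's code, and the class name, method names, and the injected `physical_clock` callable are assumptions for the example. A timestamp is a pair of a physical component and a logical counter, compared lexicographically, so causality is preserved even when a node's wall clock lags.

```python
class HybridLogicalClock:
    def __init__(self, physical_clock):
        self.physical_clock = physical_clock  # callable returning an integer time
        self.l = 0  # largest physical time seen so far
        self.c = 0  # logical counter that breaks ties within the same l

    def now(self):
        """Timestamp a local event or an outgoing message."""
        pt = self.physical_clock()
        prev = self.l
        self.l = max(prev, pt)
        self.c = self.c + 1 if self.l == prev else 0
        return (self.l, self.c)

    def update(self, remote):
        """Merge the timestamp carried by an incoming message."""
        remote_l, remote_c = remote
        pt = self.physical_clock()
        prev = self.l
        self.l = max(prev, remote_l, pt)
        if self.l == prev and self.l == remote_l:
            self.c = max(self.c, remote_c) + 1
        elif self.l == prev:
            self.c += 1
        elif self.l == remote_l:
            self.c = remote_c + 1
        else:
            self.c = 0
        return (self.l, self.c)
```

For example, if node A stamps a message at physical time 100 and node B's wall clock still reads 90, B's `update` returns a timestamp ordered after A's, and B's later local events keep increasing from there.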

In-memory database

- HyPer in-memory database has many recognized publications. It pioneers vectorized query execution with code generation, where LLVM is commonly used to compile IR (intermediate representation); and features in Morsel-driven execution scheduling,