Study on Regenerating Code

Background

Erasure-coding is widely used in storage to improve reliability and to reduce storage overhead, compared to the classic 3-replica approach. “Erasure” means the data is totally lost. This is in contrast with error correction techniques, in which we have data but some bits in it is incorrect. Note that besides storing data, erasure-coding related technologies can also be used in network coding such as how to transimit data robustly.

The most commonly used erasure-coding is Reed-Solomon (RS) code. Note that all the computation is on Galois Fields. See An Introduction to Galois Fields and Reed-Solomon Coding. In a word, RS code is linear combination of each data symbols, and in which generator matrix has every submatrix invertible. Galois Fields is generated by a seriers of polynomial operations, but what coding theory actually needs is only a finite field (so that we can represent it in several bytes), which supports all math operations (so that we can calculate), and the major math operation should be easy for computer (e.g. add is XOR).

The RS code is a MDS (maximumal distance separable) code. Actually it is the most classic, simple, and straightforward one. MDS means an (n, k) code is able to recover any k-loss. Each vector in codeword space has at least n-k+1 elements different, so that after n-k losses they are still differentiable and we can revert them back to original data. See Coding Techniques for Repairability in Networked Distributed Storage Systems (Frederique Oggier) chapter 3. The longsurvey also gives important notations widely used in coding theory papers, such as what does (n, k) code means, code generator matrix, parity check matrix, etc.

There are variants of RS code, for example the Local Reconstruction Codes (LRC), which recoverability for reconstruct latency by defining local groups. There are also array codes, in which each node stores an array of symbols rather than one symbol (as in RS). Also, there are codes that only relies on XOR operation, rather than Galois Field arithmetic which is much slower and consumes more CPU. Here’s a brief intro: Erasure Codes for Storage Systems: A Brief Primer (the brief intro also covers regenerating code).

Besides, there’s a Erasure Coding for Distributed Storage Wiki listing bibliography for all sorts of erasure-coding papers including regenerating code. The site is recently somewhat inaccessible. Here’s an archival copy.

Beginning of Regenerating code

The paper Network Coding for Distributed Storage Systems first proposed regenerating code. RS code has bandwidth expansion problem when recovering a failed node. It transmits k * (user data size / k) = user data size, to recover one failure node, which holds (user data size / k) size of data. Regenerating code is proposed to reduce the transmitted bandwidth. The basic approach is that, each node stores an array of symbols rather than 1 symbol in RS. And before transimitting it out, the node do a computation on the array of symbols, and only transmit out the results, so that less size is transmitted out.

1. Network Coding for Distributed Storage Systems [2010, 1353 refs]

http://users.ece.utexas.edu/~dimakis/RC_Journal.pdf

1. very good to read. this is the founding paper of regenerating code

finally I worked out the math the paper is saying.

2. highlights

1. by representing erasure code as a network information flow,

and inspecting min-cut should allow at least M data to flow through

the paper calculates the minimum bounds of repair bandwidth

1. and using the network information flow multicasting theories

2. the appendix part is good. it is the proof of how the bound is obtained

1. the bound is obtained by Fig. 7. G*, which is a sub-graph which can be extracted from any condition of graph G

2. if we could find a larger G* bound (14), we can even calculate a smaller α and β

2. in first case, we don't expand storage data per node, and try minimize

the repair bandwidth. this gives us the MSR code

1. MSR code can almost easily use 0.55x bandwith than plain Reed-Solomn code

or even less if d is increased.

3. in second case, we allow to expand storage data size per node, and this

gives us even lower bound of repair bandwidth. the lowest one is MBR code

4. this paper has been comparing itself with other code schemas

1. replication, ideal erasure codes, hybrid, MSR, MBR

2. hybrid: use 1 full replica and EC codes together. as long as the full replica

is here, we can reduce EC storage repair bandwidth by just copying from the

full replica.

3. others

1. the related works part has good introduction on what works and directions are

in stoarge coding part. and the evaluation and analysis works on it.

The paper “Network Coding for Distributed Storage Systems” uses analysis techniques from network coding theory. It contructs the information flow graph for the code recovering process, and uses max-flow min-cut theorem to duduce the lowest bounds of how much bandwidth needs to be transmitted to recover a failed node.

-

MSR (minimum-storage regenerating) code is where storage space overhead is the same with RS code, but recovery bandwidth is at lowest bound. The recovery bandwidth can be ~0.5x, or with longer code (e.g. 9+9) it can be ~0.2x, compared to what RS code needs.

-

MBR (minimum-bandwidth regenerating) code is where storage space is allowed to expand, but recovery bandwidth is at lowest bound. The recovery bandwidth can be even lower, to ~0.1x, or still less.

There are following papers referencing this paper and give easier-to-understand versions of MSR and MBR bounds. Note that MSR and MBR code can also do MDS recovery, i.e. recover all data from any k nodes (n nodes). So, they have two recovery mode, one is regenerating, another is MDS recovery.

Constructing Regenerating Codes

I have collected ranges of regenerating code implementations for different scenarios. I will list them in this section. Usually, regenerating code yields much less bandwidth overhead when doing recovery. But in general they share several drawbacks

- Computational overhead. Regenerating code usually requires solving more matrix inversion and matrix multiplies when doing recovery, compared to RS code. And the matrix size may be bigger, due to d (count of helper nodes connected by the replacement nodes to do recovery) is usually bigger than k (how many helper nodes RS code needs).

- Besides, RS code has many optimized implementation accumulated by years, while regenerating code may not be able to apply them all and it is relatively new.

- But, although matrix operation overhead is higher. Both RS code and regenerating code need to repeatedly apply matrixes to translate bytes. The later time are same, if which dominates, the computational overhead becomes almost same.

- For MSR code, d<2k-3 is impossible, see paper Optimal Exact-Regenerating Codes for DistributedStorage at the MSR and MBR Points via a Product-Matrix Construction. So the implications are

- MSR code only applies to code with high paritiy count, i.e. storage overhead is almost 2x. But, this doesn’t mean we cannot construct a code that is not at MSR point but still very practically useful.

- Compared to RS code, d is usually much larger than k. That means

- The replacement node has to connect to more helper nodes to recover. Although total bandwidth transimitted is lower, but the total IO count is high.

- RS code can recover n-k losses at most. But regenerating code, due to d is large, can usually recover when there is 1 or 2 nodes are lost. With more nodes lost, MDS recovery is needed. This makes regenerating recovery applies to only limited node failure cases.

Optimal MSR Code by Product-Matrix

Paper Optimal Exact-Regenerating Codes for DistributedStorage at the MSR and MBR Points via a Product-Matrix Construction gives the construct of the optimal exact-regenerating MSR code. It is constructed by the product-matrix framework. Or say, the paper gives a good matrix representation for regenerating codes.

1. Optimal Exact-Regenerating Codes for Distributed Storage at the MSR and MBR Points via a Product-Matrix Construction [2011, 434 refs]

https://people.eecs.berkeley.edu/~rashmikv/papers/product_matrix_codes.pdf

1. key highlights

1. This paper shows the way to construct MBR regenerating code matrix, for any [n, k, d]. good to read, it's not complex

2. it is impossible to construct linear MSR codes for the case d < 2k - 3 of β = 1 when (see [6], [14])

This paper also gives the method

3. compared to "Explicit constructions of high-rate MDS array codes with optimal repair bandwidth [2016, 18 refs]"

the later one gives a practical way to construct row-wise MDS regenerating code to cut bandwidth to 55% without storage overhead increased.

2. for detailed math, see the paper.

The advantages are that the code is at MSR point (for a 9+9 code it can save recovery bandwidth to ~22% of RS code), it is systematic code (i.e. first k nodes contain original user data, so read can be fast), do exact-regenerating (i.e. the recovered node has the same data with the lost one), and it has optimal parameters, i.e. it works for any d >= 2k-2. The math of regenerating recovery is simple, but the MDS recovery has more steps.

The drawback sides of the MSR code are, more computational overhead. Take a (n=18, k=9) code with d=16 as example. For regenerating recovery, it needs to solve a dd matrix inversion, while RS code only needs kk. For MDS recovery, it needs to solve k of (k-1)(k-1) matrix inversions and with several other operations, while MDS needs only one kk matrix inversion. Also, due to the d>=2k-2 limit, this MDS construct only applies to long code, i.e. parity count is almost the same as data fragment count, i.e. ~2x storage overhead at least.

Optimal MBR Code by Product-Matrix

Paper Optimal Exact-Regenerating Codes for DistributedStorage at the MSR and MBR Points via a Product-Matrix Construction also gives the MBR code construct. I think the MBR one is a better work. It is a code at MBR optimal point, allows all (n, k, d) parameters, systematic and exact-regenerating. Also, the computational overhead for regenerating recovery and MDS recover are almost the same with RS code.

The only drawback is, as all MBR codes have, it adds more storage overhead than RS code. I.e. each node needs to store more data than in RS code, and those extra data won’t help data reliability.

In the end, if the extra data overhead is tolerable, MBR code can reduce recovery bandwidth to very low. See paper for the detailed bounds. For 9+9 code, when d=9, the bandwidth ratio compared to RS code is 0.2, storage overhead expansion ratio is 1.8. For d=16, they are ~0.15 and ~1.3.

Row-wise MDS Regenerating Array Code

The paper Explicit constructions of high-rate MDS array codes with optimal repair bandwidth gives an totally different regenerating code construct. Each node stores an array of symbols. Each row across all nodes has a sligtly different coding matrix. The result is, each row independently is an MDS code (actually a RS code), i.e. supporting repair any r failure from any k nodes left. The regenerating recovery is by co-work through rows. Helper nodes read multiple rows, sum them up, and send to the replacement node. The replacement node then decode the corresponding rows out.

1. Explicit constructions of high-rate MDS array codes with optimal repair bandwidth [2016, 18 refs]

https://arxiv.org/pdf/1604.00454.pdf

1. very good paper. it is the row-wise MDS code able to regenerating 1 node failure, with 1/paritiy_count bandwidth per node

2. highlights

1. limitations:

1. regenerating 1 node failure. access all n-1 surviving nodes

2. on theory it supports any number of parity fragments. this paper gives 3

3. row count must be parity_count^(data_frag_count + parity_count). it can be very high for long codes

2. advantages

1. row-wise MDS.

2. the computation complexity for regenerating is low

1. sender side do an extra add for 1/parity_count of the data size

2. receiver side do matrix inversion for 1/parity_count of data size

3. if we set block size of plain EC the same as the per row size here,

I think this coding schema can be very useful

4. update complexity is low because it is row-wise

5. the coding matrix is very easy to construct

3. disadvantages

1. need r^n rows on each node, this can be too large for long codes, such as 9+9

4. referenced other papers who allow all parameters

1. Minimum Storage Regenerating Codes For All Parameters [2016, 14 refs]

https://arxiv.org/pdf/1602.04496.pdf

1. said to allow all parameters

2. Optimal Rebuilding of Multiple Erasures in MDS Codes [2016, 8 refs]

https://arxiv.org/pdf/1603.01213.pdf

1. allow regenerating multiple node failures

3. these papers have their limitations: rely on existential lemmas in large finite fields,

e.g., the Schwartz-Zippel lemma or Combinatorial Nullstellensatz

For advantages, the code is systematic and exact-regenerating. There is no extra storage overhead than RS code. The row-wise MDS allows it to do MDS recovery on each row independently, which is more flexible, and not like other codes which may force you to decode entire array at once. The regenerating recovery, similarly, decodes r rows each time. This may benefit reconstruct reads. The regenerating recovery has relatively low computational overhead when the code has few fragments: (r^n rows / r) * matrix_inversion(r*r). The transimitted bandwidth for recovery is 1/r of RS code. Another major advantage is, because each row is independently coded, the data update cost is very low, per-row based, i.e. “optimal update” as said in paper.

The disadvantages are, when the code has more fragments, i.e. larger n or r, the required row count per node r^n grows very quickly. Actually, for 9+9 code, this construct is not even feasible. For short code however, it is ok.

In summary, this code construct applies for shorter codes (i.e. smaller n and r). While “Optimal MSR Code by Product-Matrix” applies for longer codes (needs r almost the same large as k) in contrary. Besides, I have written a python implementation for the two codes in galois field, see here.

Simple Regenerating Code

The paper Simple Regenerating Codes: Network Coding for Cloud Storage proposed a very simple construct of regenerating code. It combines first RS code and than wrap it by XOR. For regenerating recovery, it only needs fixed amount of bandwidth transimtted, from fixed number of nodes (~4 to 6 nodes), no matter the code length (i.e. different (n, k)). This is an interesting property. For disadvantage, it add storage overhead by 50%, compared to using RS code only. The code is very simple. And there is project using it in Hadoop.

1. Simple Regenerating Codes: Network Coding for Cloud Storage [2011, 140 refs]

https://arxiv.org/pdf/1109.0264.pdf

1. good to read. it use first Reed-Solomn code and then a 3-row XOR array code.

but cross placing chunks, it can do regenerating. If you are willing to give

off 1/3 storage space, the code is very good to implement a regenerating code.

it is being tried on Hadoop.

2. referred by "HDFS-RAID使用Erasure Code来实现HDFS的数据冗余"

http://blog.csdn.net/wk022/article/details/49506643

1. As hadoop is trying to use simple regenerating code to improve EC

https://issues.apache.org/jira/browse/HDFS-3544

2. another for hadoop: Locally Repairable Codes, (LRC)

http://smahesh.com/HadoopUSC/ - XORing Elephants: Novel Erasure Codes for Big Data

3. highlights

1. advantages

1. super simple

2. only needs XOR for regenerating repair, which is much faster than matrix inversion decode

3. regenerating node repair needs constant d==4 other nodes. needs constant 6 chunks (each size M/2/k).

when k is large, the bandwidth saving is significant

2. disadvantages

1. need extra 1/3 storage space

Others

Most regenerating code limits the d>=2k-2, and they can do regenerating recovery only one 1 node is lost. Below paper however gives MSR code construct by all parameters (n, k, d). There are disadvantages though, see details below.

1. Minimum Storage Regenerating Codes For All Parameters [2016, 14 refs]

https://arxiv.org/abs/1602.04496

1. using interference alignment, allow MSR code for all (n, k, d) parameters, which is know to be

impossible previously. but this papper did it by limit to systematic-repair only, need large row count

on each node, and need large galois field size

2. highlights

1. disadvantages

1. limited to systematic-repair, i.e. only repair failed data fragments

2. need a=n^k rows on each node. for long codes like 9+9, row_count=18^9 which can be impossible

3. need very large galois field size, which is unfavorable for real implementation

2. advantages

1. MSR code for all (n, k, d), which is previously known to be impossible in some case.

but systematic-repair only and large galois fields size made it possible

Usually regenerating recovery only fix 1 node, i.e. obtain only one symbol. This paper gives a way to simultaneously rebuild multiple symbols. There are other issues though, see details below.

1. Optimal Rebuilding of Multiple Erasures in MDS Codes [2016, 8 refs]

https://arxiv.org/abs/1603.01213

1. improved from Zigzag code to be able to regenerating multiple erasures.

very few regenerating code can do this

2. highlights

1. using previous work Zigzag codes by same authors

2. advantages

1. besides recover erasured nodes, this code is able to correct errors together

but, isn't checksum the cheaper way to do it? (unless we want to save the space)

2. regenerating code to rebuild multiple erasures simultaneously, this is the main feature.

but for a single node, what's the purpose to obtain all the erasured fragments?

3. disadvantages

1. limited to systematic-repair only

2. need r^(k-1) rows on each node, which is relatively too big

3. need large enough galois finite field

Applying in Production

Regenerating code is reducing recovery bandwith transimitted, with additional computational overhead. To use regenerating code in production systems, there are several aspects to consider

- Is bandwidth already cheap and fast enough, so regenerating code doesn’t actually save much?

- Is network already fast enough, so even bandwith is reduced, recovery latency is not improved much, thus to improve reliability?

- How many traffic is by recoverying a node, or doing reconstruct reads? I.e. is the portion of traffic helped by regenerating code too small?

- How many cases we have only 1 or 2 nodes down, so that regenerating recovery takes place, rather than falling back to MDS recovery?

- How much is the computational overhead compared to the gain by reducing bandwith? RS code implementation has many optimizations; also need to take that into consideration.

- Will the computational overhead make recovery slower? Also, regenerating recovery ususally issues more IOs, will that cause trouble?

- Talk about reduce bandwith, are other engineering improvements more effective than regenerating code? For example, compression before transimit, inline EC instead of write replicas and then fetch and EC them again, tweak the write-amplification introduced by log-strtuctured anyting, improve data placement & migration logic to reduce migration churn, etc. Besides, is upgrading hardware even cheaper than spend dev hours to build software techniques?

- A disadvantage of regenerating code is that it usually can only repair 1 fragment each time. RS code, although cost more bandwidth, can repair all fragments in one run. With multiple fragment failure, the repair-in-one-run and replicate fragments out way of RS code may actually save more bandwidth. Need to check that in production.

Build new technology from paper to real production is no easy work. There are gaps between each steps. Each gap involves great work.

- Discover the new technology from paper or other source. Understand the math. Compare contemporary works and find which applicable to our production. Build simple (python) demo implementations.

- Investigate the real production envronments. Collect the numbers, answer questions, and use data-driven to proove the technology can actually bring more benefits than overheads. Compare other alternative engineering improvments and proove the new technology is the best.

- Investigate into the code, propose the complete design and solution, propose the necessary code changes.

- Pitching management-level to buy-in the new technology solution and get the approval and dev resources.

- … Now we entered the feature development cycle, as described in previous articles. It’s long way too. And don’t forget post-validations …

Searching the Coding Matrix

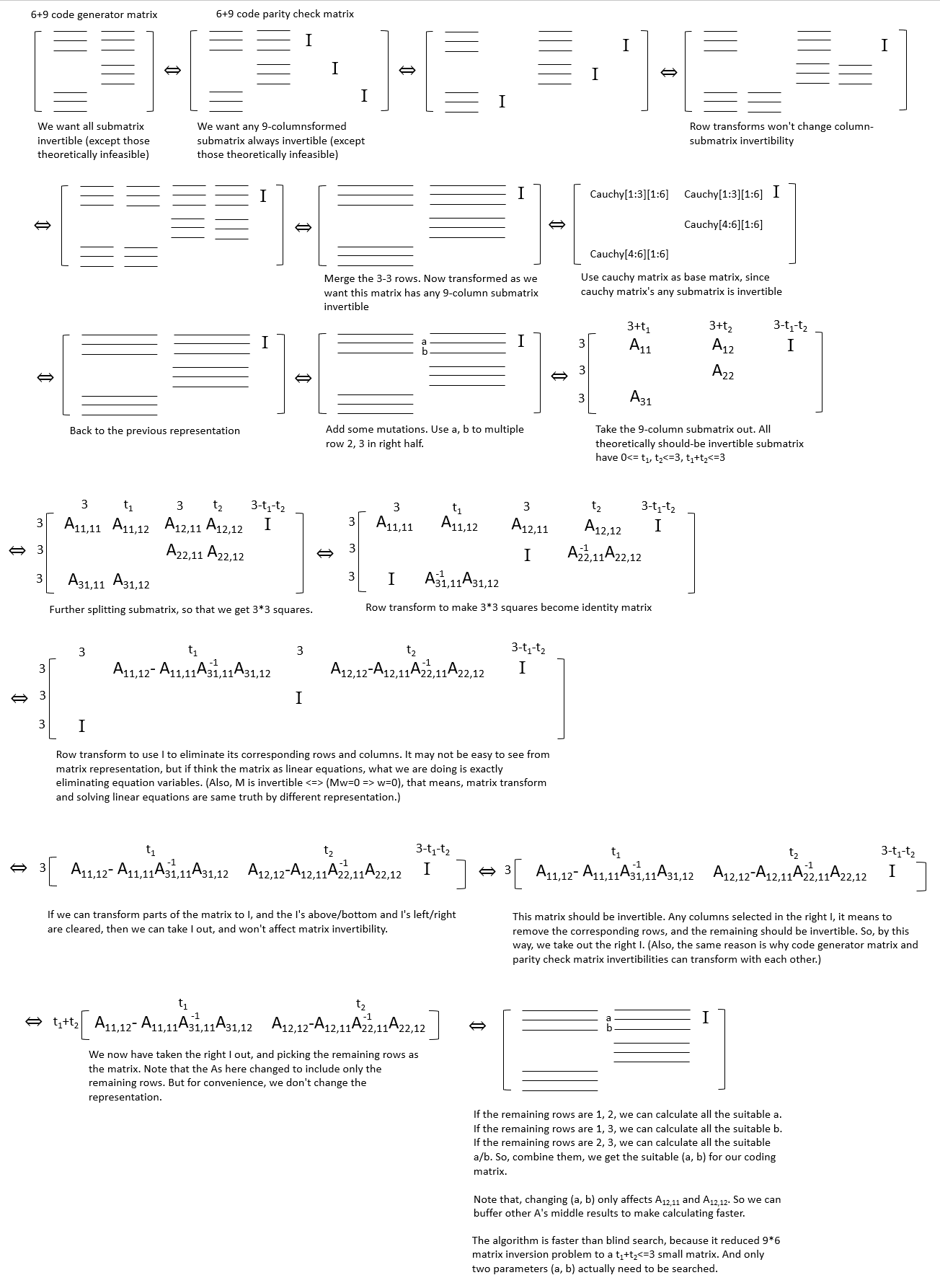

Usually, we can deduce coding matrix from Vandermonde matrix (any column vector formed square submatrix is always invertible), and Cauchy matrix (any submatrix is always invertible). Cauchy matrix based coding matrix may usually have more computational overhead.

But, search coding matrix which has holes in it, or in another word has parities covering overlapped data symbols, can be harder. Below I present one of my ways to mathatically search the possible coding matrix.

In general, setting more constraints, and applying various math results can reduce the search range or matrix size. Some random search algorithms such as Simulated Annealing can be used. And, running things in parallel on MapReduce can help the search. If we have supercomputers, we can search it their. Supercomputers make up the short of math. And sometime the math is even too complex to be possible. I think that’s why they are super important in all sorts of engineerings and sciences.

Post Notes

Worth attention that, regenerating code doesn’t reduce disk IO count, doesn’t reduce network IO count. What it does is to reduce only the network traffic. And it needs more CPU, and cannot repair more than one failures at once (where RS code can repair all and replicate to save bandwidth too).

There are other families of codes, which can be more attractive for storage systems:

- Locality codes such as Pyramid Codes / LRC that reduce IO count thus lower down network traffic two

- XOR based codes, too many types, which have low CPU overhead, faster recover speed and fan-in; but usually cannot tolerate too many failures, or they need extra space.

Create an Issue or comment below