Vision and Strategy to the Storage Landscape

(The text below was generated by AI translation. The original article was written in Chinese; see Vision and Strategy to the Storage Landscape (Chinese Simplified).)

Vision & Strategy: Insight, Foresight, and Strategy

Vision and Strategy begin with asking questions: where should we be in 1 year, 3-5 years, or even 10 years? What should the team and departments be doing, and how should they be working? Vision is not about chasing the latest technology trends and learning or applying them. Vision means that the “stakeholders” need to correctly predict technology trends, determine investment directions, and support their conclusions with data-driven and systematic analysis.

Overall, this way of thinking is closer to product management, business analytics, and market research than to technical development work. Internally, of course, it also requires a solid technical foundation (see A Holistic View of Distributed Storage Architecture and Design Space). Externally, it requires surveying the market and competitors, as well as our own position. Looking forward, it requires forecasting trends and scale.

Why are Vision and Strategy needed? There are many reasons:

- Career Development: As one advances in level, the expectation for the position gradually shifts from receiving input to providing output. For example, a junior individual developer focuses on completing assigned tasks and receives input from managers. In contrast, an experienced individual developer often needs to formulate project strategies (including technical ones) and regularly provide input to managers, such as possible innovation directions and team development opportunities. The work of a manager is closer to investment (see the Market Analysis section); gaining insight into future trends and executing the right strategies is one of their core responsibilities. See [95].

- Long-term Planning: Higher positions are expected to manage longer time horizons. For example, a junior developer typically needs to plan for the next 3 months, while an experienced individual developer often needs to plan for the next year. A manager plans even further ahead, often looking 3 to 5 years into the future. Beyond project management plans, this involves foresight and strategy for team development. See [95].

- Leadership: Leadership means attracting followers through vision and charisma in an environment of equal communication (manage by influence, not authority). Developing leadership requires the individual to become a Visionary or Thought Leader. When others interact with the individual, they should always feel inspired and motivated. Leadership is also one of the requirements for the position of manager. See [96].

- System Architecture: A good system architecture often lasts more than 10 years, and given the slower iteration speed of storage systems (data must not be corrupted), development may take 5 years (until maturity and stability). Architects essentially work in a future-oriented way: they need to understand future market demands and make decisions based on future technological developments. In particular, hardware capabilities develop at an exponential rate. On the other hand, architectural decisions need to be mapped to financial metrics.

- Innovation: Innovation is a daily task (see the Market Analysis section) and also involves finding growth opportunities for the team. Innovation includes both identifying technological development trends and analyzing changes in market demand, meaning having insight into taking existing systems “a step further,” as well as questioning what the best storage system should be (Gap Analysis). Finding innovation requires Vision, and implementing innovation requires Strategy.

This article mainly focuses on storage systems within the context of the (cloud) storage industry. The following sections will sequentially explain “Some Methodologies,” “Understanding Stock Prices,” “The Storage System Market,” “Market Analysis,” “Hardware in Storage Systems,” and “Case Study: EBOX.”

- The Methodology section builds the thinking framework for Vision and Strategy.

- The Stock Price section analyzes how the stock price works, identifies the company’s goals, and maps them to the team.

- The Market section provides an overview of the competitive landscape of storage systems, analyzing key market characteristics, disruptive innovations, and value.

- The Hardware section models hardware capabilities and their rate of development, with an in-depth look at the key points.

- The Case Analysis section applies the analytical methods of this article to an example, yielding many interesting conclusions.

Table of Contents

- Some Methodologies

- Understanding Stock Price

- Storage System Market

- About Market Analysis

- Hardware in Storage Systems

- Case Study: EBOX

- Summary

- References

Some Methodologies

Vision and Strategy involve a series of considerations about the future and trends, as well as an understanding of enterprise architecture to ensure projects achieve tangible returns on investment. More importantly, the predictions must be supported by systematic analysis and data. Overall, this way of thinking is closer to product management, business analytics, and market research than to purely technical development work.

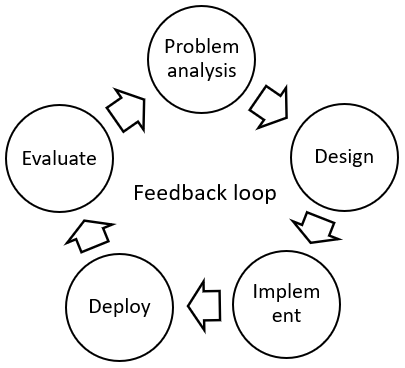

This chapter introduces methodologies related to Vision and Strategy. It covers, in order, Critical Thinking, Case Interview, Strategic Thinking, Business Acumen, and information gathering. The content mainly outlines the general framework; what matters is the thinking, practice, and experience.

Critical Thinking

Critical Thinking has little to do with criticism, which is what the usual Chinese translation of the term suggests; it is closer to Problem Solving. “Critical” is more akin to the “critical” in Critical Path. The English terms are kept throughout this article because direct translation into Chinese somewhat distorts their meaning. LinkedIn’s Critical Thinking course [91] is a good learning resource. The course is comprehensive; this article lists only the interesting or important points, here and in the sections below.

Some key points of Critical Thinking:

- Address the root cause rather than the symptoms. Do not start directly on the task assigned by your boss. First, trace upstream and downstream and talk to stakeholders to find the real root problem that needs to be solved. Next comes defining the problem, where the most important parts are the Problem Statement and the goal. Before starting, present your definition to other teams and members to check that it is reasonable.

- Work efficiently. You need to switch repeatedly between the High Road and the Low Road, zooming in and out. The High Road is a bird’s-eye view: following the 80/20 Rule, find the effective 20% (Don’t Boil the Ocean). The Low Road is a ground-level view, such as analyzing specific data. The key is to return to the High Road from time to time to verify the business value (Business Impact) of the work (Don’t Polish the Dirt).

- “Critical” Path. The path you take to solve a problem should be a critical path (in the graph-theory sense), where each task node is necessary and none is redundant (related to MECE, discussed below). Doing work that the boss doesn’t care about is meaningless. What you provide is a professional service, and purchasing your service is expensive, so don’t waste the client’s funds. Individual developers should not only take the Low Road. Finally, carefully consider the priority of tasks.

- Some Tools. The “5 Whys” is used to trace root causes. The “Seven So-whats” is used to infer the consequences of actions. “We did it this way before” is a “bad smell” that should trigger Critical Thinking: does yesterday’s strategy fit today? First Principles, put plainly, means breaking a problem down (Top-down) and then adding the parts back up (Bottom-up) to arrive at a reasoned calculation; this is similar to systems analysis methods. Shadow Practice means taking, for example, a Gartner report and testing whether you can independently analyze the topic and reach the same conclusions. Switching Perspectives means, for example, explaining your problem to someone else and seeing how they reframe it. Heuristic questions include: looking back over the past 10 years, if you were to start over, what would you do differently? Another is: how would you double your output metrics (Performance Metrics)?

- To some extent, attention to both the high level and the details is necessary. High-level abstract thinking has great power, but if you live in the “cloud” for too long, you can easily fall into stereotypes and be unable to self-correct (something managers need to avoid). Self-correction requires returning to the detail level to test existing assumptions; in other words, First Principles.

Case Interview

Case Interview [92] belongs to the field of Business Analytics or Business Consulting and is part of the interview process at consulting firms such as McKinsey. However, the book Case Interview Secrets by Victor Cheng covers a large number of frameworks and examples for analyzing business problems, which are valuable in their own right; the “interview” aspect is secondary.

Some Key Points of Case Interview:

- Estimating Using Proxy. Quick estimation and mental calculation are fundamental requirements in business consulting. There are various estimation methods, among which the Proxy method is quite interesting: for example, estimating the revenue of a newly opened store by counting street foot traffic or the occupancy rate of nearby stores. The Proxy method can be taken further by stratifying by demographics, identifying Proxy variables, breaking down the problem, and switching to another Proxy for cross-verification.

- Mindset. The thinking approach in business consulting overlaps significantly with Critical Thinking: Don’t Boil the Ocean, time is expensive, and you are providing a professional service. It also involves being an Independent Problem Solver: if you were placed alone in a department of a Fortune 500 company, could you convince the client (see Conclusiveness below), solve problems, and maintain your employer’s image? Strong Soft Skills are essential.

- Issue Tree Framework. A classic analytical method in Case Interviews is the Issue Tree Framework, for example the Profitability framework. It requires first establishing a hypothesis, then breaking down the problem layer by layer. The breakdown must pass the MECE test and the Conclusiveness test: the former means no overlap and no omission, while the latter means that if all branches are True, the conclusion of the parent node cannot be denied. The analysis proceeds layer by layer down the tree, then aggregates back up to the root, often adjusting the tree structure along the way. There are many templates for the Issue Tree Framework, but in practice the tree is usually customized to the problem.
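The top-down breakdown and bottom-up aggregation of an issue tree can be illustrated with a small sketch. This is only one possible way to model it, not a method taken from the book or course: the `IssueNode` class, the branch names, and all figures are hypothetical. Here MECE is approximated by requiring that sibling branches add up exactly to their parent.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class IssueNode:
    """A node in an issue tree. Leaves hold a signed amount; parents sum their children."""
    name: str
    amount: float = 0.0  # used only when the node is a leaf
    children: List["IssueNode"] = field(default_factory=list)

    def total(self) -> float:
        # Bottom-up aggregation: a leaf reports its own amount,
        # a parent is the sum of its branches.
        if not self.children:
            return self.amount
        return sum(child.total() for child in self.children)

    def largest_leaf(self) -> "IssueNode":
        # Top-down drill: at each level follow the branch with the
        # largest absolute contribution until a leaf is reached.
        node = self
        while node.children:
            node = max(node.children, key=lambda c: abs(c.total()))
        return node


# Hypothetical figures, for illustration only. Costs are negative so that
# the branches add up to the parent.
profit = IssueNode("Profit", children=[
    IssueNode("Revenue", children=[
        IssueNode("Product A sales", 600.0),
        IssueNode("Product B sales", 400.0),
    ]),
    IssueNode("Cost", children=[
        IssueNode("Fixed cost", -300.0),
        IssueNode("Variable cost", -500.0),
    ]),
])

print(profit.total())              # aggregated back up to the root
print(profit.largest_leaf().name)  # leaf reached by following the largest branch
```

In a real analysis the tree is rebuilt as hypotheses are confirmed or rejected; the sketch only shows the mechanics of walking down and summing back up.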

Strategic Thinking

Strategic Thinking is used to formulate company strategies, especially long-term strategies, and is often linked with Decision Making. It is the action plan and navigation map for where the company should be positioned 3 to 5 years, or even 10 years, into the future. LinkedIn’s Strategic Thinking course [93] provides more explanation.

Some key points of Strategic Thinking:

- Win the Game. Compared to Critical Thinking, which only involves “me” and “the problem,” Strategic Thinking requires winning the “game,” adding “opponents” into the picture. In the context of market analysis, its perspective is closer to Porter’s Five Forces Analysis.

- Observation. Strategic Thinking first requires observing people and opponents, paying attention not only to trends but also to micro-trends. One key point is the Bad Smell, which applies not only to programming but also to organizations and culture, such as hearing phrases like “We’ve never done it this way” or “We used to do it this way.” Another key point is Don’t Be Surprised. “Surprise” is a management term; if you feel surprised, your observation was insufficient. After observation comes reflection: “It doesn’t take time, it takes space” (see the course section Embrace the strategic thinking mindset).

- Action. Deciding to do something means deciding not to do something else. Doing nothing is also a decision. Attention should be paid to the multiplicative effect in the value chain: your time -> investment in tasks -> your strategy -> effectiveness at the company level. Ask yourself, 3 to 5 years from now, how will “I” win the game, and where will “I” be? Action means establishing a breakdown of tasks from long-term to short-term (related to the Issue Tree Framework), ultimately mapping to tactics, i.e., specific tasks executable daily. During execution, occasionally switch between High Road and Low Road (related to Critical Thinking).

- Making Informed Strategy. A good strategy does not need to be innovative; the focus of strategy is decision making. First, consider market trends and the classic Porter’s Five Forces analysis. Collect perspectives from different sources, including both new and established groups, and especially from differing viewpoints. Map out your assets and allies. Map out your constraints, especially structural obstacles. Also conduct a SWOT analysis. Place them on the action map described above, which needs to be realistic and attainable.

- Gaining Support. How do you gain support from your boss, colleagues, and employees? Don’t rush to announce your strategy or plan in meetings; there is a lot of work to do beforehand. First, systematically meet with stakeholders to discuss your plan, get feedback, and address questions. Expect a lot of opposition; the key is to anticipate all possible objections (related to Don’t Be Surprised) and to reach consensus before the meeting using appropriate concessions and negotiation skills (related to BATNA). Finally, ensure all decisions and tasks are accountable, for example through emailed meeting summaries and regular timeline reviews.

- Monitoring Execution Progress. Standard project management is used during strategy execution. More importantly, set expectations and state assumptions. The environment is constantly changing, so frequently re-examine assumptions and ask whether there are better alternatives. Reviews should be conducted both upfront and retrospectively. High-risk parts require earlier and more frequent execution.

Business Acumen

Business Acumen explains how business is conducted from the company’s perspective, as well as how to advance and optimize various parts (Pull the Lever). It covers reading financial reports, business models, strategies, operations, and more. LinkedIn’s Developing Business Acumen course [94] offers further elaboration.

Some key points related to this article in Business Acumen:

- Financial Reports: Corresponding to company financial statements, the Profit & Loss statement (P&L statement) can be broken down layer by layer: Revenue -> minus COGS -> Gross Profit -> minus Operating Expenses (SG&A) -> Operating Profit -> minus interest, taxes, depreciation, etc. -> Net Income. There are many adjustable “levers” (Pull the Lever) related to financial performance, corresponding to the Profitability Issue Tree Framework mentioned above. Some are long-term, such as R&D and facility construction, while others are short-term, like production cuts and layoffs. You can try to draw conclusions from past financial reports and compare them with the management reports (Financial Brief) released by the company.

- Business Model: The business model defines how to profit from producing goods. From the perspective of raw material processing, there is the Value Chain; from the perspective of business growth, there is the Growth Strategy; from the investment perspective, there is ROI; and from the cost perspective, there are concepts such as CapEx, Fixed Cost, and Variable Cost.

- Operation: The business model drives company strategy and personnel allocation, personnel drive operations, and ultimately financial performance is reflected in the financial reports. Centered around operations, first comes Strategy, as mentioned earlier. The company’s investment portfolio forms the Initiative Pipeline, which unfolds step by step to realize the company’s future. Marketing strategy selects the target customers and helps the company retain them. R&D is often considered together with Mergers and Acquisitions, which shorten time to market and allow market share to be acquired. In competition, protecting products requires strategy, such as rapid release, or copyrights and patents. Personnel strategy involves how to find people, training, organizational structure, turnover rate, etc. Observing the open positions at various job levels in a company can reveal its personnel strategy, which can in turn be used to infer its business strategy.
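The layer-by-layer P&L breakdown described under Financial Reports can be written as a few subtractions. The sketch below uses made-up figures and a hypothetical function name (`profit_and_loss`); it also simplifies by holding interest and taxes fixed when a lever is pulled, which a real statement would not do.

```python
def profit_and_loss(revenue, cogs, operating_expenses, interest_tax_other):
    """Walk the P&L from Revenue down to Net Income and return each level."""
    gross_profit = revenue - cogs
    operating_profit = gross_profit - operating_expenses
    net_income = operating_profit - interest_tax_other
    return {
        "revenue": revenue,
        "gross_profit": gross_profit,
        "operating_profit": operating_profit,
        "net_income": net_income,
        "net_margin": net_income / revenue,
    }


# Hypothetical figures, for illustration only.
base = profit_and_loss(1000.0, 400.0, 350.0, 70.0)

# Pull one lever: cut operating expenses by 10%.
# Simplification: interest and taxes are held constant.
leaner = profit_and_loss(1000.0, 400.0, 350.0 * 0.9, 70.0)

for label, pnl in (("base", base), ("leaner", leaner)):
    print(label, pnl["net_income"], f"{pnl['net_margin']:.1%}")
```

Comparing the two results shows how a single lever propagates down to Net Income, which is the same exercise the Profitability issue tree formalizes.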

Information gathering

This section briefly describes how to collect market information to support Vision and Strategy analysis.

- “Underwater” information. Much cutting-edge and valuable information is unpublished. For example, paper authors often know about a valuable research direction a year in advance and start working on it. If you only read the papers, you will learn about it a year later. The same applies to university labs, corporate research institutes, open-source communities, and so on. Obtaining “underwater” information relies more on social interaction: participating in conferences, face-to-face communication, finding collaborators, and mutual benefit. On the other hand, companies have real customers and supply chains, from which it is easier to spot potential market trends or even make decisions.

- Investment. Investment news can provide insights into new technology trends. Compared to reading papers, technologies that receive investment have been “money-validated”, and the amount of investment can be used to gauge their strength. Common investment news includes startups, financing rounds, and acquisitions, or a new company suddenly having the “money” to place articles in various media to promote itself. On the other hand, promising papers often quickly receive funding and turn into startups, or at least launch websites.

- Research reports. Many market research firms, such as Gartner and IDC, are happy to predict future directions. Although companies may pay for promotion, well-funded companies at least indicate that the direction has development prospects. More examples will be seen in the Storage Systems Market chapter.

Understanding Stock Price

From the company’s perspective, an extremely important goal is the growth of the stock price (sometimes even the sole goal). What kind of stock price growth is reasonable? How can stock price growth be mapped to actual products? For departments or teams, what kind of objectives need to be achieved to support the stock price?

This goal is further broken down into a 3-5 year plan for the team, mapped to Vision and Strategy. In other words, stock price analysis can tell the team how well they should perform. Although stock price may seem unrelated to Vision and Strategy, it is actually an excellent entry point.

What constitutes the stock price

The key to understanding stock prices is the Price-Earnings Ratio (P/E), that is, the ratio of the share price to earnings per share.

- Think of a stock as a savings passbook: the reciprocal of the P/E ratio is its interest rate. Here, the price is the Share price.

Earnings correspond to Earnings Per Share (EPS). It is obtained by dividing the company’s Net Income, less preferred dividends, by the total number of shares (Average common shares).

- In the EPS formula, preferred dividends are the dividends paid on preferred stock. They can be ignored, as preferred stock is rarely used and the amounts are usually small [48].
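Written out, the two definitions above take the standard form below. The term names follow the text; no other notation is assumed.

$$
\text{P/E} = \frac{\text{Share price}}{\text{EPS}},
\qquad
\text{EPS} = \frac{\text{Net income} - \text{Preferred dividends}}{\text{Average common shares}}
$$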

By substituting earnings per share into the price-to-earnings ratio formula, we can find:

- The reciprocal of the price-to-earnings ratio is the company’s earnings divided by its market capitalization (the sum of all shares). Imagine the company as a huge passbook; the reciprocal of the P/E ratio is the interest rate of the “company passbook.”

- In other words, the P/E ratio calculates how many years the “company passbook” needs for its “interest” to cover the market value. That is, the P/E ratio indicates how many years it takes for the company to break even.
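The substitution can be spelled out as follows, ignoring preferred dividends as discussed above and taking market capitalization as share price times the number of shares:

$$
\frac{1}{\text{P/E}}
= \frac{\text{EPS}}{\text{Share price}}
\approx \frac{\text{Net income} / \text{Average common shares}}{\text{Share price}}
= \frac{\text{Net income}}{\text{Market capitalization}}
$$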

How high is the “company passbook” interest rate mentioned above in reality? Take MSFT [49] as an example:

- Interest rate = 1 / P/E = 1 / 37.32 = 2.68%.

- In comparison, the 30-year US Treasury yield is 4.6% [50]. (The 3-month US Treasury yield during the same period is even higher, about 5.3%.)

The US Treasury yield is considerably higher than the interest rate of the “company passbook” above. Relative to its stock price, the company earns less than risk-free government bonds would. Why?

- Traders believe that although the company’s current profitability is insufficient, the stock price may appreciate in the future, so they continue to buy, causing the stock price to rise and the P/E ratio to increase.

- In other words, the price-to-earnings ratio reflects expectations for the company’s future, that is, the expectation that the stock price will increase [52].

- If the company’s outlook improves, more of the stock is bought and the price-to-earnings ratio rises. Conversely, if the company performs poorly, the price-to-earnings ratio falls. If the company is expected to maintain its current performance, the price-to-earnings ratio should remain stable.

On the other hand, net profit can be further broken down, mapping to market size:

- Net profit equals the company’s revenue multiplied by net profit margin.

- Revenue can be further broken down into market size and market share.
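Putting the two steps together with the P/E definition gives a chain from market size to share price. This is a restatement of the decomposition in the text, again ignoring preferred dividends:

$$
\text{Net profit} = \text{Revenue} \times \text{Net profit margin}
= \text{Market size} \times \text{Market share} \times \text{Net profit margin}
$$

$$
\text{Share price} = \text{P/E} \times \text{EPS}
\approx \text{P/E} \times \frac{\text{Market size} \times \text{Market share} \times \text{Net profit margin}}{\text{Average common shares}}
$$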

From this, the composition of the stock price can be summarized:

- First is the company’s profitability, which depends on market size, market share, and net profit margin. Profit compared to the company’s market value is reflected as an interest rate, corresponding to the inverse of the price-to-earnings ratio.

- Then there is the traders’ expectation for the company’s future, also reflected in the price-to-earnings ratio. Comparing the implied interest rate with the risk-free government bond rate indicates the strength of that belief.

How fast should the stock price rise?

How fast should the stock price increase? It should cover the opportunity cost and risk premium; otherwise, traders would choose to sell the stock and buy risk-free government bonds. Besides price appreciation, another return to stockholders is dividends.

- Opportunity cost corresponds to the risk-free rate, usually measured by short-term government bond yields.

- Dividend yield is the dividend income per share as a proportion of the stock price.

- Risk premium: stocks carry higher risk than government bonds, so traders demand extra returns.

First, let’s look at how the dividend yield is calculated. Dividends come from the company’s net profit, which is proportionally allocated and distributed per share.

- For simplicity, the dividend yield can generally be looked up directly [51] on stock trading websites.

- Dividend Yield can be broken down into the EPS payout ratio divided by the price-to-earnings ratio. The higher the P/E ratio, the lower the dividend yield.

- EPS Payout Ratio refers to the proportion of a company’s net profit distributed as dividends; it is also equal to the proportion of earnings per share paid out as dividends. The EPS payout ratio is generally not affected by stock price fluctuations. It can also be found on stock trading websites.
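The relationships described so far in this section can be summarized as follows. The first line restates the requirement that price appreciation plus dividends cover the opportunity cost and the risk premium; the second is the dividend yield decomposition from the list above.

$$
\text{Required share price growth} = \text{Risk-free rate} + \text{Risk premium} - \text{Dividend yield}
$$

$$
\text{Dividend yield}
= \frac{\text{Dividend per share}}{\text{Share price}}
= \frac{\text{EPS} \times \text{Payout ratio}}{\text{Share price}}
= \frac{\text{Payout ratio}}{\text{P/E}}
$$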

Next, let’s look at the calculation of the risk premium, here using the common CAPM [55] asset pricing model.

- Risk Premium can be obtained by multiplying the Beta coefficient by the equity risk premium (ERP).

- Beta Coefficient reflects the volatility of a stock’s price relative to the market average [53], and can usually be found directly on stock trading websites [49].

- Equity Risk Premium is obtained by subtracting the risk-free rate from the expected market return. The expected market return can be derived from an index fund, commonly using the S&P 500 [56]. The risk-free rate was discussed earlier.

- In CAPM, the Cost of Equity is exactly the sum of the previously mentioned risk-free rate and the risk premium here. The cost of equity does not depend on the stock price but is determined by the market environment.
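In the usual CAPM notation, with $R_f$ the risk-free rate, $E[R_m]$ the expected market return, and $\beta$ the Beta coefficient (symbols introduced here for brevity), the list above reads:

$$
\text{ERP} = E[R_m] - R_f,
\qquad
\text{Risk premium} = \beta \times \text{ERP}
$$

$$
\text{Cost of equity} = R_f + \beta \,\bigl(E[R_m] - R_f\bigr)
$$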

Applying the previous formula, we can now calculate how fast the stock price should rise. Taking MSFT stock as an example:

- The risk-free rate is taken from the 30-year US Treasury bond, 4.6%. The dividend yield is looked up directly and taken as 0.72%. The Beta coefficient is looked up directly and taken as 0.89. The expected market return is taken as the average growth rate of the US S&P 500 over the past five years, 12.5% (remarkable).

- Share price growth (annual) = 0.046 - 0.0072 + 0.89 * (0.125 - 0.046) = 10.9%. That is, the share price needs to increase by 10.9% in one year to satisfy the trader’s cost-benefit balance.

- The relatively high share price growth requirement comes partly from the higher US Treasury interest rate that year and partly from the strong upward trend of the US stock market.
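The calculation above is easy to reproduce for other stocks. The sketch below uses the same inputs as the MSFT example; the function names are chosen here for illustration and are not from any library.

```python
def cost_of_equity(risk_free, beta, market_return):
    """CAPM cost of equity: risk-free rate plus beta times the equity risk premium."""
    return risk_free + beta * (market_return - risk_free)


def required_price_growth(risk_free, dividend_yield, beta, market_return):
    """Annual share price growth needed once dividends are counted toward the return."""
    return cost_of_equity(risk_free, beta, market_return) - dividend_yield


# Inputs from the MSFT example in the text.
growth = required_price_growth(
    risk_free=0.046,       # 30-year US Treasury yield
    dividend_yield=0.0072,
    beta=0.89,
    market_return=0.125,   # five-year average S&P 500 growth
)
print(f"{growth:.1%}")  # 10.9%
```

Swapping in another stock’s dividend yield and Beta gives its required growth under the same market assumptions.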

It can be seen that in a high-interest-rate bull market, traders have stringent profit expectations for companies. If the share price does not meet expectations, traders will incur losses due to opportunity costs or risk premiums, leading them to sell the stock, which lowers the P/E ratio and share price. Where is the final share price equilibrium point? Assuming the share price no longer changes:

- Continuing with the previous values, take the current stock price as $420 and keep the other assumptions. Assume the dividend payout ratio (26.9%) and earnings per share ($11.25) remain unchanged. A lower stock price and lower P/E ratio increase the dividend yield, which then balances the opportunity cost and risk premium.

- Stable-point share price = (0.0072 * 420) / (0.046 + 0.89 * (0.125 - 0.046)) = 26.0. At this point, the P/E ratio is 2.31, and the dividend yield exactly equals the stock’s cost of equity, 11.6%.

- Apart from the low stock price, this appears to be a good stock. Indeed, there are many similar real stocks [57] with low stock prices, high dividend yields, and low P/E ratios. Note that this article is for theoretical analysis only, does not constitute any prediction of stock price movements, and does not constitute any investment advice.
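The equilibrium point can be checked numerically. The sketch below assumes, as the text does, that the dividend per share stays fixed at 0.72% of $420 and that the price stops changing, so the dividend yield alone must equal the cost of equity. The function name `stable_point` is illustrative.

```python
def stable_point(dividend_per_share, eps, cost_of_equity):
    """Price at which the dividend yield alone covers the cost of equity."""
    price = dividend_per_share / cost_of_equity
    pe_ratio = price / eps
    dividend_yield = dividend_per_share / price
    return price, pe_ratio, dividend_yield


# Inputs from the text: dividend yield 0.72% at a $420 share price, EPS $11.25.
dividend_per_share = 0.0072 * 420
coe = 0.046 + 0.89 * (0.125 - 0.046)  # CAPM cost of equity from the earlier example

price, pe_ratio, dividend_yield = stable_point(dividend_per_share, 11.25, coe)
print(f"price={price:.1f}  P/E={pe_ratio:.2f}  yield={dividend_yield:.1%}")
```

The result matches the figures quoted above: a price near $26, a P/E near 2.31, and a dividend yield equal to the 11.6% cost of equity.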

There are some additional inferences:

- Stock price increase expectations are unrelated to company market value. Assume the dividend yield is ignored, since it is usually very low for tech companies. From the formula above, the stock price increase demanded by traders depends on the background market interest rate and risk. Neither the company’s stock price nor its market capitalization appears in the formula (ignoring dividend yield).

- High stock price is a negative factor. Now consider the dividend yield. It depends on company factors and is determined by earnings per share and the dividend payout ratio. The stock price appears in the denominator, so a higher price lowers the dividend yield, making it harder to meet the expected stock price increase.

- High P/E ratio is a negative factor, for the same reason: it appears in the denominator of the dividend yield formula. A high P/E ratio means the stock price is high relative to the company’s profitability.

This section has shown, from the trader’s perspective, how fast the stock price is expected to rise to maintain stability in the P/E ratio and stock price. So, from the company’s perspective, how should it promote stock price increases to meet expectations?

What Drives Stock Price Growth

As mentioned earlier, the price-to-earnings ratio reflects traders’ expectations for the company’s future. To keep the company’s P/E ratio and stock price stable, how can the stock price be driven to rise as expected? From the formulas that make up the stock price:

- First, the stock price needs to rise enough to outperform the risk-free rate plus the risk premium, that is, the cost of equity. Technology companies usually have very low dividend yields.

- The driving force behind stock price growth comes from the company’s net profit, and net profit needs to increase in proportion to the stock price to support it.

- Net profit is broken down into market size, market share, and net profit margin, which are the directions for seeking growth.

- Dream building. Even if the company’s profitability remains unchanged, weaving optimistic expectations of the future for traders can drive up the price-to-earnings ratio, thereby raising the stock price.

From the company’s perspective, the best strategy is to seek high-growth emerging markets:

- For example, the global cloud storage market is growing at a rate of over 20% annually [46]. Simply entering this market can be expected to clear the 11.6% cost of equity mentioned earlier. It doesn’t even require outperforming peers; it is akin to free-riding.

- Compared to mature large enterprises, small emerging companies (SMBs) do not carry the burden of an existing market. Instead, they have stronger momentum for stock price growth.

- Technology, innovation, and new markets are necessary to sustain stock prices.

Secondly, companies can seek to increase market share:

- Increasing market share means competing with rivals, and the company’s performance must be better than its peers. This is a challenging path.

- On the other hand, this means that in a low-growth mature market, it is more difficult for companies to meet stock price growth expectations. Being large and mature is not necessarily an advantage.

The next direction is to improve net profit margin:

- A good approach is to sell high value-added products, leverage comparative advantages, raise the level of technology, and increase market recognition.

- Another approach is to seek economies of scale. As scale expands, fixed costs per unit decrease, and net profit margin improves.

- A common approach is cost reduction and efficiency improvement. When net profit margins are low, cost reduction and efficiency improvement are more effective. See the figure below.
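One way to see why cost reduction matters more at low margins is a short calculation. The sketch below is a simplified model, not the author’s figure: it assumes revenue stays constant and treats everything that is not net profit as cost. If the net profit margin is m, costs are (1 - m) of revenue, so cutting costs by a fraction x raises profit by x * (1 - m) / m.

```python
def profit_uplift(net_margin, cost_cut):
    """Relative increase in net profit when all costs are cut by `cost_cut`.

    Simplified model: revenue is unchanged and every non-profit dollar is cost.
    """
    cost_ratio = 1.0 - net_margin
    new_margin = net_margin + cost_cut * cost_ratio
    return new_margin / net_margin - 1.0


# The same 1% cost cut at a low and a high net profit margin.
low = profit_uplift(net_margin=0.05, cost_cut=0.01)
high = profit_uplift(net_margin=0.30, cost_cut=0.01)
print(f"5% margin:  profit +{low:.1%}")
print(f"30% margin: profit +{high:.1%}")
```

Under these assumptions, a 1% cost cut lifts profit by roughly 19% at a 5% margin but only about 2.3% at a 30% margin.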

In addition, companies can increase their price-to-earnings ratio by creating dreams:

- The price-to-earnings ratio reflects traders’ expectations of the stock market. Creating dreams raises expectations and sells concepts without requiring improvements in the company’s profitability.

- This method is suitable for businesses with large initial investments that have economies of scale or technological accumulation. However, once the dream is broken, the stock price can fall rapidly.

Finally, what does the stock price increase of a real company look like? Taking MSFT [58] as an example:

- The company’s overall revenue grew by 17% year-over-year, while net profit grew even faster, reaching 20%. An outstanding performance.

- Xbox revenue grew by 62%, Azure cloud services by 31%, Dynamics 365 by 23%, and Intelligent Cloud by 21%. All far exceeded the 11.6% cost of equity.

- In addition, Office, Windows, Search, and LinkedIn all showed good growth, ranging between 10% and 15%.

Team Goals

Stock price analysis helps build a top-down framework, from the company’s top level to specific teams, clarifying what goals to work towards:

-

Company needs the stock price increase to meet traders’ expectations and cover the cost of equity (how fast the stock price should rise).

-

Growth goals decomposed into market size, market share, net profit margin, and dream-building (what drives stock price growth).

-

For a specific team, a plan needs to be developed to achieve the above growth.

-

For a specific product under team management, its market size, market share, net profit margin, etc., need to reach the growth targets.
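The decomposition above can be read as an accounting identity: market capitalization = market size × market share × net profit margin × price-to-earnings ratio. Revenue is market size times share, and earnings are revenue times margin. The following is a minimal sketch of how growth in each factor compounds, assuming the share count is constant so that the stock price moves with market capitalization. The function name and sample rates are hypothetical.

```python
from math import prod


def combined_growth(**factor_growth: float) -> float:
    """Compound the growth rates of multiplicative factors into one rate."""
    return prod(1 + g for g in factor_growth.values()) - 1


if __name__ == "__main__":
    # Hypothetical yearly growth of each factor for one product line.
    total = combined_growth(
        market_size=0.05,        # the market itself grows 5%
        market_share=0.02,       # share of that market grows 2%
        net_profit_margin=0.03,  # margin improves 3% (relative)
        pe_ratio=0.0,            # no change in expectations
    )
    print(f"combined growth: {total:.2%}")
```

With these sample rates the combined growth is about 10.3%, slightly more than the 10% sum of the individual rates because the factors multiply.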

What exactly is the growth target?

-

Based on the previous analysis, taking MSFT as an example, the growth target is an annual 10.9%.

-

For other companies, calculate according to the previous formula: 4.6% - dividend yield + Beta coefficient * (12.5% - 4.6%). Dividend yield and Beta coefficient are specific to each stock and can be looked up directly [49]. The result is usually around 11% (internet technology companies).

-

For products occupying emerging markets, it is even required that their growth exceeds the above growth targets to compensate for the company’s products in the market decline phase.

-

Ordinary products should reach the above targets as the company’s average. They constitute the majority of the company. However, the average requirement of 11% is not low.

-

Products below the average are possible. This means they are in the market decline phase, employees face the risk of layoffs, and career development opportunities are limited.

-

Essentially, the growth target is to outperform the stock market index and government bond yields.
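The growth target formula above can be sketched in a few lines. The 4.6% and 12.5% constants are taken from the formula in the text and appear to correspond to the government bond yield and the stock market index return. The parameter names and the sample Beta and dividend yield are assumptions for illustration, not figures for any real stock.

```python
def growth_target(
    beta: float,
    dividend_yield: float,
    risk_free: float = 0.046,      # 4.6% from the article's formula
    market_return: float = 0.125,  # 12.5% from the article's formula
) -> float:
    """Required yearly stock price growth: cost of equity minus dividend yield."""
    cost_of_equity = risk_free + beta * (market_return - risk_free)
    return cost_of_equity - dividend_yield


if __name__ == "__main__":
    # Hypothetical stock: Beta of 1.0 and a 1% dividend yield.
    print(f"growth target: {growth_target(beta=1.0, dividend_yield=0.01):.1%}")
```

With a Beta of 1.0 the cost of equity equals the market return, so the target is simply 12.5% minus the dividend yield. A higher Beta raises the target, and a higher dividend yield lowers it.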

Final question:

-

For individual employees, how to achieve at least an 11% average annual growth? Note that this is required every year. (Today, are you holding the company back?)

-

For teams, how to develop a 3-5 year plan to ensure 11% or more growth each year? This is where Vision and Strategy come into play.

Note

This article is a personal, non-professional analysis. All content expresses the author’s personal views and does not constitute any investment advice regarding the assets mentioned.

The Market of Storage Systems

Business strategy analysis can typically be broken down into the levels of customers, products, company, and competitors, with further depth (see the diagram below). Customers, products, and competitors can be summarized as the “market” landscape. This chapter will provide an overview of the storage system market, listing the main market segments, product functions, and participants. Subsequent chapters will delve deeper.

In an ever-changing market landscape, where do we stand? What will the market map look like in 3-5 years or 10 years, and where should we be? Understanding the market is the foundation of Vision and Strategy. Focusing on the market, we can gradually reveal its structure and growth potential, what constitutes value, demand, evolution cycles, and the driving factors behind them.

(See details below)

Classification

The first question is how to classify the storage market? This chapter uses the following classification to organize the content. The letters before the subsection titles correspond to the classification groups.

-

A. The classic classification is cloud storage and primary storage. Cloud storage comes from the public cloud. The term primary storage [49] is mostly used by Gartner, referring to storage systems deployed on the customer’s premises that serve critical data, usually from traditional storage vendors. Primary storage is also called “enterprise storage.” Additionally, another major category of storage used locally by enterprises is backup and archive systems.

-

B. According to the interface used, storage can be classified as object, block, and file systems. Object storage services consist of immutable BLOBs queried by Key, typically images, videos, virtual machine images, backup images, etc. Block storage is usually used by virtual machines as their disk mounts. File storage has a long history, storing directories and files that users can directly use, commonly including HDFS, NFS, SMB, and others. Additionally, databases can also be considered storage.

-

C. According to storage media, storage can be classified as SSD, HDD, and tape systems. SSD storage is expensive and high-performance, often used for file systems and block storage. HDD storage is cheap and general-purpose, often used for object storage or storing cold data. Tape storage is generally used for archival storage. Additionally, there is fully in-memory storage, typically used as cache or analytical databases.

The above classification of the storage market is classic and commonly used, and it is convenient for organizing this chapter. In reality, however, products in the storage market are more organic and intertwined: they penetrate each other’s markets to gain competitive advantages. For example:

-

A. Cloud storage also sells edge storage deployed near customers, such as AWS S3 Express. Primary storage also offers cloud-deployed and cloud offloading versions, such as NetApp ONTAP. Backup and archival are especially cost-effective in cloud storage, such as AWS Glacier.

-

B. Object storage is becoming increasingly like a file system, such as the AWS S3 Mountpoint that simulates a file system, supporting metadata and search on objects, and hierarchical object paths. Databases include products with Key-Value interfaces like RocksDB, while SQL databases often support unstructured data, similar to object storage. Block storage is not only used for virtual machine disks but can also provide page storage for databases. Additionally, the underlying layers of various storage systems can be unified into shared log storage, such as Azure Storage, Apple FoundationDB, and the Log is Database design.

-

C. SSD storage often offloads cold data to HDD storage to save the expensive cost of SSDs. HDD storage often uses SSDs as cache or write staging. Memory is used as cache and index for various storage media, and in-memory storage systems often support writing cold data or logs to SSD.

In addition, for simplicity, this chapter omits some minor classifications. For example,

-

Based on the size of user enterprises, the market can be classified into SMB, large enterprises, and special fields. This classification is based on the customer side.

-

Enterprise storage is also often classified as DAS, SAN, and NAS. This classification partially overlaps with object, block, and file storage.

-

Besides tape, archival storage can also use DNA technology, which is currently developing rapidly.

-

Cyberstorage is an emerging storage category in the context of ransomware, but it is more often integrated as a security feature within existing products.

-

Vector databases are an emerging type of database in the context of AI, while traditional databases often integrate vector support as well.

A. Cloud Storage

For predicting the future direction of the market, analysis reports from consulting firms (Gartner, IDC, etc.) are good sources of information. Although the full reports are paid, there are usually free ways to reach their content:

-

Leading companies are usually willing to provide free public versions as a form of self-promotion.

-

Blogs and reports, although not first-hand information, can also reflect the main content. Some bloggers have specialized channels.

-

Adding filetype:pdf before a Google search query can effectively find materials.

-

Adding "Licensed for Distribution" after a Google search query can find publicly available Gartner documents.

-

Switching between English and Chinese search engines, as well as Scribd, can find different content. Chinese communities may have some documents saved.

-

In addition, reading the user manuals of leading products can also help understand the main features and evaluation metrics in the field.

Fortune predicts the global cloud storage market size to be around $161B, with an annual growth rate of approximately 21.7% [46]. In comparison, the global data storage market size is around $218B, with an annual growth rate of about 17.1% [60]. It can be seen that:

-

The cloud storage market has an excellent growth rate. Combined with the Understanding Stock Prices section, it is clear that this growth rate is very favorable for supporting stock prices, without needing to rely heavily on squeezing competitors or cutting costs.

-

In the long term, data storage is trending towards being largely replaced by cloud storage. This is because the proportion of cloud storage is already high and its growth rate exceeds that of overall data storage. At least, this is what the forecasts indicate.
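The reasoning about cloud storage's share can be checked with a compound-growth projection. The following is a minimal sketch, assuming both forecasts share the same base year and scope (the text does not state this) and that the growth rates stay constant. The function names are illustrative.

```python
from typing import Optional


def cloud_share(
    years: int,
    cloud: float = 161.0,       # cloud storage market, $B [46]
    total: float = 218.0,       # overall data storage market, $B [60]
    cloud_cagr: float = 0.217,  # 21.7% yearly growth [46]
    total_cagr: float = 0.171,  # 17.1% yearly growth [60]
) -> float:
    """Cloud storage as a fraction of all data storage after `years` years."""
    cloud_future = cloud * (1 + cloud_cagr) ** years
    total_future = total * (1 + total_cagr) ** years
    return cloud_future / total_future


def years_until_share(threshold: float, max_years: int = 50) -> Optional[int]:
    """First whole year in which the projected share reaches `threshold`."""
    for years in range(max_years + 1):
        if cloud_share(years) >= threshold:
            return years
    return None  # threshold not reached within max_years


if __name__ == "__main__":
    for y in (0, 3, 5):
        print(f"year {y}: cloud share {cloud_share(y):.1%}")
    print("share reaches 100% in year:", years_until_share(1.0))
```

Extrapolated naively, the share starts at roughly 74% and passes 100% in year 8, which is impossible for a subset of the market. The two forecasts therefore cannot both hold for long, or they measure different scopes, so the projection is best read as a directional signal rather than a literal prediction.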

From Gartner’s Magic Quadrant for Cloud Infrastructure [61] (2024), the leading market participants can be identified:

-

Amazon AWS: A persistent leader. AWS has large-scale infrastructure globally, strong reliability, and an extensive ecosystem. AWS is the preferred choice for enterprises seeking scalability and security. However, its complex services can be challenging for new users.

-

Microsoft Azure: A leader. Azure benefits from hybrid cloud capabilities, deep integration with Microsoft products, and collaboration with AI leader OpenAI. Azure’s industry-specific solutions and collaborative strategy are attractive to enterprises. However, Azure faces scaling challenges and has received criticism regarding security.

-

Google GCP: A leader. Leading in AI/ML innovation, the Vertex AI platform is highly praised, and its cloud-native technologies are distinctive. In environmental sustainability and AI services, GCP is appealing to data-centric organizations. However, GCP falls short in enterprise support and traditional workload migration.

-

Oracle OCI: Leader. OCI excels in providing flexible multi-cloud and sovereign cloud solutions, attracting enterprises that require robust integration capabilities. Its investments in AI infrastructure and partnership with NVIDIA have solidified its market position. However, OCI’s generative AI services and resilient architecture remain insufficient.

-

Alibaba Cloud: Challenger. As a major player in the Asia-Pacific region, Alibaba Cloud leads in domestic e-commerce and AI services. Despite having an excellent partner ecosystem, Alibaba Cloud’s global expansion is constrained by geopolitical issues and infrastructure limitations.

-

IBM Cloud: Niche player. IBM leverages its strengths in hybrid cloud and enterprise-focused solutions, seamlessly integrating with Red Hat OpenShift. Its solutions are attractive to regulated industries. However, its product portfolio is fragmented, and its Edge strategy is underdeveloped.

-

Huawei Cloud: Niche player. Huawei is a key player in emerging markets, with strengths in integrated cloud solutions for the telecommunications sector. It excels in AI/ML research and has achieved success in high-demand enterprise environments. However, geopolitical tensions and sanctions limit its global expansion.

-

Tencent Cloud: Niche player. Optimized for scalable and distributed applications, with unique advantages in social network integration. However, its global partner ecosystem is limited, and it lags behind global peers in maturity.

What key features should cloud storage provide? Gartner’s Cloud Infrastructure Scorecard [62] (2021) compares major public cloud providers, showing the list of categories as seen in the figure below. AWS’s strong capabilities are evident.

On the other hand, cloud storage can be viewed as gradually moving traditional storage functions to the cloud, benchmarking cloud storage against primary storage. From this perspective, what features should cloud storage have? Which features are already present in primary storage, and which might cloud storage develop in the future? What are the key metrics for measuring storage? See the next section on primary storage.

A. Primary Storage

This article uses primary storage to mean enterprise storage deployed on-premises rather than in the cloud, serving critical data; it is a long-established, traditional storage domain. Its growth rate roughly tracks the overall storage market, as seen from [60] and its accompanying chart (previous section), at about 17.1% annually, while it is gradually being eroded by cloud storage. Of course, in reality, primary storage has already deeply integrated with the cloud.

From Gartner’s Magic Quadrant for Primary Storage [59] (2024), the leading participants in this market can be identified:

-

Pure Storage: A persistent leader. Through Pure1, it provides users with proactive SLAs, benefiting IT operations and maintenance. The integrated control plane requires no external cloud communication or reliance on AIOps. The DirectFlash Module operates directly on raw flash memory, driving innovation in hardware, SLAs, and data management. However, Pure Storage lags in user diversification outside the US, lifecycle management plans increase array asset and support costs, and it does not support compute-storage separation.

-

NetApp: A leader. NetApp offers ransomware recovery guarantees and immutable snapshots. It simplifies IT operations through Keystone policies and Equinix Metal services. The BlueXP control plane provides sustainability monitoring to manage energy consumption and carbon emissions. However, NetApp does not offer competitive ransomware detection guarantees for block storage, its product line does not support larger 60TB/75TB SSD drives, and it does not support compute-storage separation.

-

IBM: Leader. IBM’s consumption plan offers unified pricing for product lifecycle and upgrades, providing guarantees for energy efficiency. Flash Grid supports partitioning, migration, continuous load optimization, and cross-platform functionality. However, IBM does not offer capacity-optimized QLC arrays, does not provide file services on block storage, and local flash deployments do not support performance and capacity separation.

-

HPE: Leader. HPE’s Alletra servers allow users to independently scale capacity and performance to save costs. GreenLake can be deployed identically on-premises and on AWS, enabling hybrid management. Load simulation provides users with comprehensive global recommendations for performance and capacity load placement. However, HPE lags in Sustainability and Ransomware aspects, does not support larger 60TB/75TB SSD drives, and there is confusion in product-load combinations.

-

Dell Technologies: Leader. After acquiring EMC, Dell offers a flexible full line of storage products, with APEX providing multi-cloud management and orchestration across on-premises and cloud environments. PowerMax and PowerStore deliver industry-leading 5:1 data reduction and SLA, integrated with Data Domain data backup. However, Dell does not provide a unified storage operating system suitable for mid-range and high-end, which increases management complexity.

-

Huawei: Challenger. Huawei’s multi-layer Ransomware protection is excellent, using network collaboration. Flash arrays offer a three-year 100% reliability and 5:1 capacity reduction guarantee. NVMe SSD FlashLink supports high disk capacity, accelerated by an ASIC engine. However, Huawei is limited in the North American region, does not offer multi-cloud expansion solutions for AWS, Azure, or GCP, customers are concentrated in a few vertical sectors increasing risk, and multiple storage product licenses are overly complex.

-

Infinidat: Challenger. Infinidat enjoys a good reputation in the high-end global enterprise market, offering high-quality services. SSA Express can consolidate multiple smaller flash arrays into a more cost-effective single InfiniBox hybrid array. Data can be recovered from immutable snapshots after a cyberattack. However, Infinidat lacks mid-range products, the cloud version of InfuzeOS is limited to a single-node architecture, and SSDs only support 15TB drives.

-

Hitachi Vantara: Challenger. Hitachi allows users to upgrade to the next-generation solution within five years of installation to reduce carbon emissions. EverFlex simplifies the subscription process for users, charging based on actual usage. EverFlex Control modularizes features, allowing users to customize according to platform needs. However, Hitachi lags in ransomware detection, does not offer disaggregated scaling of compute and storage, and falls behind in QLC SSDs used for backup.

-

IEIT SYSTEMS: Niche player. IEIT features a unique backplane and four-controller design with autonomous load balancing, scalable up to 48 controllers. It offers online anti-ransomware capabilities through snapshot rollback. The Infinistor AIOps tool provides performance workload planning and simulation. However, IEIT is unknown outside the Chinese market, lags in global multi-cloud expansion, and trails in the independent software vendor (ISV) ecosystem.

-

Zadara: Niche player. Zadara offers global, highly skilled managed services based on low-cost object storage and a disaggregated key-value architecture, using flexible lifecycle management to reduce hardware waste. Hardware in multi-tenant environments can be dynamically reconfigured. However, Zadara’s SLAs are limited, such as in ransomware protection, with smaller commercial scale and coverage, and third-party integrations and ISVs depend on managed service providers.

What functions should primary storage have? Combining the Magic Quadrant report [59], Gartner Primary Storage Critical Capabilities report [64] (2023), and the Enterprise Storage Mainstream Trends report [66] (2023), we can see:

-

Consumption-based Sales Model: Unlike the traditional purchase of complete storage hardware and software, this model is similar to cloud services, charging based on actual consumption. Accordingly, SLAs are redefined according to user-end metrics, such as 99.99% availability. Gartner predicts that by 2028, 33% of enterprises will invest in adopting the Consumption-based model, rapidly growing from 15% in 2024. Related concept: Storage as a Service (STaaS).

-

Cyberstorage: Detection and protection against ransomware are becoming standard for enterprises, including features such as file locking, immutable storage, network monitoring, proactive behavior analysis, and Zero Trust [65]. Gartner predicts that by 2028, two-thirds of enterprises will adopt Cyber liability, rapidly increasing from 5% in 2024.

-

Software-defined Storage (SDS): SDS frees users from vendor-proprietary hardware, providing cross-platform, more flexible management solutions that utilize third-party infrastructure to reduce operational costs. On the other hand, SDS allows for the decoupled deployment of compute and storage resources, independently and elastically scalable, improving economic efficiency. AIOps capabilities become important and are often combined with SDS. The use of public cloud hybrid cloud features becomes common, which are also often categorized under SDS.

-

Advanced AIOps: For example, real-time event streams, proactive capacity management and load balancing, continuous optimization of costs and productivity, responding to critical operational scenarios such as Cyber Resiliency combined with global monitoring, alerting, reporting, and support.

-

SSD / Flash Arrays are growing rapidly. Gartner predicts that by 2028, 85% of primary storage will be flash arrays, gradually increasing from 63% in 2023, while flash prices may drop by 40%. QLC Flash is becoming widespread, bringing ultra-large SSD drives of 60TB/75TB with better power consumption, space, and cooling efficiency.

-

Single Platform for File and Object. For unstructured data, a Unified Storage platform supports both file and object simultaneously. Integrated systems save costs, and multiprotocol simplifies management. Files and objects themselves have similarities; images, videos, and AI corpus files are used similarly to objects, while objects with added metadata and hierarchical paths resemble files.

-

Hybrid Cloud File Data Services. Hybrid cloud provides enterprises with unified access and management across Edge, cloud, and data centers, with consistent namespaces and no need for copying. Enterprises can perform low-latency access and large-scale ingestion at the Edge, complex processing in data centers, and store cold data and backups in the public cloud. It is evident that traditional storage products are moving to the cloud, and public clouds are developing Edge deployments.

-

Data Storage Management Services. Similar to data lakes, data management services read metadata or file content to classify, gain insights, and optimize data. They span multiple protocols, including file, object, NFS, SMB, S3, and different data services such as Box, Dropbox, Google, and Microsoft 365. Security, permissions, data governance, data protection, and retention are also topics of discussion. Against the backdrop of rapid growth in unstructured data, enterprises need to extract value from data and manage it according to importance.

-

Other common features include: Multiprotocol support for multiple access protocols. Carbon Emissions continuous measurement, reporting, and energy consumption control. Non-disruptive Migration Service, ensuring 100% data availability during migration from the current array to the next. NVMe-oF (NVMe over Fabrics), a native NVMe SAN network. Container Native Storage, providing native storage mounting for containers and Kubernetes. Captive NVMe SSD, similar to Direct Attached drives, customized for dedicated scenarios to enhance performance and endurance.

Additionally,

-

Key user scenarios that primary storage needs to support include OLTP online transaction processing, virtualization, containers, application consolidation, hybrid cloud, and virtual desktop infrastructure (VDI).

-

Key capability metrics for primary storage: performance, storage efficiency, RAS (reliability, availability, and serviceability), scalability, ecosystem, multitenancy and security, and operations management.

Another way to understand the required functions of primary storage is through user feedback. How do users perceive our products? [67] lists feedback from user interviews about likes and dislikes regarding a certain storage product. [68] provides a common user tender document. From these, some easily overlooked aspects can be seen:

-

Usability. For example, simple configuration and convenient management hold an important place in users’ minds, comparable to performance and cost factors. For enterprise users, permission management and integration with other commonly used systems and protocols are also crucial, such as file sharing and Active Directory. Customer service and support can translate into real monetary value.

-

Resource Efficiency. Storage deployed in users’ local data centers often faces issues of idle resources or some resources being exhausted while others remain unused. Expansion is a common demand and needs to be compatible and integrated with legacy systems. Cloud-like load migration, balancing, and continuous optimization are very useful. Disaggregated scaling and purchasing resources separately, avoiding bundling, can bring economic benefits to users.

-

The screenshot includes only part of the user feedback; the full text can be found in [67][68] original sources.

What is the future development direction of primary storage technology? This can be learned from Gartner’s Hype Cycle. The following figure comes from [69][70], with different classifications. It can be seen that:

-

Object Storage, Distributed File Systems, and Hyperconvergence have been validated. DNA Storage, Edge Storage, Cyberstorage, Computational Storage, and Container Storage and Backup are emerging.

-

Distributed Hybrid Infrastructure (DHI) and Software-Defined Storage (SDS) are technologies poised to bring transformation. DHI provides cloud-level solutions for users’ on-premises data centers, such as consumption-based models, elasticity, resource efficiency, and seamless connectivity with external public clouds and Edge clouds. Its related concept is Hybrid Cloud.

-

The Hype Cycle of Storage and Data Protection chart is similar. Hybrid Cloud Storage is similar to DHI. Immutable Data Value falls under Cyberstorage. Enterprise Information Archiving belongs to archival storage, which is also a validated technology and will be discussed in the next section.

A. Backup and Archival Storage

The first question is, how large is the market size and growth rate for backup and archival storage?

- Market Research Future predicts [72] that enterprise backup storage will have a market size of about $27.6B in 2024, growing thereafter at an annual rate of approximately 11.2%. Market growth is mainly driven by data volume growth, data protection, and the demand for ransomware protection.

- As part of enterprise backup storage, archival storage holds a smaller share but grows faster. Grand View Research forecasts [73] a market size of about $8.6B in 2024, with an annual growth rate of approximately 14.1% thereafter. Market growth is primarily driven by data volume increase, stricter compliance requirements, data management, and security.

The next question is, who are the main market players in backup and archival storage? From Gartner’s Magic Quadrant for Enterprise Backup and Recovery Software Solutions [74] (2023) [75] (2024), the leading players in this market can be identified:

-

Commvault: Leader. BaaS coverage is extensive, including SaaS applications, multi-cloud, and on-premises deployment, supporting Oracle OCI. Backup & Recovery interoperability is good. Commvault brings enterprise-level features at a competitive price. However, Commvault’s innovation in on-premises deployment lags behind the cloud, with some users reporting poor experience, and the HTML5 user interface lacks features compared to the on-premises application.

-

Rubrik: Leader. Rubrik innovates in product pricing portfolios, such as offering capacity-based user tiers for Microsoft 365. Rubrik excels in ransomware protection, including machine learning and anomaly detection in backup data. Rubrik’s scalability and excellent customer service continue to attract large enterprises. However, Rubrik needs to balance investments in security and backup, with limited SaaS application coverage and optional cloud storage mainly on Azure Storage.

-

Veeam: Leader. Veeam has a loyal user base and the Veeam Community. Veeam supports hybrid cloud and all major public clouds. Veeam has a large number of partners worldwide. However, Veeam is slow to respond to market demands for BaaS, SaaS, and Ransomware; the software is overly complex, and implementing a secure platform deployment requires careful design and configuration.

-

Cohesity: Leader. Helios is a SaaS-based centralized control plane that provides a unified, intuitive management experience for all backup products. DataProtect and FortKnox allow users to choose multiple public cloud storage locations. Cohesity actively forms Data Security Alliances with vendors from different fields. However, Cohesity’s new investments introduce third-party technology dependencies, its Backup as a Service (BaaS) capabilities are insufficient, and its geographic coverage is limited.

-

Veritas: Leader. Veritas offers comprehensive backup products, such as cloud and scale-out & scale-up solutions. NetBackup and Alta services support cloud-native operations and run Kubernetes on public clouds. Services and partners cover the globe. However, some of Veritas’s cloud products are still in early stages, it focuses on large enterprises and is less friendly to small and medium businesses, and it lacks SaaS application support (Microsoft Azure AD, Azure DevOps, Microsoft Dynamics 365, and GitHub).

-

Dell Technologies: Leader. PowerProtect provides data protection and Ransomware protection, supporting both on-premises and cloud deployments. It allows users to balance capacity across multiple appliances. It offers consistent management across multiple public clouds and is available on the Marketplace. However, Dell lacks a SaaS control plane, does not support alternative backup storage options, and advanced Ransomware analysis requires a dedicated environment.

-

Others: challengers, visionaries, and niche players. Briefly mentioned, see the original report [74] for details.

The next question is, what are the key features required for backup and archiving products? From [74], a series of Core Capabilities and Focus Areas can be seen:

-

Backup and Data Recovery: Based on this foundation, support for on-premises data centers and public cloud. Support for point-in-time backups, business continuity, disaster recovery, and other scenarios. Configure multiple backup and retention policies aligned with company policies. Tier cold and hot backup data to different locations, such as public cloud, third-party vendors, and object storage. Global deduplication and data reduction.

-

Cyberstorage: Backup data to immutable storage, Immutable Data Vault. Detect and defend against ransomware. Support disaster and attack recovery testing and drills. Provide protection for different targets such as containers, object storage, and Edge, covering on-premises, cloud, and hybrid cloud environments. Fast and reliable recovery, restoring archives, virtual machines, file systems, bare-metal machines, and different points-in-time.

-

Control Plane: A centralized control plane, unified across different products, unified locally and in hybrid cloud environments. Manages distributed backup and recovery tasks, manages testing and drills. Manages company compliance, data protection, and retention policies. Integrates with common other SaaS products and BaaS products. The control plane should be SaaS-based, cloud-like, rather than requiring users to manage installation and upgrades themselves.

-

Cloud-native: Backup software itself can be deployed cloud-natively, for example on Kubernetes. Data protection covers cloud-native workloads, such as DBaaS, IaaS, and PaaS. Integrates with public cloud services, supports storing data in the cloud, and supports scheduling tasks in the cloud. Backup products provide services in a BaaS manner close to the cloud. Payment is based on actual usage (consumption-based), rather than forcing users to purchase entire appliances.

-

GenAI & ML: Supports generative AI, for example in task management, troubleshooting, and customer support. Supports machine learning, for example for ransomware detection and automatic data classification.

The final question is, what is the future development direction of backup and archiving technology? This can be learned from Gartner’s Hype Cycle [69] (2024), as shown in the figure below. It can be seen that:

- Data archiving, archive-dedicated appliances, and data classification have been validated. Cyberstorage, generative AI, cloud recovery (CIRAS)[76], backup data reuse analysis, and others are emerging.

B. File Storage

File storage holds an important position in enterprises and cloud storage. First, how large is the market size of file storage, and how fast is its growth rate? The VMR report [78] points out,

-

Distributed file systems & object storage had a market size of about $26.6B in 2023, with a compound annual growth rate of approximately 16%. This growth rate is roughly comparable to primary storage, slightly slower than overall cloud storage.

-

In many reports, file systems and object storage are combined in statistics. Indeed, the user scenarios for these two types of storage overlap, and in recent years their development has also absorbed each other’s characteristics. See the “Intertwined” section of this article.

-

Additionally, the Market Research Future report [79] provides the market size for (cloud) object storage alone (object storage is mainly cloud-based). By comparison, the object storage market size in 2024 is only $7.6B, with an annual growth rate of about 11.7%, lower than that of file storage.

-

Another report from VMR [81] gives the market size for block storage, which can be used for comparison. In 2023, it was about $12.8B, with an annual growth rate of approximately 16.5%. The growth rate of the block storage market size is faster than that of object storage and similar to file storage.

In Gartner’s Magic Quadrant for file and object storage platforms [77] (2024), the main players in this market can be seen. Note that file systems and object storage are still combined in the statistics. Also note, this mainly targets storage vendors, similar to primary storage, rather than public cloud (public cloud is covered in the “Cloud Storage” section).

-

Dell Technologies: Leader. After acquiring EMC, Dell has the broadest portfolio of software and hardware products, including unstructured data and purpose-built products. Dell has a global supply chain and suppliers. Dell works closely with Nvidia and invests in AI projects. However, PowerScale lacks a global namespace and Edge caching, faces intensified competition from modern flash storage with different architectures, and relies on ISVs to address critical needs.

-

Pure Storage: Leader. FlashBlade uses NVMe QLC SSDs, offering the industry’s highest density and lowest power consumption per TB, with pricing competitive with HDD hybrid arrays. The AIOps and monitoring capabilities of Evergreen//One and Pure1 help ensure user SLAs. FlashBlade partners with Equinix Metal to extend on-premises infrastructure globally. However, the Evergreen//Forever program significantly increases capital expenditure, ransomware detection capabilities are limited, and hybrid cloud support is limited, for example for deployments on AWS, Azure, and GCP using VMs and containers.

-

VAST Data: Leader. VAST’s strategic partnerships and marketing have greatly expanded its base of large customers. VAST uses QLC flash, advanced data reduction algorithms, and high-density racks. End users recognize its excellent customer service, including product knowledge, pre-sales, architecture, ordering, and deployment. However, VAST lacks its own branded integrated appliances, making it difficult to attract conservative global enterprises; frequent software updates cause instability; it lacks enterprise features such as synchronous replication, Stretched Cluster, Geodistributed Erasure Coding, and Active Cyber Defense; and it has limited hybrid cloud appeal.

-

IBM: Leader. IBM leads in the HPC market and combines it with AI. File and object storage provide a global namespace across data centers, cloud, Edge, and non-IBM storage. IBM continuously enhances Ceph storage, which is favored by open-source users and unifies file, block, and object storage. However, IBM’s product portfolio is complex, cloud support is insufficient, and file storage tends to focus on HPC rather than general scenarios.

-

Qumulo: Leader. Qumulo offers the simplicity of SaaS and cloud elasticity on Azure. Its software provides consistent functionality and performance both on-premises and in the cloud. Qumulo’s global namespace enables access across on-premises and multiple clouds. However, Qumulo lacks ransomware detection capabilities, does not provide its own hardware and relies on third parties, and has limited global coverage.

-

Huawei: Challenger. OceanStor Pacific offers a unified platform for file, block, and object storage. From AI performance to data management, Huawei possesses proprietary hardware technologies, including chips and flash memory. Customer support and service are highly rated. However, U.S. sanctions and geopolitical issues limit global expansion, support for other public clouds like AWS, Azure, and GCP is limited, and flexible SDS solutions are not offered.

-

Nutanix: Visionary, with completeness of vision rated ahead of all Leaders. The NUS platform can consolidate various user storage workloads and centrally manage them across hybrid cloud. NUS simplifies implementation, operations, monitoring, and scaling management. Customer support services are recognized for reliability and responsiveness. However, the hyperconverged platform is not favored by users who only want to purchase storage, file and object storage have limited acceptance in hybrid cloud deployments, and it does not support RDMA access to NFS, making it unsuitable for low-latency scenarios.

-

WEKA: Visionary. The parallel file system is suitable for the most demanding large-scale HPC and AI workloads. The converged mode allows the file system and applications to run on the same server, improving GPU utilization. Hybrid cloud is widely available across public clouds and Oracle OCI. However, backup and archiving solutions are not cost-effective, S3 and object support are limited, and it lacks ransomware protection, AIOps, synchronous replication, data efficiency guarantees, and geographically distributed object storage.

-

Scality: Visionary. Scality’s RING architecture supports EB-level deployments, with independent scalability of performance and capacity. Scality pursues a pure software solution that can run on a wide range of standard hardware, whether at the Edge or in data centers. RING data protection supports geographic distribution across multiple availability zones, with zero RPO/RTO and extremely high availability and durability. However, as an SDS solution, it relies on external vendors and lacks the capability to deliver turnkey appliances; file access is implemented as POSIX integrated with object storage, making it unsuitable for HPC.

-

Others: Niche players in specific fields. They are only mentioned briefly here; see the original report [77] for details.

What are the main features that file and object storage systems should have? The Gartner Magic Quadrant report yields a series of Core Capabilities and Top Priorities, listed below. These are largely consistent with the main features of primary storage, backup, and archival storage.

-

Global Namespace: Unified management and access of files across local data centers, Edge, and multiple public clouds. Supports geographic distribution and replication protection. Supports hybrid cloud, S3, and multiple file access protocols. Unified Storage: Files, blocks, and objects are served by a unified platform. A single platform handles high performance and data lakes.

-

AIOps: Supports AIOps, simplified and unified management configuration, and automation. Provides excellent customer service in knowledge and architectural solutions. Data management, such as metadata classification, cost optimization, data migration, analysis, and security. Data lifecycle management. Metadata indexing, file and object labeling/tagging. Software-defined storage (SDS).

-

Cyberstorage: Provides ransomware detection and protection, maintaining business continuity during attacks, followed by response and data recovery. Traditional security features such as data encryption and authentication remain essential.

-

Cost and Performance: Uses QLC flash with advantages in capacity and power consumption. Increases rack storage density. Data reduction technologies such as deduplication, compression, and erasure coding, as well as data efficiency guarantees. Uses flash or SSD to accelerate file access, provides caching, and performs data reduction on flash. RDMA access reduces latency, Edge storage reduces latency. Supports linear scaling, supports separate scaling of performance and capacity, and properly handles performance and capacity bursts. STaaS model with consumption-based payment. Manages power consumption and carbon emissions.

-

Different User Scenarios: General file systems, databases, objects (or using files in an object manner), HPC, and AI represent different user scenarios, each with trade-offs in functionality and performance. See the “Enterprise Files - Data Volume” chart from Nasuni below [80].

File storage is also one of the primary storage functions. Its future development trends and Hype Cycle are therefore not repeated here; refer to the “Primary Storage” section.

B. Object Storage

Object storage and file storage are often combined in statistics due to their similar functions, such as in Gartner’s Magic Quadrant for File and Object Storage Platforms [77]. The previous section “File Storage” already included object storage, so it will not be repeated here.

On the other hand, the classic scenario for object storage is cloud storage, as mentioned in the previous section “Cloud Storage,” which will not be repeated here. The functionalities required for cloud storage can also be benchmarked against storage vendors, as seen in the “File Storage” section.

B. Block Storage

The VMR report [81] provides the market size and growth rate for block storage, which has already been included in the chart in the “File Storage” section.

Block storage is one of the core functions of primary storage and is usually combined into primary storage statistics, which have already been covered in the “Primary Storage” section and will not be repeated here. Modern platforms are often unified storage systems, simultaneously providing file, block, and object services.

On the other hand, block storage is one of the classic scenarios of cloud storage, as mentioned in the previous section “Cloud Storage”, and will not be repeated here.

B. Database

What is the market size and growth rate of databases? According to the forecast from Grand View Research:

-

Databases had a market size of approximately $100.8B in 2023, with an annual growth rate of 13.1% [82]. Among them, the global cloud database market was about $15.05B in 2022, with an annual growth rate of 16.3% [83].

-

Compared with the previous section “Cloud Storage”, it can be found that: 1) The main market for databases is non-cloud. 2) Cloud databases grow faster than non-cloud databases, but far more slowly than cloud storage (21.7%). 3) The cloud storage market is much larger than the database market.
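The claim that the database market is mainly non-cloud can be checked with simple arithmetic on the two quoted figures. The following is a rough sketch, assuming the 2022 cloud database figure can be rolled forward one year at its quoted 16.3% growth rate and that the two reports [82] and [83] use compatible market definitions. Both are assumptions made for illustration, and the function and constant names are hypothetical.

```python
# Figures (USD billions) as quoted above from Grand View Research.
TOTAL_DB_2023_B = 100.8   # all databases, 2023 [82]
CLOUD_DB_2022_B = 15.05   # cloud databases, 2022 [83]
CLOUD_DB_CAGR = 0.163     # cloud database annual growth rate [83]


def roll_forward(size_b: float, cagr: float, years: int) -> float:
    """Compound a market size forward at a constant annual growth rate."""
    return size_b * (1.0 + cagr) ** years


def cloud_share_2023() -> float:
    """Estimated cloud share of the total database market in 2023."""
    # Assumption: the 16.3% rate applies to the 2022 -> 2023 step.
    cloud_2023_b = roll_forward(CLOUD_DB_2022_B, CLOUD_DB_CAGR, 1)
    return cloud_2023_b / TOTAL_DB_2023_B


if __name__ == "__main__":
    print(f"Estimated cloud share of databases in 2023: {cloud_share_2023():.1%}")
```

Under these assumptions the cloud share comes out below one fifth of the total, which is consistent with point 1) above.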

Based on the market size and growth rates from the previous sections, various storage types can be plotted and compared, showing:

-

Cloud storage has the largest market size and the fastest growth rate (21.7%), offering good investment value. Next is the non-cloud database market, which is large in size but has a lower growth rate (13.1%).

-

The markets for file storage, block storage, and cloud databases are smaller but have decent growth rates (16% to 17%). Object storage is weaker, with a small market size and a lower growth rate (11.7%).

-

In backup and archival storage, archival storage (14.1%) is growing faster than backup storage (11.21%). The former is growing quickly, while the latter has a larger existing volume.
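One way to make the growth rates in this comparison more tangible is to convert each into a doubling time, that is, the number of years a market needs to double at a constant annual rate. The sketch below uses only the growth rates quoted in this chapter. The constant-rate assumption is a simplification, and `GROWTH_RATES` and `doubling_time_years` are illustrative names.

```python
import math

# Annual growth rates as quoted in this chapter.
GROWTH_RATES = {
    "cloud storage": 0.217,
    "block storage": 0.165,
    "cloud database": 0.163,
    "file storage": 0.16,
    "archival storage": 0.141,
    "database": 0.131,
    "object storage": 0.117,
    "backup storage": 0.1121,
}


def doubling_time_years(cagr: float) -> float:
    """Years needed to double at a constant annual growth rate `cagr`."""
    if cagr <= 0:
        raise ValueError("doubling time is only defined for positive growth")
    # Solve (1 + cagr) ** t == 2 for t.
    return math.log(2) / math.log1p(cagr)


if __name__ == "__main__":
    # Print segments from fastest to slowest growth.
    for name, rate in sorted(GROWTH_RATES.items(), key=lambda kv: -kv[1]):
        print(f"{name:<18} {rate:6.2%}  doubles in ~{doubling_time_years(rate):.1f} years")
```

A few percentage points of growth translate into a difference of several years in doubling time, which is part of why the faster-growing segments look more attractive from an investment perspective.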

Zoomed-in view of the smaller markets:

Although databases store data, market segmentation generally does not classify databases as part of the “storage” market. Storage usually refers to files, blocks, and objects, while databases run on top of file and block storage. Databases are a large, complex topic with a persistently active market and deserve a separate article, whereas data lakes span both database and storage attributes (structured and unstructured data).

This article focuses on storage and therefore does not delve further into databases. Only the following references are listed:

- Gartner Cloud Database Magic Quadrant (2024)[84].

- Gartner Data Management Technology Hype Cycle (2023)[71].

C. SSD Storage

Market Research Future predicts [85] that enterprise flash storage will have a market size of approximately $67.17B in 2025, with an annual growth rate of about 9.89%.

Flash storage is commonly used for primary storage, file storage, and block storage. These are the more common classifications in analyst reports and have been discussed in previous sections, so they are not repeated here. As for terminology, flash is the storage medium, while SSD usually refers to the drive that packages flash with a controller.

C. HDD Storage

Market Research Future predicts [87] that the HDD market size will be about $62.43B in 2024, with an annual growth rate of approximately 6.1%. Note, this is the market for disks, not storage. The HDD market is facing decline, mainly due to being replaced by SSDs.

HDD storage is commonly used for primary storage, hybrid (flash) arrays, object storage, and backup systems. Analyst reports more commonly classify by these categories than by SSD/HDD, and storage systems often do not rely on a single medium. These categories have been discussed in previous sections and will not be repeated here.

C. Tape Storage

Market Research Future predicts [86] that tape storage will have a market size of approximately $3.5B in 2024, with an annual growth rate of about 5.82%. Compared to SSD and HDD, the market size of tape is small and its growth rate is low.

Tape is commonly used for archival storage, which has been discussed in previous sections and will not be repeated here.

C. Memory Storage

Memory storage is generally used for databases or caches. Storage is usually not purely memory-based because it is difficult to ensure data persistence, especially during power outages in data centers. Memory in storage systems is typically used for serving metadata or indexes and is not independent of other storage categories. Therefore, this section will skip memory storage.

Market Analysis

The previous chapter covered the main segments of the storage market, key participants, their products, core product requirements, and possible future directions. This chapter goes deeper: a closer look at the market reveals its structure and growth potential, driving factors, and core value.